Before you start, we recommend downloading the Social Distancing runtime environment, which contains a recent version of Python and all the packages you need to run the code explained in this post, including OpenCV. To download the runtime environment you will need to create an account on the ActiveState Platform – It’s free and you can use the Platform to create runtime environments for any projects of your own as well!

Given that COVID-19 is showing no signs of slowing down in many regions of the world, it’s more important than ever to maintain “social distancing” (also known as physical distancing) between people who are not from the same household, in both indoor and outdoor settings. Several national health agencies, including the Centers for Disease Control and Prevention (CDC), have recognized that the virus can be spread if “an infected person coughs, sneezes, or talks, and droplets from their mouth or nose are launched into the air and land in the mouths or noses of people nearby.”

To minimize the likelihood of coming in contact with these droplets, it is recommended that any two people stay at least 1.8 meters (approximately 6 feet) apart. Different governing bodies have different rules for safe social distancing and different penalties for breaking them.

We don’t advocate putting surveillance in place to enforce social distancing. Rather, as an interesting exercise, we will take advantage of how far machine learning and object detection have come in recognizing objects in an image. Let’s see how Python can be used to monitor social distancing.

Python can be used to detect people’s faces in a photo or video stream, and then estimate their distance from each other. While the method we’ll use is not the most accurate, it’s cheap, fast and good enough to learn something about the efficacy of computer vision when it comes to social distancing. This approach is also simple enough to be extended to an Android-compatible solution, so it can be used in the field.

Social Distancing, Python, and Trigonometry

Let’s start by outlining the process to address our use case:

- Pre-process images taken by a video camera to detect the contours of the components of the images (using Python)

- Classify each contour as a face or not (also using Python)

- Approximate the distance between faces detected by the camera (do you remember your trigonometry?)

To accomplish the Python parts, we’ll use the OpenCV library, which implements most of the state-of-the-art algorithms for computer vision. It’s written in C++ but has bindings for both Python and Java. It also has an Android SDK that could help extend the solution for mobile devices. Such a mobile application might be particularly useful for live, on demand assessments of social distancing using Python.

Steps 1 and 2 in our process are fairly straightforward because OpenCV provides methods to get results in a single line of code. The following code creates a function faces_dist, which takes care of detecting all the faces in an image:

    import cv2

    def faces_dist(classifier, ref_width, ref_pix):
        # centimeters represented by one pixel at the reference distance
        ratio_px_cm = ref_width / ref_pix
        cap = cv2.VideoCapture(0)
        while True:
            ok, img = cap.read()
            # stop if the camera did not return a frame
            if not ok:
                break
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            # detect all the faces on the image
            faces = classifier.detectMultiScale(gray, 1.1, 4)
            annotate_faces(img, faces, ratio_px_cm)
            k = cv2.waitKey(30) & 0xff
            # use ESC to terminate
            if k == 27:
                break
        # release the camera
        cap.release()

After starting the camera in video mode, we enter an infinite loop that processes each frame streamed from the camera. To simplify detection, the routine converts the frame to grayscale. The classifier passed as an argument then returns a list of tuples, one per detected face, each holding the X, Y coordinates of the top-left corner of the face along with the corresponding Width and Height, which together give us a rectangle.
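To make the shape of that data concrete, here is a minimal sketch that turns one (x, y, w, h) tuple into the corner points and center used for drawing. The helper name `describe_face` and the sample detections are hypothetical, not part of the original script.

```python
def describe_face(rect):
    """Return the top-left corner, bottom-right corner and center
    of an (x, y, w, h) rectangle (illustrative helper, not from the repo)."""
    # Cast to plain ints so the points can be passed to drawing functions.
    x, y, w, h = (int(v) for v in rect)
    top_left = (x, y)
    bottom_right = (x + w, y + h)
    center = (x + w // 2, y + h // 2)
    return top_left, bottom_right, center


# Hypothetical detections in the (x, y, w, h) layout described above.
faces = [(100, 80, 60, 60), (300, 90, 50, 50)]
for rect in faces:
    print(describe_face(rect))
```

The center points are what the distance calculation later in this post works with.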

Next, we call the annotate_faces function, which is in charge of drawing the detected rectangles and calculating the Euclidean distance between the faces detected:

    def annotate_faces(img, faces, ratio_px_cm):
        # MIN_DIST (in cm) and euclidean_dist are defined elsewhere in the script
        points = []
        for (x, y, w, h) in faces:
            # cast to plain ints so OpenCV accepts the point
            center = (int(x + w // 2), int(y + h // 2))
            cv2.circle(img, center, 2, (0, 255, 0), 2)
            # compare this face with every face seen so far in the frame
            for p in points:
                ed = euclidean_dist(p, center) * ratio_px_cm
                color = (0, 255, 0)
                if ed < MIN_DIST:
                    color = (0, 0, 255)
                # draw a rectangle over each detected face
                cv2.rectangle(img, (x, y), (x + w, y + h), color, 2)
                # put the distance as text just above the face's rectangle
                cv2.putText(img, "%.0fcm" % ed,
                            (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX,
                            0.5, color, 2)
                # draw a line between the faces detected
                cv2.line(img, center, p, color, 5)
            points.append(center)
        cv2.imshow('img', img)
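The pairing logic is easier to follow without the drawing calls. The sketch below compares every pair of face centers exactly once and reports the pairs that are too close. The function name `too_close_pairs`, the threshold value and the sample numbers are assumptions made for illustration, not values taken from the repository.

```python
import math
from itertools import combinations

# Assumed threshold in cm (roughly 6 feet); not taken from the repository.
MIN_DIST = 180.0


def too_close_pairs(centers, ratio_px_cm, min_dist=MIN_DIST):
    """Return (i, j, distance_cm) for every pair of centers closer than min_dist."""
    flagged = []
    # combinations() yields each unordered pair of faces exactly once.
    for (i, a), (j, b) in combinations(enumerate(centers), 2):
        dist_cm = math.dist(a, b) * ratio_px_cm  # pixels -> centimeters
        if dist_cm < min_dist:
            flagged.append((i, j, dist_cm))
    return flagged


# Hypothetical face centers in pixels and a made-up ratio of 0.25 cm per pixel.
centers = [(0, 0), (300, 400), (2000, 0)]
print(too_close_pairs(centers, ratio_px_cm=0.25))
```

With these sample values only the first two faces are flagged, because they are 500 pixels (125 cm) apart.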

Object Detection Using Python

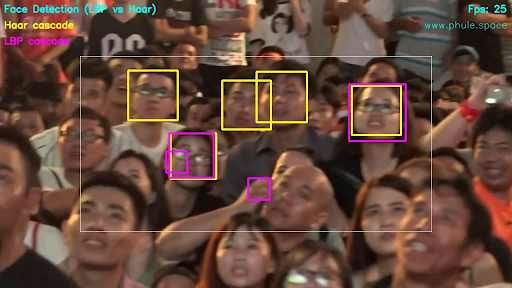

Now that we’re well on our way to solving the problem, let’s step back and review Python’s object detection capabilities in general, and human face detection in particular. We’re using a classifier to do human face detection. In the simplest sense, a classifier can be thought of as a function that chooses a category for a given object. In our case, the classifier is provided by the OpenCV library (of course, you could write your own classifier, but that’s a subject for another time). OpenCV ships with classifiers that have already been trained on an extensive dataset of images featuring human faces. As a result, they can be very reliable in detecting areas of an image that correspond to human faces. But because real-world use cases can differ, OpenCV provides two models for detection:

- Local Binary Pattern (LBP) divides the image into small neighborhoods (3×3 pixels) and compares each pixel surrounding the center with the center pixel. A neighbor that is at least as bright as the center is assigned 1; otherwise it is assigned 0. The resulting bits form a feature vector, which the algorithm checks against the pre-trained ones to decide whether an area is a face or not. This classifier is fast, but more prone to false positives.

- Haar Classifier is based on the features in adjacent rectangular sections of the image whose intensities are computed and compared. The Haar-like classifier is slower than LBP, but typically far more accurate.
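To illustrate the LBP idea, here is a minimal sketch of the basic operator on a single 3×3 patch, using the common convention that a neighbor at least as bright as the center contributes a 1 bit. This shows the textbook operator only; the cascade shipped with OpenCV may use its own variant, and the function name `lbp_code` is hypothetical.

```python
def lbp_code(patch):
    """Compute the basic 8-bit LBP code for the center of a 3x3 grayscale patch."""
    center = patch[1][1]
    # Neighbors visited clockwise, starting at the top-left corner.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for row, col in offsets:
        bit = 1 if patch[row][col] >= center else 0
        code = (code << 1) | bit  # append the bit to the code
    return code


# Made-up intensities; the center pixel has value 100.
patch = [
    [90, 120, 60],
    [200, 100, 30],
    [100, 80, 150],
]
print(lbp_code(patch))  # bits 01001011 -> 75
```

A histogram of such codes over a region is the kind of feature vector that gets compared with the trained model.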

Our use case for object detection is quite specific since it pertains to social distancing: we need to detect faces, which both classifiers are suited for. But we also need to build an area that represents the most prominent part of the face. That’s because in order to calculate the distance between faces, we first need to approximate the distance the faces are from the camera, which will be based on a comparison of the widths of the facial areas detected.

As a result, we have chosen the Haar Classifier since in our tests the rectangle detected for the face was better than that approximated by LBP.
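For readers curious about what a Haar-like feature actually computes, the following sketch evaluates the simplest kind: the pixel sum of the left half of a window minus the pixel sum of the right half, using an integral image so that each rectangle sum costs four lookups. It is a simplified illustration of the idea with hypothetical function names, not OpenCV's implementation.

```python
def integral_image(img):
    """Build a summed-area table with an extra leading row and column of zeros."""
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0
        for c in range(cols):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a w x h window (w is assumed to be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Made-up image: dark on the left, bright on the right.
img = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
ii = integral_image(img)
print(two_rect_feature(ii, 0, 0, 4, 2))  # 40 - 800 = -760
```

A large magnitude means a strong intensity edge inside the window, which is the kind of cue a cascade combines across many features.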

Approximating Distances

Now that we can reliably detect the faces in each image, it’s time to tackle step 3: calculating the distance between the faces, that is, approximating the distance between the centroids of the rectangles drawn. To accomplish that, we first need the ratio between centimeters and pixels, measured with a reference object of known width photographed at a known distance. The reference_pixels function calculates the width of that reference object in pixels, which is used in the faces_dist function described earlier:

    import cv2
    import imutils

    def reference_pixels(image_path, ref_distance, ref_width):
        # open reference image
        image = cv2.imread(image_path)
        edged = get_edged(image)
        # detect all contours over the gray image
        allContours = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)
        allContours = imutils.grab_contours(allContours)
        markerContour = get_marker(allContours)
        # width in pixels of the minimum-area rectangle around the marker
        pixels = (cv2.minAreaRect(markerContour))[1][0]
        return pixels
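As a worked example of how that pixel width becomes a conversion factor, the sketch below divides the known width of the reference object by its measured width in pixels, the same division faces_dist performs. The function name `px_to_cm_ratio` and the numbers are made up for illustration, and the ratio is strictly valid only for objects at roughly the reference distance from the camera.

```python
def px_to_cm_ratio(ref_width_cm, ref_width_px):
    """Centimeters represented by one pixel at the reference distance."""
    if ref_width_px <= 0:
        raise ValueError("reference width in pixels must be positive")
    return ref_width_cm / ref_width_px


# Hypothetical reference: a 21 cm wide sheet that spans 420 pixels in the image.
ratio = px_to_cm_ratio(ref_width_cm=21.0, ref_width_px=420.0)
print(ratio)

# Two face centers 600 pixels apart would then be about 600 * ratio cm apart.
print(round(600 * ratio, 1))
```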

We can use the classic Euclidean distance formula between each centroid, and then transform it to measurement units (cm) using the ratio calculated:

D = square_root( (point1_x - point2_x)^2 + (point1_y - point2_y)^2 )

With this simple formula, our annotate_faces function labels the line drawn between each pair of centroids with the approximate distance. If the approximation is lower than the minimum distance required, the line is drawn in red.
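The euclidean_dist helper called by annotate_faces is not listed in this post. A minimal sketch of what it could look like, assuming points are (x, y) tuples in pixels, follows; the version in the repository may differ.

```python
import math


def euclidean_dist(p1, p2):
    """Straight-line distance between two (x, y) points, in pixels."""
    return math.sqrt((p1[0] - p2[0]) ** 2 + (p1[1] - p2[1]) ** 2)


# Two hypothetical face centers forming a 3-4-5 triangle scaled by 100.
print(euclidean_dist((130, 110), (430, 510)))  # 500.0
```

Multiplying the result by the centimeters-per-pixel ratio gives the approximate real-world distance.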

Object Detection Programmed for Social Distancing

The complete source code for this example is available in my GitHub repository. There, you’ll find the code in which we pass three arguments to our Python script:

- The path of the reference image

- The reference distance in centimeters

- The reference width in centimeters

Given these parameters, our code will calculate the focal length of the camera, and then use detect_faces.py to determine the approximate distances between the faces the camera detects. To stop the infinite loop, just press the ESC key. The GitHub repo also contains reference images and two datasets for OpenCV’s classifiers.

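    import sys
    import cv2

    classifier = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    #classifier = cv2.CascadeClassifier('lbpcascade_frontalface_improved.xml')

    # command-line arguments: reference image, reference distance (cm), reference width (cm)
    IMG_SRC = sys.argv[1]
    REF_DISTANCE = float(sys.argv[2])
    REF_WIDTH = float(sys.argv[3])

    FL = largest_marker_focal_len( IMG_SRC, REF_DISTANCE, REF_WIDTH )
    # faces_dist takes three arguments: classifier, reference width, reference value in pixels
    faces_dist(classifier, REF_WIDTH, FL)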
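The focal length calculation itself is not listed in this post. One common way to do it, which we assume here purely for illustration, is triangle similarity: an object of known width photographed at a known distance gives a focal length in pixels, and that focal length then converts any observed pixel width back into a distance from the camera. The function names and numbers below are hypothetical.

```python
def focal_length_px(ref_width_px, ref_distance_cm, ref_width_cm):
    """Focal length in pixels from a reference object of known size and distance."""
    return ref_width_px * ref_distance_cm / ref_width_cm


def distance_to_camera_cm(focal_px, real_width_cm, observed_width_px):
    """Estimate how far an object of known real width is from the camera."""
    return real_width_cm * focal_px / observed_width_px


# Hypothetical reference: a 15 cm wide marker, 60 cm away, spanning 300 pixels.
focal = focal_length_px(ref_width_px=300, ref_distance_cm=60, ref_width_cm=15)
print(focal)  # 1200.0

# The same marker spanning only 150 pixels must be twice as far away.
print(distance_to_camera_cm(focal, real_width_cm=15, observed_width_px=150))  # 120.0
```

This is also why the detected face width matters: assuming faces have roughly similar real widths, a narrower rectangle suggests a face farther from the camera.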

To try out the code, start by downloading the Social Distancing runtime environment, which contains a recent version of Python and all the packages you need to run my code, including OpenCV.

NOTE: the simplest way to install the environment is to first install the ActiveState Platform’s command line interface (CLI), the State Tool.

If you’re on Windows, you can use PowerShell to install the State Tool:

IEX(New-Object Net.WebClient).downloadString('https://platform.www.activestate.com/dl/cli/install.ps1')

If you’re on Linux, run:

sh <(curl -q https://platform.www.activestate.com/dl/cli/install.sh)

Now run the following to automatically create a virtual environment, and then download and install the Social Distancing runtime environment into it:

state activate Pizza-Team/Social-Distancing

To learn more about working with the State Tool, refer to the documentation.

Conclusions

Of course, this is not a production-ready way to ensure social distancing and keep you safe from close talkers. You can improve this solution by using more accurate approaches to detect faces (such as neural networks created with either OpenCV or TensorFlow), or by using more than a single camera (similar to how Kinect works). But for our purposes of object detection, we have a working solution with relatively minimal effort.
