If the title of this post caught your eye, you may be wondering what Natural Language Processing (NLP) has to do with two of Sesame Street’s popular characters. The answer is that two language models named after them, Google’s BERT and Baidu’s ERNIE, are revolutionizing NLP.

NLP is at the heart of common technologies you probably use every day, including:

- Siri on your iPhone or Alexa with your Echo, which respond to your voice input

- Chatbots, which attempt to respond to your chat messages

- Spam filters, which attempt to determine whether the content of an email message is legitimate

- Autocomplete and spell checking features that provide suggestions based on trying to understand message context

- And many more

This post will introduce you to two of the newest – and most successful to date – NLP frameworks, including how they work and what the differences are between them. I’ll also give you some clues as to which framework might be best for which kind of NLP task you might be undertaking.

But first, a little background.

Introducing BERT and ERNIE

BERT and ERNIE are unsupervised pre-trained language models (or frameworks) that are used extensively by the NLP community.

BERT for Natural Language Processing Modeling

It all started when BERT, which stands for Bidirectional Encoder Representations from Transformers, was developed by the Google AI Language Team.

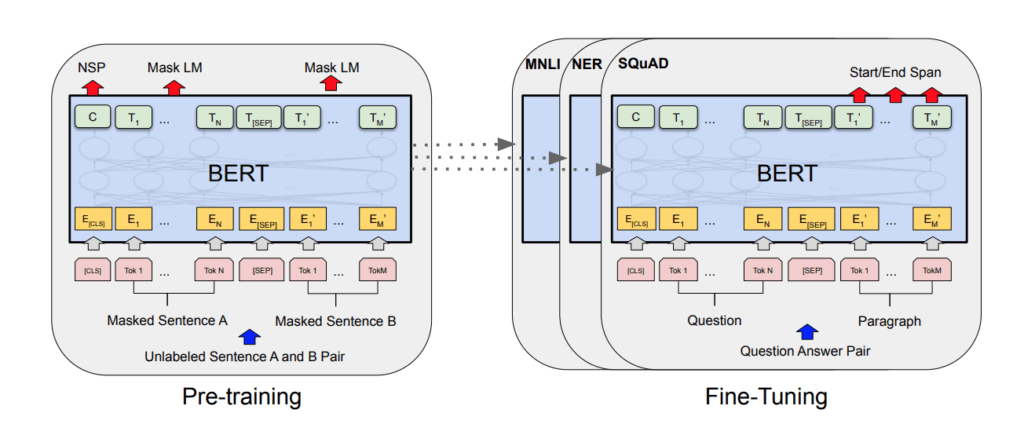

BERT is built on the Transformer, an architecture based on an attention mechanism that learns contextual relations between words and sub-words in a text. In its original form, the Transformer has two separate mechanisms:

- An encoder for reading text input

- A decoder, which produces a prediction for the task

BERT’s goal is to generate a language model, so only the encoder mechanism is needed here.

Unlike directional models, which read the text input sequentially (left-to-right or right-to-left), the Transformer encoder reads the entire sequence of words at once. This allows the model to learn the context of a word from the words that appear both to its left and to its right. Two training strategies make this work: Masked Language Model (MLM), in which a fraction of the input tokens is hidden and the model must predict them from the surrounding context, and Next Sentence Prediction (NSP), in which the model predicts whether one sentence actually follows another in the original text.
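To make MLM a little more concrete, here is a minimal sketch of the masking step in plain Python. It assumes the recipe from the BERT paper (select about 15% of tokens; replace 80% of those with `[MASK]`, 10% with a random token, and leave 10% unchanged). The function name `mask_tokens`, the whitespace tokenization and the toy vocabulary are my own illustrative choices, not part of any BERT release.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels).

    labels[i] holds the original token at positions the model must
    predict, and None at positions that are not part of the objective.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                masked.append(MASK)               # 80%: hide the token
            elif roll < 0.9:
                masked.append(rng.choice(vocab))  # 10%: random replacement
            else:
                masked.append(tok)                # 10%: keep it unchanged
        else:
            labels.append(None)
            masked.append(tok)
    return masked, labels

# Toy usage: whitespace "tokenization" and a tiny vocabulary (assumptions).
tokens = "the cat sat on the mat".split()
masked, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.5, seed=1)
print(masked)
print(labels)
```

Because the model never knows which positions were selected, it has to use the words on both sides of every position, which is where the bidirectional context comes from.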

You can find more details about the Transformer in Google’s paper “Attention Is All You Need”.

ERNIE for Natural Language Processing Modeling

Early in 2019, Baidu introduced ERNIE (Enhanced Representation through kNowledge IntEgration), a knowledge-integration language representation model that drew a lot of praise in the NLP community because it outperformed Google’s BERT on multiple Chinese language tasks.

In July 2019, Baidu introduced ERNIE’s successor, ERNIE 2.0, which Baidu reports outperforms not only the first version, but also BERT and another pre-trained model known as XLNet. But how does ERNIE 2.0 work?

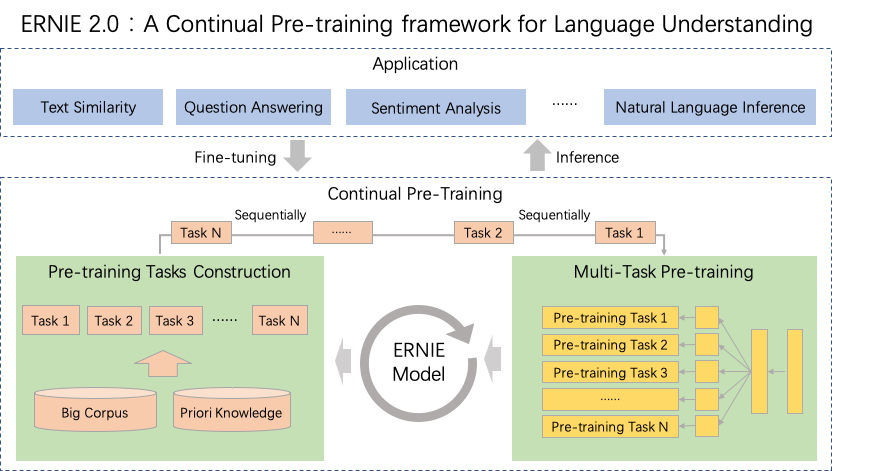

ERNIE 2.0 is a continual pre-training framework that uses multi-task learning (as seen in the following figure) to integrate lexical, syntactic and semantic information from large training corpora, building on what the model has already learned.

In fact, ERNIE 2.0’s multitasking framework enables the addition of multiple NLP tasks at any time.

Multitasking allows ERNIE 2.0 to:

- Incrementally train on several new tasks in sequence

- Accumulate the knowledge learned for use in future tasks

- Continue to apply learnings while still learning new things with each task

In other words, ERNIE 2.0 continually expands what it knows while retaining what it learned before. This is similar to the way humans learn, which makes it a notable step for Natural Language Processing.
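One way to picture this incremental schedule is the toy sketch below. It assumes that each newly added task is trained together with all earlier tasks, and that the parameters carry over from one stage to the next, which is how I read Baidu’s description of continual multi-task learning. The names `continual_multitask_schedule`, `train` and `count_updates` are hypothetical, and the "model" is just a dictionary of update counts rather than real weights.

```python
def continual_multitask_schedule(tasks):
    """Yield, stage by stage, the list of tasks that are trained together."""
    active = []
    for task in tasks:
        active.append(task)   # a new task arrives...
        yield list(active)    # ...and is trained alongside the earlier ones

def train(tasks, train_step, params=None):
    """Run every stage, carrying the parameters over between stages."""
    params = {} if params is None else params
    for stage in continual_multitask_schedule(tasks):
        for task in stage:
            params = train_step(params, task)
    return params

def count_updates(params, task):
    """Toy stand-in for a training step: count updates per task."""
    updated = dict(params)
    updated[task] = updated.get(task, 0) + 1
    return updated

counts = train(
    ["knowledge_masking", "sentence_reordering", "ir_relevance"],
    count_updates,
)
print(counts)
```

Earlier tasks keep receiving updates in every later stage, which is the mechanism that is meant to stop the model from forgetting them.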

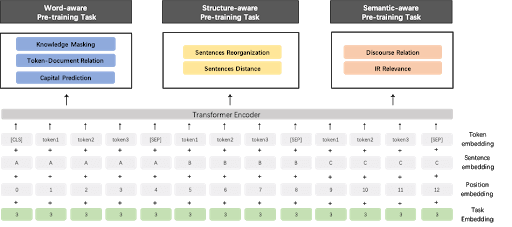

ERNIE 2.0, like BERT, uses a multi-layer Transformer. The Transformer captures the contextual information for each token in the sequence through self-attention and generates a sequence of contextual embeddings. A special classification token, [CLS], is placed at the start of every sequence, and a [SEP] token separates the segments in tasks that take multiple input segments.
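Here is a small sketch of how such an input sequence can be assembled. It follows the BERT convention of `[CLS] A [SEP] B [SEP]`, including a closing `[SEP]` after the last segment. The helper name `build_input` and the per-token segment ids are illustrative assumptions, and real models work on sub-word tokens rather than whole words.

```python
def build_input(segment_a, segment_b=None):
    """Assemble tokens and segment ids for one or two input segments."""
    tokens = ["[CLS]"] + list(segment_a) + ["[SEP]"]
    segment_ids = [0] * len(tokens)          # first segment, incl. [CLS]/[SEP]
    if segment_b is not None:
        tokens += list(segment_b) + ["[SEP]"]
        segment_ids += [1] * (len(segment_b) + 1)  # second segment + its [SEP]
    return tokens, segment_ids

tokens, segment_ids = build_input(["how", "are", "you"], ["fine", "thanks"])
print(tokens)
print(segment_ids)
```

The embedding produced at the `[CLS]` position is what sentence-level tasks such as classification typically read from.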

So now that we know a little more about how BERT and ERNIE work, what NLP tasks do they uniquely allow us to process better?

NLP Use Cases

To understand NLP use cases, we first need to understand NLP.

NLP is the study of the interactions between human language and computers. It combines computer science, artificial intelligence, and computational linguistics, which makes it a complex field with many use cases.

Typical NLP tasks include:

- Automatic Summarization – the process of generating a concise and meaningful summary of text from multiple sources

- Translation – not a simple word-for-word replacement, but the ability to accurately translate the complexity and ambiguity inherent in one language to another

- Named Entity Recognition – a subtask that seeks to locate entities in a text and classify them into predefined categories such as names, medical codes, locations, monetary values, etc.

- Relationship Extraction – identifies semantic relationships between two or more entities (e.g., married to, employed by, lives in, etc.)

- Sentiment Analysis – the analysis of one or more source texts in order to determine whether they have a positive, negative or neutral tone

- Speech Recognition – the ability to recognize not only what was said, but also to determine the intent of what was said

- Topic Segmentation – deciding where transitions from one topic to another are located within a document

- And many more

Over the past decades, each of these use cases has seen varying degrees of success, from the dictation software of yesteryear to modern day digital assistants, but they still have a long way to go.

Both BERT and ERNIE can help. Because they’re both pre-trained language models, we don’t have to start from scratch: we only need to fine-tune them on data for each of these use cases.

How to Use BERT for Natural Language Processing

Using BERT for any specific NLP use case is fairly straightforward. In fact, BERT can tackle a variety of language tasks, while only adding a small layer to its core model:

- In Named Entity Recognition (NER), the software receives a text sequence and has to mark the various types of entities appearing in the text. With BERT, training a NER model is as simple as feeding the output vector of each token into a classification layer that predicts the NER label.

- In Question Answering tasks (e.g., the Stanford Question Answering Dataset, aka SQuAD v1.1), the software receives a question regarding a text sequence and is required to mark the answer in the sequence. Using BERT, a Q&A model can be trained by learning two extra vectors that mark the beginning and the end of the answer.

- Classification tasks (e.g., sentiment analysis) are handled in the same way as Next Sentence Prediction: a classification layer is added on top of the Transformer output for the [CLS] token.
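The "small layer" idea can be sketched without any deep learning library. The toy code below assumes we already have one output vector per token from the encoder. It tags tokens by scoring each vector against one weight vector per label (the NER case), and it picks an answer span by scoring every token against a start vector and an end vector (the Q&A case). The function names, the 2-dimensional vectors and the `max_len` cap are all illustrative assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def tag_tokens(token_vectors, label_weights):
    """NER-style head: pick the highest-scoring label for every token."""
    return [
        max(label_weights, key=lambda label: dot(vec, label_weights[label]))
        for vec in token_vectors
    ]

def best_answer_span(token_vectors, start_vec, end_vec, max_len=30):
    """Q&A-style head: return (start, end) maximizing start + end score."""
    start_scores = [dot(vec, start_vec) for vec in token_vectors]
    end_scores = [dot(vec, end_vec) for vec in token_vectors]
    best, best_score = None, float("-inf")
    for i, start_score in enumerate(start_scores):
        # The end may not come before the start; max_len caps the span size.
        for j in range(i, min(i + max_len, len(token_vectors))):
            score = start_score + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best

# Made-up encoder outputs for four tokens.
token_vectors = [[0.1, 0.0], [0.9, 0.1], [0.2, 0.8], [0.0, 0.1]]
print(best_answer_span(token_vectors, start_vec=[1.0, 0.0], end_vec=[0.0, 1.0]))
print(tag_tokens(token_vectors, {"PER": [1.0, 0.0], "O": [0.0, 1.0]}))
```

During fine-tuning, the label weights and the start and end vectors are the only new parameters; everything else comes from the pre-trained model.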

Using ERNIE for Natural Language Processing

To pre-train ERNIE 2.0, Baidu constructed three different kinds of tasks: word-aware, structure-aware and semantic-aware pre-training tasks:

- The word-aware tasks (e.g., Knowledge Masking and Capitalization Prediction) allow the model to capture lexical information

- The structure-aware tasks (e.g., Sentence Reordering and Sentence Distance) capture the syntactic information of the corpus

- The semantic-aware tasks (e.g., Discourse Relation and IR Relevance) allow the model to learn semantic information
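What makes these tasks attractive is that their labels can be derived from raw text without manual annotation. The sketch below shows this for two of them, based on my reading of Baidu’s paper: Capitalization Prediction labels each token by whether it is capitalized, and Sentence Distance is a 3-class problem (0 for adjacent sentences in the same document, 1 for non-adjacent sentences in the same document, 2 for sentences from different documents). The function names and the document/index representation are assumptions for illustration only.

```python
def capitalization_labels(tokens):
    """1 if the token starts with an uppercase letter, otherwise 0."""
    return [1 if tok[:1].isupper() else 0 for tok in tokens]

def sentence_distance_label(doc_a, idx_a, doc_b, idx_b):
    """Label a sentence pair by document id and sentence position.

    0: adjacent in the same document
    1: same document, not adjacent
    2: different documents
    """
    if doc_a != doc_b:
        return 2
    return 0 if abs(idx_a - idx_b) == 1 else 1

print(capitalization_labels(["Baidu", "released", "ERNIE"]))
print(sentence_distance_label("doc1", 3, "doc1", 4))
print(sentence_distance_label("doc1", 0, "doc2", 1))
```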

For more information on testing, refer to Baidu’s paper.

Natural Language Processing: BERT vs. ERNIE

Baidu compared the performance of ERNIE 2.0, BERT and XLNet using pre-trained models on the English GLUE benchmark (General Language Understanding Evaluation), as well as on 9 popular Chinese datasets. Pre-training used data from Wikipedia, BookCorpus and Reddit, among other sources.

The results show that the ERNIE 2.0 model outperforms BERT and XLNet on 7 GLUE NLP tasks, and outperforms BERT on all 9 Chinese NLP tasks (e.g., Sentiment Analysis, Question Answering, etc.).

Given the results, it seems hard not to conclude that ERNIE 2.0 trumps BERT, but both ERNIE 2.0 and BERT are recent innovations in the use of language understanding systems:

- They both take approaches that were new to language representation modeling. BERT is as distinctive in its bidirectional approach as ERNIE 2.0 is in its continual, multi-task learning approach.

- They can both be applied to English and Chinese, two languages that are structurally and semantically very different from each other.

While the Baidu paper clearly gives the win to ERNIE 2.0 for English and Chinese, I wonder how the two would perform on other languages such as Japanese, Finnish or German.

More research needs to be done with a wider range of languages before any conclusion can really be reached regarding whether ERNIE 2.0 trumps BERT for language modeling.
