In this blog: Learn how to build a personal assistant using Python, for your application. Download the Virtual Assistant runtime environment and build your very own digital virtual assistant.

This tutorial will walk you through the basics of building your own digital virtual assistant in Python, complete with voice activation plus response to a few basic inquiries. From there, you can customize it to perform whatever tasks you need most.

The rise of automation, along with increased computational power, novel applications of statistical algorithms, and improved access to data, has resulted in the birth of the personal digital assistant market, popularly represented by Apple’s Siri, Microsoft’s Cortana, Google’s Google Assistant, and Amazon’s Alexa.

While each assistant may specialize in slightly different tasks, they all seek to make the user’s life easier through verbal interactions so you don’t have to search out a keyboard to find answers to questions like “What’s the weather today?” or “Where is Switzerland?”. Despite the inherent “cool” factor that comes with using a digital assistant, you may find that the aforementioned digital assistants don’t cater to your specific needs. Fortunately, it’s relatively easy to build your own.

Digital Virtual Assistant in Python – Step 1: Installing Python

To follow along with the code in this tutorial, you’ll need to have a recent version of Python installed. I’ll be using ActivePython, for which you have two choices:

- Download and install the pre-built Virtual Assistant runtime environment (including Python 3.6) for Windows 10, macOS, CentOS 7, or…

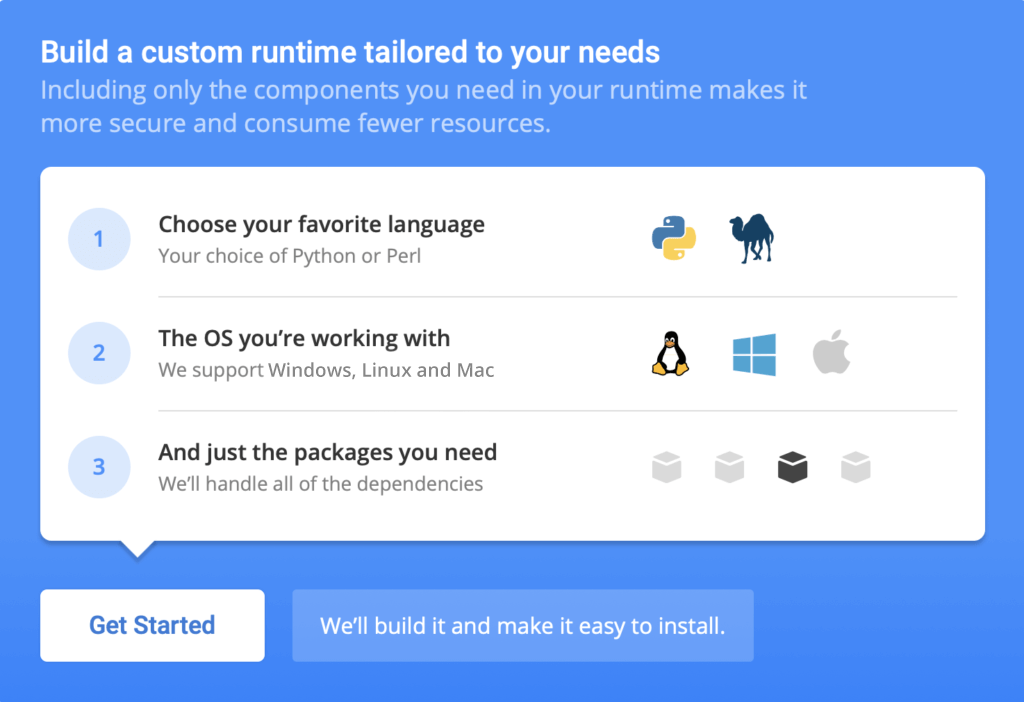

- Build your own custom Python runtime with just the packages you’ll need for this project, by creating a free ActiveState Platform account, after which you will see the following image:

Click the Get Started button and choose Python 3.6 and the OS you’re working in. In addition to the standard packages included in ActivePython, we’ll need to add a few third-party packages that can do speech recognition, convert text to speech, and play back audio:

- Speech Recognition Package – when you voice a question, we’ll need something that can capture it. The SpeechRecognition package allows Python to access audio from your machine’s microphone, transcribe audio, save audio to an audio file, and perform other similar tasks.

- Text to Speech Package – once your voiced question has been converted to text and the assistant has looked up an answer online, it will need to convert the response into a voiceable phrase. For this purpose, we’ll use the gTTS package (Google Text-to-Speech). This package interfaces with Google Translate’s API. More information can be found here.

- Audio Playback Package – all that’s left is to give voice to the answer. The mpyg321 package allows Python to play MP3 files.

Once the runtime builds, you can download the State Tool and use it to install your runtime into a virtual environment.

And that’s it! You now have Python installed, as well as everything you need to build the sample application. In doing so, ActiveState takes the (sometimes frustrating) environment setup and dependency resolution portion out of your hands, allowing you to focus on actual development.
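
Before moving on, it can be worth confirming that the third-party packages are importable from the interpreter you plan to use. The following is a minimal sketch rather than part of the original project: the helper name `missing_modules` is hypothetical, and the module names listed are simply the import names used later in this tutorial.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of top-level module names the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names used by the tutorial code (requests is needed for the weather query).
    required = ["speech_recognition", "gtts", "requests"]
    missing = missing_modules(required)
    if missing:
        print("Missing modules: " + ", ".join(missing))
    else:
        print("All required modules are available.")
```

If anything is reported as missing, the runtime was probably not activated in the current shell, or a package was left out when the runtime was built.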

All the code used in this tutorial can be found in my Github repo.

All set? Let’s go.

Digital Virtual Assistant in Python – Step 2: Voice Input

The first step in creating your own personal digital assistant is establishing voice communication. We’ll create two functions using the libraries we just installed: one for listening and another for responding. Before we do so, let’s import the libraries we installed, along with a few of the standard Python libraries:

    import speech_recognition as sr
    from time import ctime
    import time
    import os
    from gtts import gTTS
    import requests, json

Now let’s define a function called listen. This uses the SpeechRecognition library to activate your machine’s microphone, and then converts the audio to text in the form of a string. I find it reassuring to print out a statement when the microphone has been activated, as well as the text that the microphone hears, so we know it’s working properly. I also include exception handlers to cover common errors that may occur if the speech can’t be understood (for example, because of too much background noise), or if the request to the Google Web Speech API fails.

    def listen():
        r = sr.Recognizer()
        with sr.Microphone() as source:
            print("I am listening...")
            audio = r.listen(source)
        data = ""
        try:
            data = r.recognize_google(audio)
            print("You said: " + data)
        except sr.UnknownValueError:
            print("Google Speech Recognition did not understand audio")
        except sr.RequestError as e:
            print("Request Failed; {0}".format(e))
        return data
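
Because listen returns an empty string whenever recognition fails, a caller may want to try again a few times before giving up. The sketch below shows one way to do that. The name `listen_until_heard` and its `max_attempts` parameter are hypothetical, and the function takes the listener as an argument so it can be tried without a microphone.

```python
def listen_until_heard(listen_fn, max_attempts=3):
    """Call listen_fn until it returns a non-empty string or attempts run out.

    listen_fn is any zero-argument callable that returns the transcribed text,
    or "" when nothing was understood (the contract of listen() above).
    """
    for attempt in range(1, max_attempts + 1):
        text = listen_fn()
        if text:
            return text
        print("Attempt {0} of {1}: nothing understood.".format(attempt, max_attempts))
    return ""


if __name__ == "__main__":
    # Stand-in for listen(): fails twice, then "hears" a phrase.
    canned = iter(["", "", "how are you"])
    print(listen_until_heard(lambda: next(canned)))
```

In the real assistant you would pass the listen function itself, as in `listen_until_heard(listen)`.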

For the voice response, we’ll use the gTTS library. We’ll define a function respond that takes a string input, prints it, then converts the string to an audio file. This audio file is saved to the local directory and then played by your operating system.

    def respond(audioString):
        print(audioString)
        tts = gTTS(text=audioString, lang='en')
        tts.save("speech.mp3")
        os.system("mpg321 speech.mp3")
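
The respond function above shells out to the mpg321 command-line player, which may not be installed on every machine. As a hedged alternative, the sketch below picks a player based on the platform and passes arguments as a list, so file names with spaces need no shell quoting. The helper names are hypothetical. It assumes that afplay is available on macOS and that mpg321 has been installed separately elsewhere.

```python
import subprocess
import sys


def playback_command(mp3_path, platform=sys.platform):
    """Return an argument list for a command-line MP3 player on this platform.

    Assumption: afplay ships with macOS; elsewhere we fall back to mpg321,
    which must be installed separately.
    """
    if platform == "darwin":
        return ["afplay", mp3_path]
    return ["mpg321", mp3_path]


def play(mp3_path):
    """Play the file, passing arguments as a list so no shell quoting is needed."""
    subprocess.run(playback_command(mp3_path), check=False)
```

Inside respond, the final line could then become `play("speech.mp3")`.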

The listen and respond functions establish the most important aspects of a digital virtual assistant: the verbal interaction. Now that we’ve got the basic building blocks in place, we can build our digital assistant and add in some basic features.

Digital Virtual Assistant in Python – Step 3: Voiced Responses

To construct our digital assistant, we’ll define another function called digital_assistant and provide it with a couple of basic responses:

    def digital_assistant(data):
        # Keep listening unless the user explicitly asks us to stop.
        # Setting this up front avoids an error when no phrase matches.
        listening = True
        if "how are you" in data:
            respond("I am well")
        if "what time is it" in data:
            respond(ctime())
        if "stop listening" in data:
            listening = False
            print('Listening stopped')
        return listening

This function takes whatever phrase the listen function outputs as an input, and checks what was said. We can use a series of if statements to understand the voice query and output the appropriate response. To make our assistant seem more human, the first thing we’ll add is a response to the question “How are you?” Feel free to change the response to your liking.

The second basic feature included is the ability to respond with the current time. This is done with the ctime function from the standard time module.
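
Note that ctime returns a full date-and-time string (weekday, month, day, time, and year), which can be a lot to listen to. If you only want the time of day, one option is time.strftime. This is a small sketch; the function name `spoken_time` and the 24-hour format are my own choices, not part of the original code.

```python
import time


def spoken_time(now=None):
    """Return a short sentence with the time in 24-hour HH:MM form."""
    if now is None:
        now = time.localtime()
    return "It is " + time.strftime("%H:%M", now)


if __name__ == "__main__":
    print(spoken_time())
```

You could then call `respond(spoken_time())` in place of `respond(ctime())`.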

I also build in a “stop listening” command to terminate the digital assistant. The listening variable is a Boolean that is set to True when the digital assistant is active, and False when not. To test it out, we can write the following Python script, which includes all the previously defined functions and imported packages:

    time.sleep(2)
    respond("Hi Dante, what can I do for you?")
    listening = True
    while listening:
        data = listen()
        listening = digital_assistant(data)

Save the script as digital_assistant.py. Before we run the script via the command prompt, let’s check that ActivePython is running correctly by entering the following on the command line:

    $ python3.6

If ActivePython installed correctly, you should obtain an output that looks like this:

    ActivePython 3.6.6.3606 (ActiveState Software Inc.) based on
    Python 3.6.6 (default, Dec 19 2018, 08:04:03)
    [GCC 4.2.1 Compatible Apple LLVM 9.0.0 (clang-900.0.39.2)] on darwin
    Type "help", "copyright", "credits" or "license" for more information.

Note that if you have other versions of Python already installed on your machine, ActivePython may not be the default version. For instructions on how to make it the default for your operating system, ActiveState provides procedures here.
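
If you are unsure which interpreter a given command actually launches, a short script can report it. This is only an illustrative sketch using the standard sys module; the function name `interpreter_summary` is hypothetical.

```python
import sys


def interpreter_summary():
    """Return the interpreter path and its major.minor version string."""
    version = "{0}.{1}".format(sys.version_info.major, sys.version_info.minor)
    return sys.executable, version


if __name__ == "__main__":
    path, version = interpreter_summary()
    print("Running Python {0} from {1}".format(version, path))
```

Running it with the same command you intend to use for the assistant shows whether that command resolves to the installation you expect.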

With ActivePython now the default version, we can run the script at the command prompt using:

    $ python3.6 digital_assistant.py

You should see and hear the output:

    Hi Dante, what can I do for you?
    I am listening...

Now you can respond with one of the three possibilities we defined in the digital_assistant function, and it will respond appropriately. Cool, right?

How to Create a Digital Assistant Google Maps Query

I find myself frequently wondering where a certain city or country is with respect to the rest of the world. Typically this means I open a new tab in my browser and search for it on Google Maps. Naturally, if my new digital assistant could do this for me, it would save me the trouble.

To implement this feature, we’ll add a new if statement into our digital_assistant function:

    def digital_assistant(data):
        # Keep listening unless the user explicitly asks us to stop.
        listening = True
        if "how are you" in data:
            respond("I am well")
        if "what time is it" in data:
            respond(ctime())
        if "where is" in data:
            # Assumes the query has the form "where is <place>",
            # so the place name is the third word.
            words = data.split(" ")
            location_url = "https://www.google.com/maps/place/" + str(words[2])
            respond("Hold on Dante, I will show you where " + words[2] + " is.")
            # macOS-specific: open the URL in Google Chrome.
            maps_arg = '/usr/bin/open -a "/Applications/Google Chrome.app" ' + location_url
            os.system(maps_arg)
        if "stop listening" in data:
            listening = False
            print('Listening stopped')
        return listening

The new if statement picks up if you say “where is” in the voice query, and appends the next word to a Google Maps URL. The assistant replies, and a command is issued to the operating system to open Chrome with the given URL. Google Maps will open in your Chrome browser and display the city or country you inquired about. If you have a different web browser, or your applications are in a different location, adapt the command string accordingly.
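
Taking only the word after “where is” means a place such as “New Zealand” gets cut short, and the open command is specific to macOS. The sketch below shows one possible alternative. It keeps everything after the trigger phrase, percent-encodes it, and uses the standard webbrowser module to open the default browser. The function names `maps_url` and `show_on_map` are hypothetical.

```python
import webbrowser
from urllib.parse import quote


def maps_url(query):
    """Build a Google Maps URL from a phrase such as "where is New Zealand"."""
    # Keep everything after the first "where is", so multi-word names survive.
    _, found, place = query.partition("where is")
    place = place.strip()
    if not found or not place:
        return None
    # Percent-encode spaces and other unsafe characters in the place name.
    return "https://www.google.com/maps/place/" + quote(place)


def show_on_map(query):
    """Open the location in the default browser; returns False if none was named."""
    url = maps_url(query)
    if url is None:
        return False
    return webbrowser.open(url)
```

For example, `maps_url("where is New Zealand")` yields a URL ending in `New%20Zealand`, and a query with no place name returns None instead of raising an error.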

How to Create a Digital Assistant Weather Query

If you live in a place where the weather can change on a dime, you may find yourself searching for the weather every morning to ensure that you are adequately equipped before leaving the house. This can eat up significant time in the morning, especially if you do it every day, when the time can be better spent taking care of other things.

To implement this within our digital assistant, we’ll add another if statement that recognizes the phrase “What is the weather in..?”

    def digital_assistant(data):
        # Keep listening unless the user explicitly asks us to stop.
        listening = True
        if "how are you" in data:
            respond("I am well")
        if "what time is it" in data:
            respond(ctime())
        if "where is" in data:
            # Assumes "where is <place>", so the place name is the third word.
            words = data.split(" ")
            location_url = "https://www.google.com/maps/place/" + str(words[2])
            respond("Hold on Dante, I will show you where " + words[2] + " is.")
            # macOS-specific: open the URL in Google Chrome.
            maps_arg = '/usr/bin/open -a "/Applications/Google Chrome.app" ' + location_url
            os.system(maps_arg)
        if "what is the weather in" in data:
            api_key = "Your_API_key"
            weather_url = "http://api.openweathermap.org/data/2.5/weather?"
            # Assumes "what is the weather in <city>", so the city is the sixth word.
            words = data.split(" ")
            location = str(words[5])
            url = weather_url + "appid=" + api_key + "&q=" + location
            js = requests.get(url).json()
            if js["cod"] != "404":
                weather = js["main"]
                temp = weather["temp"]
                hum = weather["humidity"]
                desc = js["weather"][0]["description"]
                resp_string = " The temperature in Kelvin is " + str(temp) + " The humidity is " + str(hum) + " and The weather description is " + str(desc)
                respond(resp_string)
            else:
                respond("City Not Found")
        if "stop listening" in data:
            listening = False
            print('Listening stopped')
        return listening


    time.sleep(2)
    respond("Hi Dante, what can I do for you?")
    listening = True
    while listening:
        data = listen()
        listening = digital_assistant(data)

For the weather query to function, it needs a valid API key to obtain the weather data. To get one, go here and then replace Your_API_key with the actual value. Once we concatenate the URL string, we’ll use the requests package to connect with the OpenWeather API. This allows Python to obtain the weather data for the input city, and after some parsing, extract the relevant information.
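
Two details of the weather query are easy to trip over: city names with spaces need to be encoded in the URL, and the temperature comes back in Kelvin. The following sketch isolates those steps so they can be tried without a network call. The helper names are hypothetical, and the dictionary passed to `summarize_weather` in the demo is a hand-written sample containing only the fields the tutorial code reads, not a real API response.

```python
from urllib.parse import urlencode

WEATHER_URL = "http://api.openweathermap.org/data/2.5/weather"


def build_weather_url(city, api_key):
    """Return the request URL with the query parameters safely encoded."""
    return WEATHER_URL + "?" + urlencode({"appid": api_key, "q": city})


def kelvin_to_celsius(kelvin):
    """Convert a Kelvin temperature to Celsius, rounded to one decimal place."""
    return round(kelvin - 273.15, 1)


def summarize_weather(js):
    """Turn a decoded response into a sentence, or None if the fields are missing."""
    if "main" not in js or not js.get("weather"):
        return None
    temp_c = kelvin_to_celsius(js["main"]["temp"])
    return "It is {0} degrees Celsius with {1} percent humidity and {2}.".format(
        temp_c, js["main"]["humidity"], js["weather"][0]["description"])


if __name__ == "__main__":
    # Hand-written sample with only the fields used above (not real data).
    sample = {"main": {"temp": 293.15, "humidity": 40},
              "weather": [{"description": "clear sky"}]}
    print(build_weather_url("New York", "Your_API_key"))
    print(summarize_weather(sample))
```

Returning None when the expected fields are absent lets the caller fall back to a spoken message such as “City Not Found”, rather than failing on a missing key.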

Customizing your Digital Virtual Assistant in Python

There is a myriad of digital assistants currently on the market, including:

- Google Assistant and Siri, which focus primarily on helping users with non-work related tasks like leisure and fitness.

- Cortana, which focuses on work efficiency.

- Alexa, which is more concerned with retail.

With modest expectations in mind, each does its job relatively well. If you require more specificity, designing your own digital assistant is far from a pipe dream. Recent advances in speech recognition and text-to-speech conversion make it viable even for hobbyists. And working in Python greatly simplifies the task, giving you the ability to make any number of customizations to tailor your assistant to your needs.
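
As you add more commands, a long chain of if statements can become hard to maintain. One common alternative, sketched here under my own naming (`COMMANDS`, `dispatch`, and the handler functions are all hypothetical), is a table that maps trigger phrases to handler functions. Each handler returns the reply text, and the caller decides how to voice it.

```python
import time


def handle_greeting(data):
    return "I am well"


def handle_time(data):
    return time.ctime()


# Each entry pairs a trigger phrase with the function that builds the reply.
COMMANDS = [
    ("how are you", handle_greeting),
    ("what time is it", handle_time),
]


def dispatch(data, commands=COMMANDS):
    """Return (reply, keep_listening) for a transcribed phrase."""
    text = data.lower()
    if "stop listening" in text:
        return None, False
    for trigger, handler in commands:
        if trigger in text:
            return handler(data), True
    # Unrecognized input: say nothing and keep listening.
    return None, True
```

Adding a feature then means writing one handler and appending one entry to the table, while the main loop only needs to call dispatch and pass any reply to respond.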

- Sign up for a free ActiveState Platform account so you can download the Virtual Assistant runtime environment and build your very own digital virtual assistant.


Frequently Asked Questions

How do I create a Python virtual assistant?

- Install the pre-built Virtual Assistant Python environment.

- Create two voice communication functions, one for listening and another for responding.

- Create voiced responses to the kinds of questions you want your assistant to answer.

- Create the lookup routines the virtual assistant will perform when asked a specific question.

That’s it! Just follow the included code samples to make your assistant as simple or complicated as you like.

Create a free ActiveState Platform account so you can download the Virtual Assistant Python environment.

What can a Python virtual assistant do?

- Current time

- Local weather

- Song/podcast playback

- Turn on smart appliances/lights

- Phone calling

- Text writing

- News updates

- Internet searches

Learn more in the article above, or learn how to build a recommendation engine in Python.

How do I get my own Python desktop assistant?

Install the pre-built Virtual Assistant Python environment and follow the instructions in this post to build your own Python virtual assistant.

Why should I use Python to build a virtual assistant?

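Working in Python greatly simplifies the task and lets you make any number of customizations to tailor the assistant to your needs. Install our pre-built Virtual Assistant Python environment and follow the instructions in this post to build your own Python virtual assistant.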