In this blog: Join the Kaggle COVID-19 Research Challenge by downloading and installing the pre-built “Kaggle COVID Challenge” runtime, which contains a version of Python and just the data science packages you need to get started.

Python programming has been used to support healthcare for decades. But how is Python helping in COVID research? How can you as a programmer or a data scientist contribute to it?

The Kaggle COVID-19 Challenge is in response to a significant portion of the global community being affected by the COVID-19 pandemic. It addresses the need for research and comprehensive, transparent data surrounding the origin, transmission, and lifecycle of the virus. This research data is essential for making educated decisions about how to prevent and treat COVID-19 infections.

For the first time in the history of pandemics, we can use the power of computers and data science to sift through the vast amount of data related to a virus in the hopes of discovering insights that would otherwise go unnoticed. In light of this, a coalition of leading research groups has compiled a public dataset so that an international community of researchers, programmers, and data scientists can join the fight.

The dataset is hosted on Kaggle, where the coalition put together a friendly competition to steer the participants towards common goals. The tasks of this competition are intended to produce useful insights for the global medical community.

In this article, I’ll use Python to analyze some of the COVID-19 Open Research Challenge dataset in order to discover meaningful insights that can help the medical community in the fight against the coronavirus.

Using Python for COVID Research

To follow along with the code in this article, you’ll need to have a recent version of Python installed. We recommend downloading and installing the pre-built “Kaggle COVID Challenge” runtime, which contains a version of Python and just the packages used in this post. You can get a copy for yourself by doing the following:

- Install the State Tool on Windows using Powershell:

IEX(New-Object Net.WebClient).downloadString('https://platform.www.activestate.com/dl/cli/install.ps1')or install the State Tool on Linux / Mac:sh <(curl -q https://platform.www.activestate.com/dl/cli/install.sh) - Run the following command to download the build and automatically install it into a virtual environment:

state activate Pizza-Team/Kaggle-COVID-Challenge

All of the code used in this article can be found on my GitLab repository.

All set? Let’s go.

Machine Learning with COVID Datasets

The COVID-19 Open Research Dataset (CORD-19) consists of over 128,000 academic articles. This includes the full text of over 59,000 articles on topics including COVID-19, SARS-CoV-2, and other coronaviruses. With the onset of COVID-19, the number of scientific publications relating to the virus has increased rapidly in recent months and continues to grow. The medical community has trouble keeping up with the sheer number of publications, as only so many can be properly digested to extract any meaningful insights. Fortunately, Machine Learning (ML) algorithms are designed precisely for problems such as this.

Due to the text-based nature of the dataset, the use of Natural Language Processing (NLP) is an appropriate technique to use to sift through the vast number of publications. Python supports a number of NLP libraries that can accomplish the task. Perhaps the most widely used is the Natural Language Toolkit (NLTK), which provides a powerful suite of text processing libraries. These libraries have the ability to parse sentences given a predefined logic, reduce words to their root (stemming), and determine the part of speech of a word (tagging). I will use the NLTK package to aid in the analysis of the competition dataset.

Extracting Insights from COVID Research

There are several different tasks listed on the Kaggle competition page that are geared towards efficient processing and insight extraction. In this article, I will focus on the most popular task, which aims to answer the following questions about the coronavirus:

- What is known about transmission, incubation, and environmental stability?

- What do we know about natural history, transmission, and diagnostics for the virus?

- What have we learned about infection prevention and control?

The Kaggle page goes into further detail on the specific information that should be extracted from the corpus of publications. This includes:

- Range of incubation periods for the disease in humans (and how this varies across age and health status), as well as length of time that individuals are contagious even after recovery.

- Prevalence of asymptomatic shedding and transmission (particularly in children).

- Seasonality of transmission.

- Physical science of the coronavirus (e.g., charge distribution, adhesion to hydrophilic/phobic surfaces, and environmental survival to inform decontamination efforts for affected areas and provide information about viral shedding).

- Persistence and stability on a multitude of substrates and sources (e.g., nasal discharge, sputum, urine, fecal matter, and blood).

- Persistence of virus on surfaces of different materials (e.g., copper, stainless steel, and plastic).

The goal here is to build a tool in Python that allows us to quickly and efficiently search the publications for information pertaining to these questions.

How to Use NLTK to Solve COVID

For each question we hope to answer, my approach is to reduce the inquiry to a few keywords that we then use to search the abstracts in the dataset. In this way, we can find the most relevant abstracts pertaining to each question. Before we can get to the inquiries though, we first need to examine the metadata.csv file Kaggle provides. It contains information for all publications in the data set, including the abstract for each paper.

The following code imports the metadata.csv file and then extracts all the abstracts that contain the keywords covid, -cov-2, -cov2, and ncov:

df = pd.read_csv('./input/metadata.csv',usecols = ['title','journal','abstract','authors','doi','publish_time','sha']).fillna('no data provided').drop_duplicates(subset = 'title', keep = 'first')

df = df[df['publish_time'].str.contains('2020')]

df['abstract'] = df['abstract'].str.lower() + df['title'].str.lower()

df = pd.concat([df[df['abstract'].str.contains('covid')],

df[df['abstract'].str.contains('-cov-2')],

df[df['abstract'].str.contains('cov2')],

df[df['abstract'].str.contains('ncov')]]).drop_duplicates(subset='title', keep="first")

Now we can build our inquiry tool. The tool is composed of several steps:

- First we use NLTK’s PorterStemmer to obtain the root of each keyword. These roots are then used to search through the abstracts. The abstracts containing the root keywords are stored in rel_df.

- Next, we iterate over this dataframe and rank each abstract based on how many times the keywords are mentioned.

- Then we loop over each sentence in the abstract and store the ones containing the keywords. We also store the publication date, the authors’ names, and links to the paper.

- Finally, we store everything in a dataframe and display it:

def inquiry_tool(question,list_of_search_words):

singles = [PorterStemmer().stem(w) for w in list_of_search_words]

rel_df = df[functools.reduce(lambda a,b : a&b, (df['abstract'].str.contains(s) for s in singles))]

rel_df['score'] = ''

for index,row in rel_df.iterrows():

result = row['abstract'].split()

score = 0

for word in singles:

score += result.count(word)

rel_df.loc[index,'score'] = score * (score/len(result))

rel_df = rel_df.sort_values(by = ['score'], ascending = False).reset_index(drop=True)

df_table = pd.DataFrame(columns = ['pub_date','authors','title','excerpt'])

for index,row in rel_df.iterrows():

pub_sentence = ''

sentences = row['abstract'].split('. ')

for sentence in sentences:

missing = 0

for word in singles:

if word not in sentence:

missing = 1

if missing == 0 and len(sentence) < 1000 and sentence != '':

if sentence[len(sentence)-1] != '.':

sentence += '.'

pub_sentence += '<br><br>'+sentence.capitalize()

if pub_sentence != '':

df_table.loc[index] = [row['publish_time'],

row['authors'].split(', ')[0] + ' et al.',

'<p align="left"><a href="{}">{}</a></p>'.format('https://doi.org/'+row['doi'],row['title']),

'<p fontsize=tiny" align="left">'+pub_sentence+'</p>']

display(HTML('<h3>'+question+'</h3>'))

if df_table.shape[0] < 1:

print('No article fitting the criteria could be located in the literature.')

else:

display(HTML(df_table.head(3).to_html(escape=False,index=False)))

Now that we have built the inquiry tool function, we can make an actual inquiry. I created a dictionary where the keys are the aforementioned questions that we seek to answer, and the values are the keywords corresponding to each question:

inquiry = {

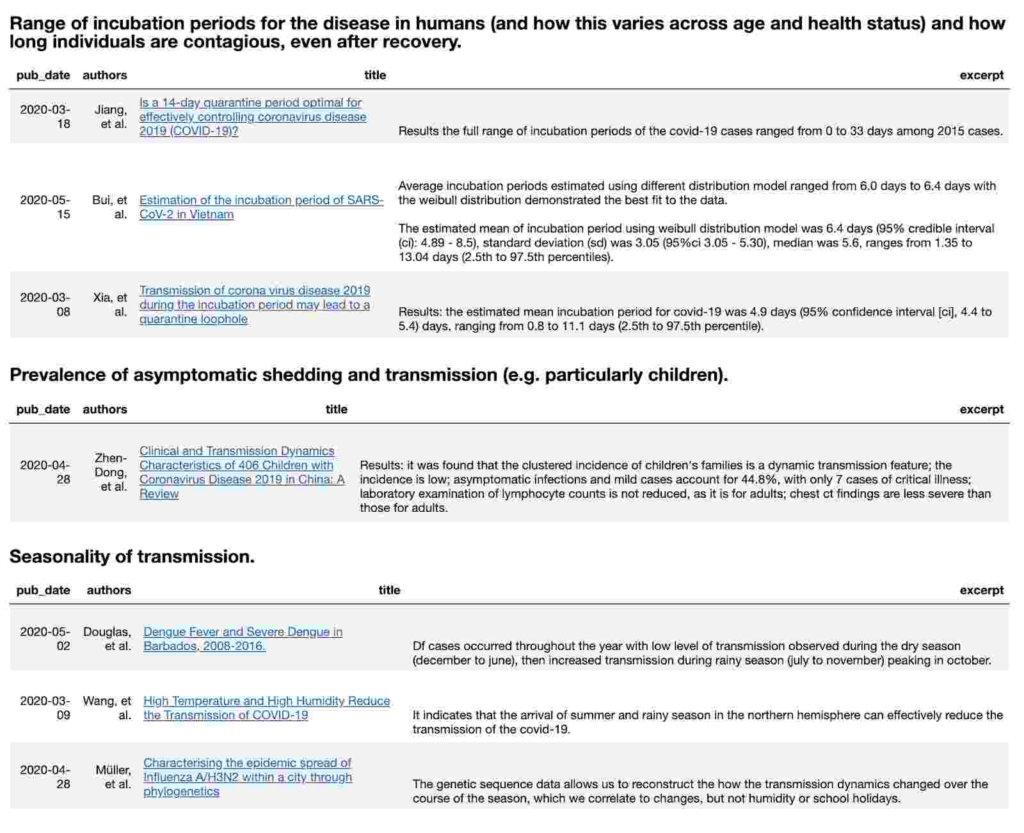

'Range of incubation periods for the disease in humans (and how this varies across age and health status) and how long individuals are contagious, even after recovery.' : ['incubation','period','range'],

'Prevalence of asymptomatic shedding and transmission (e.g. particularly children).' : ['asymptomatic','children','infection', '%'],

'Seasonality of transmission.' : ['seasonal', 'transmission'],

'Physical science of the coronavirus (e.g., charge distribution, adhesion to hydrophilic/phobic surfaces, environmental survival to inform decontamination efforts for affected areas and provide information about viral shedding).' : ['contaminat', 'object'],

'Persistence and stability on a multitude of substrates and sources (e.g., nasal discharge, sputum, urine, fecal matter, blood).' : ['sputum', 'stool', 'blood', 'urine'],

'Persistence of virus on surfaces of different materials (e,g., copper, stainless steel, plastic).' : ['persistence', 'surfaces']

}

for key in inquiry:

inquiry_tool(key,inquiry[key])

This makes it easy to loop through each inquiry. The beginning of the output should look something like this:

Fighting COVID with Python & Machine Learning

COVID-19 continues to be a major problem in many regions of the world. But governments, as well as institutions both public and private are working hard to find solutions to the problem. That work has generated a mountain of data, which poses a unique problem: those best able to assess the data are too busy creating it.

Enter the data scientist, who can apply Python and ML tools to find insights in the data quicker and more efficiently than traditional methods.

In this article, I used Python to build an inquiry tool that searches the COVID-19 Open Research Dataset (CORD-19) and efficiently extracts relevant information pertaining to a set of input inquiries. As the number of publications surrounding COVID-19 continues to increase, it is essential for programmers and data scientists to take the lead in building tools to maximize insight extraction. Feel free to formulate new questions and keywords to further test the capabilities of the tool.

Join the fight against COVID:

- Sign up for a free ActiveState Platform account so you can download the Kaggle COVID Runtime and participate in the COVID-19 Open Research Dataset Challenge.

- All of the code used in this article can be found in my GitLab repository.