Malware, short for malicious software, refers to intrusive software designed by attackers to steal data, damage computers or compromise computer systems.

Traditional malware attack vectors include email attachments, fake URLs and even remote desktop abuse (i.e., taking advantage of computers that have Remote Desktop Protocol turned on), but a growing number of malware attacks now come from within the trusted software you deploy, and are increasingly likely to introduce ransomware, exfiltrate data, or both.

The fastest-growing malware vector is the software supply chain, because infecting a single popular application can yield hundreds or even thousands of targets once it is deployed across the software vendor’s customer base. Unfortunately, most vendors remain stuck in the past when it comes to addressing this new vector, and continue to focus on network perimeters and software vulnerabilities.

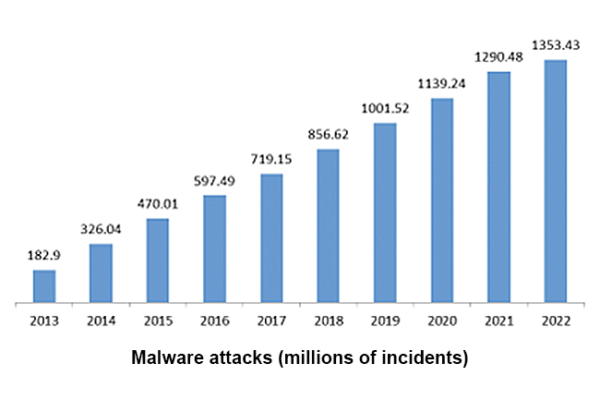

Today’s reality, however, is that there’s a 70% chance a cyber incident will be caused by an organization’s suppliers. The growing gap between older cybersecurity efforts and newer vectors of cyberattack is affording hackers a lot of room to operate, with predictable consequences:

Source: ResearchGate

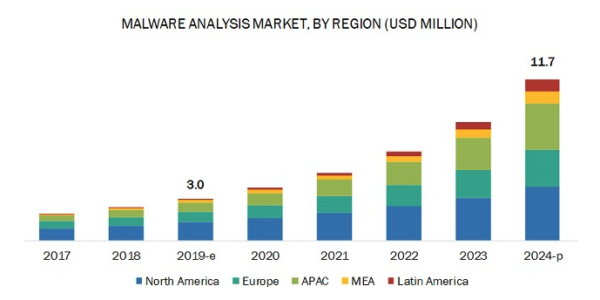

More importantly, the cost of dealing with the fallout from malware is on track to almost quadruple over a five-year span:

Source: MarketsandMarkets Research

Cybersecurity Best Practices in the Software Supply Chain

Software vendors that employ open source software (which is to say, all of them) are well aware of the risk that malware poses, and have long deployed a number of tactics and tools to help reduce that risk. Unfortunately, most of these solutions have serious drawbacks:

- Automated Code Scanning – imported, third-party code should always be scanned using common threat detection tools such as those for Software Composition Analysis (SCA) and/or Static Application Security Testing (SAST).

- Unfortunately, while scanning works well on non-obfuscated code, hackers typically use compression, encryption and other techniques to hide their malware from these kinds of tools. Scanners that attempt to decompile binaries, essentially reverse-engineering them back into source code, have had questionable success.

- Dynamic Analysis – Dynamic Application Security Testing (DAST) tools can be successful at catching obfuscated malware when the application is run.

- However, care must be taken to not only exercise the code thoroughly, but to do so in an isolated, ephemeral network segment so as to avoid infecting the organization.

- Manual Code Review – manually inspecting code is often the only way to verify whether the warnings issued by automated scanning tools are valid.

- Unfortunately, manual review runs into limitations with time and resources, as well as the ability to examine obfuscated code.

- Repos & Firewalling – a typical enterprise strategy is to deploy an artifact repository from which scanned and approved open source packages are made available to developers. Coupled with firewall blocking of open source repositories (as well as other sites that may pose a risk), this method can be effective in limiting risk.

- Unfortunately, the approval process to populate the repository with a newly required package is often far too slow to meet sprint deadlines, forcing developers to “get creative” about working around the firewall.

- Signed Code – rather than obtaining open source from public repositories and applying the practices listed above, some organizations use only open source that has been built and signed by a trusted partner.

- Unfortunately, as SolarWinds infamously proved, the build process of your trusted partner can be compromised prior to the signing stage.
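To make the repository approach above more concrete, here is a minimal sketch of how an approved-package check might work: the digest of each artifact is recorded at approval time, and anything that does not match is rejected. The `APPROVED` table, the package name and the `is_approved` helper are hypothetical names invented for illustration, not part of any particular repository product. Note that a check like this only proves the artifact is the one that was approved; it cannot tell you whether malware was already present when approval was granted.

```python
import hashlib
import hmac

# Hypothetical allowlist: (name, version) -> SHA-256 digest recorded at approval time.
APPROVED = {
    ("examplepkg", "1.2.0"): hashlib.sha256(b"approved artifact bytes").hexdigest(),
}


def is_approved(name: str, version: str, artifact: bytes) -> bool:
    """Return True only if the artifact matches the digest recorded at approval."""
    expected = APPROVED.get((name, version))
    if expected is None:
        return False  # this package/version was never approved
    actual = hashlib.sha256(artifact).hexdigest()
    # Constant-time comparison of the two hex digests.
    return hmac.compare_digest(actual, expected)
```

A developer workstation or CI job could call `is_approved(...)` on every downloaded dependency and fail the build on a `False` result.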

The real drawback here is that too many of these best practices were created to address traditional application security concerns, rather than being designed to specifically address malware in the software supply chain.

As a result, a new set of software supply chain best practices is emerging based on standards like Supply-chain Levels for Software Artifacts (SLSA). SLSA takes the position that the only way to eliminate malware from the software supply chain is to build it securely from vetted source code. That means:

- Importing only source code for all the open source dependencies your project requires, and scanning it to flag any concerns.

- Prebuilt dependencies may be imported only if each one is accompanied by a software attestation that proves it was sourced and built securely.

- Building the source code for every open source dependency (including all transitive dependencies) using a secure, hardened build service.

- Generating a software attestation to prove that each dependency was sourced and built securely, which can then be used by a downstream process to validate its security.
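The attestation steps above can be sketched roughly as follows. This is a simplified illustration only: the field names are made up for this example rather than taken from the SLSA provenance schema, and an HMAC with a shared key stands in for the asymmetric signatures a real build service would use. The idea it shows is that the build service records what it built, from which source, and on which builder, and a downstream process refuses any artifact whose attestation does not check out.

```python
import hashlib
import hmac
import json


def make_attestation(name: str, artifact: bytes, source_uri: str,
                     builder_id: str, key: bytes) -> dict:
    """Produce a signed statement describing how an artifact was built."""
    statement = {
        "subject": {"name": name, "sha256": hashlib.sha256(artifact).hexdigest()},
        "source": source_uri,   # hypothetical field: where the source came from
        "builder": builder_id,  # hypothetical field: which build service ran
    }
    payload = json.dumps(statement, sort_keys=True).encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"statement": statement, "signature": signature}


def verify_attestation(att: dict, artifact: bytes,
                       trusted_builders: set, key: bytes) -> bool:
    """Check the signature, the builder identity, and the artifact digest."""
    statement = att["statement"]
    payload = json.dumps(statement, sort_keys=True).encode()
    expected_sig = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, att["signature"]):
        return False  # statement was altered or signed with another key
    if statement["builder"] not in trusted_builders:
        return False  # built somewhere we do not trust
    digest = hashlib.sha256(artifact).hexdigest()
    return hmac.compare_digest(statement["subject"]["sha256"], digest)
```

In practice the verification step would run wherever a prebuilt dependency enters your pipeline, so that an artifact without a valid attestation never reaches a developer or a production build.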

While there are corner cases, such as dependencies for which no source code is available, the good news is that the method of importing and building vetted source code effectively eliminates the risk of including malware within your application.

The bad news is that the time and resource costs can be higher than most organizations can afford, since the average technology stack includes multiple open source languages and deployment platforms. That means you’ll need to:

- Create and maintain a hardened build environment for each of your deployment platforms.

- Acquire language build expertise for each language in your tech stack.

- Set up and maintain the build script for each dependency to be built in a reproducible manner.
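As a small illustration of what "reproducible" means in the last item, the sketch below packages the same inputs twice and compares digests. It is an assumption-laden toy: a real build script also has to pin compilers, environment variables and dependency versions, whereas this example only normalizes file order and archive metadata. The `build_archive` and `is_reproducible` helpers are hypothetical names.

```python
import hashlib
import io
import tarfile


def build_archive(files: dict) -> bytes:
    """Pack files into an uncompressed tar with normalized order and metadata."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in sorted(files):  # fixed ordering, independent of input order
            data = files[name]
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            info.mtime = 0          # no build-time timestamps
            info.uid = info.gid = 0
            info.uname = info.gname = ""
            info.mode = 0o644
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def is_reproducible(files: dict) -> bool:
    """Build twice, feeding the inputs in a different order, and compare digests."""
    first = hashlib.sha256(build_archive(files)).hexdigest()
    shuffled = dict(reversed(list(files.items())))
    second = hashlib.sha256(build_archive(shuffled)).hexdigest()
    return first == second
```

When two independent builds of the same source produce the same digest, a third party can rebuild the dependency and confirm that nothing was injected along the way.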

This is why the ActiveState Platform exists: to automatically build your open source dependencies securely from vetted source code so you don’t have to create and maintain build environments, language expertise or build scripts.

Next Steps

Read about how ActiveState implements the SLSA specification to ensure that dependencies are built securely in a reproducible manner.