And yet, in an effort to be first to market, many of the ML solutions in these fields have relegated security to an afterthought. Take ChatGPT for example, which only recently reinstated users’ query history after fixing an issue in an open source library that allowed any user to potentially view the queries of others. A fairly worrying prospect if you were sharing proprietary information with the chatbot.

Despite this software supply chain security issue, ChatGPT has had one of the fastest adoption rates of any commercial service in history, reaching 100 million users within just two months of its launch.

Obviously, for most users, ChatGPT’s open source security issue didn’t even register. And despite generating misinformation, malinformation and even outright lies, the reward of using ChatGPT was seen as far greater than the risk.

But would you fly in a space shuttle designed by NASA yet built by a random mechanic in their home garage? For some, the opportunity to go into space might outweigh the risks, despite the fact that, short of disassembling it, there’s really no way to verify that everything inside was built to spec. What if the mechanic didn’t use aviation-grade welding equipment? Worse, what if they purposely missed tightening a bolt in order to sabotage your flight?

Passengers would need to trust that the manufacturing process was as rigorous as the design process. The same principle applies to the open source software fueling the ML revolution.

The AI Software Supply Chain Risk

In some respects, open source software is considered inherently safe because its source code is human readable rather than compiled, so the entire world can scrutinize it. However, issues arise when authors who lack a rigorous process compile their code into machine language, also known as binaries. Binaries are extremely hard to take apart once assembled, making them a great place to hide malware, whether inadvertently or deliberately, as proven by SolarWinds, Kaseya, and 3CX.

In the context of the Python ecosystem, which underlies the vast majority of ML/AI/data science implementations, pre-compiled binaries are combined with human readable Python code in a bundle called a wheel. The compiled components are usually derived from C++ source code and employed to speed up the processing of the mathematical business logic that would otherwise be too slow if executed by the Python interpreter. Wheels for Python are generally assembled by the community and uploaded to public repositories like the Python Package Index (PyPI). Unfortunately, these publicly available wheels have become an increasingly common way to obfuscate and distribute malware.
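To make the wheel structure concrete, here is a minimal sketch of how one might list the compiled components inside a wheel. It relies only on the fact that a wheel is a ZIP archive. The package name `fastmath`, the helper names, and the list of binary suffixes are assumptions made up for illustration, and the demo builds a tiny stand-in archive in memory rather than downloading a real wheel.

```python
import io
import zipfile

# Suffixes that usually indicate compiled, non human readable components.
# This list is an illustrative assumption, not an exhaustive one.
BINARY_SUFFIXES = (".so", ".pyd", ".dll", ".dylib")


def list_compiled_members(wheel_file):
    """Return the names of files in a wheel that look like compiled binaries."""
    with zipfile.ZipFile(wheel_file) as wheel:
        return sorted(
            name
            for name in wheel.namelist()
            if name.lower().endswith(BINARY_SUFFIXES)
        )


def build_demo_wheel():
    """Build a tiny in-memory archive laid out like a wheel (hypothetical package)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as wheel:
        # Human readable Python source.
        wheel.writestr("fastmath/__init__.py", "from ._core import add\n")
        # Stand-in for a pre-compiled extension module.
        wheel.writestr("fastmath/_core.cpython-311-x86_64-linux-gnu.so", b"\x7fELF")
        wheel.writestr("fastmath-1.0.dist-info/METADATA", "Name: fastmath\n")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for name in list_compiled_members(build_demo_wheel()):
        print(name)
```

Pointing `list_compiled_members` at a real `.whl` file path works the same way. The Python files in the listing can be read directly, while the compiled members are exactly the parts that cannot be reviewed without knowing how they were built.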

Additionally, the software industry as a whole is generally very poor at managing software supply chain risk in traditional software development, let alone the free-for-all that now defines the gold rush to prematurely launch AI apps. The consequences can be disastrous:

- The SolarWinds hack in 2020 exposed to attack:
  - 80% of the Fortune 500
  - Top 10 US telecoms
  - Top 5 US accounting firms
  - CISA, FBI, NSA and all 5 branches of the US military
- The Kaseya hack in 2021 spread REvil ransomware to:
  - 50 Managed Service Providers (MSPs), and from there to
  - 800–1,500 businesses worldwide
- The 3CX hack in March 2023 affected the softphone VOIP system at:
  - 600,000 companies worldwide with
  - 12 million daily users

And the list continues to grow. Obviously, as an industry, we have learned nothing.

The implications for ML are dire, considering the real-world decisions being made by ML models such as evaluating creditworthiness, detecting cancer or guiding a missile. As ML moves from playground development environments into production, the time has come to address these risks.

Speed and Security: AI Software Supply Chain Security At Scale

The recent call to pause AI innovation for six months was met with a resounding “No.” Similarly, any call for a pause to fix our software supply chain is unlikely to gain traction. That leaves security-sensitive industries like defense, healthcare, and finance/banking at a crossroads: they either have to accept an unreasonable amount of risk, or else stifle innovation by not allowing the use of the latest and greatest ML tools. Given that their competitors (like the vast majority of all organizations that create their own software) depend on open source to build their ML applications, speed and security need to become compatible instead of competitive.

At Cloudera and ActiveState, we strongly believe that security and innovation can coexist. This joint mission is why we have partnered to bring trusted, open-source ML Runtimes to Cloudera Machine Learning (CML). Unlike other ML platforms, which rely solely on insecure public sources like PyPI or conda-forge for extensibility, Cloudera customers can now enjoy supply chain security across the entire open source Python ecosystem. CML customers can be confident that their AI projects are secure from concept to deployment.

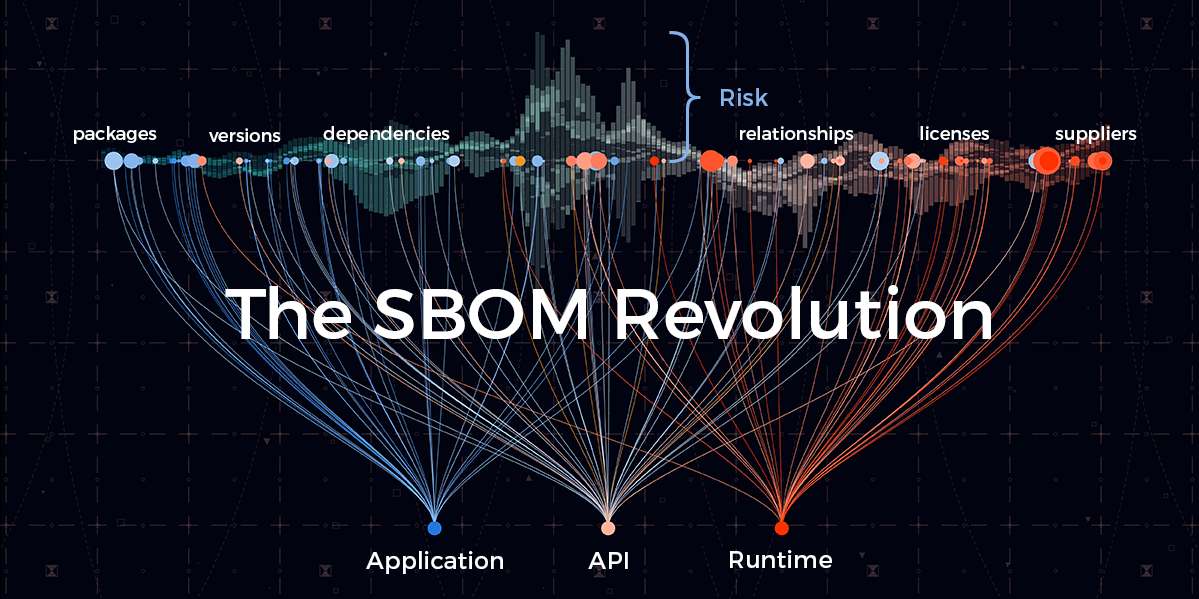

The ActiveState Platform serves as a secure factory, enabling the production of Cloudera ML Runtimes. By automatically building Python from thoroughly vetted PyPI source code, the platform adheres to the highest level (Level 4) of the Supply-chain Levels for Software Artifacts (SLSA) framework. With this approach, our customers can rely on the ActiveState Platform to manufacture the precise Python components they need, eliminating the need to blindly trust community-built wheels. The platform also provides tools to monitor, maintain and verify the integrity of open source components. ActiveState even offers supporting SBOMs and software attestations that enable compliance with US government regulations.
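As a generic illustration of what verifying the integrity of a component can mean in practice, the sketch below compares an artifact's SHA-256 digest against a pinned value. This is not a description of how the ActiveState Platform works internally; the function names and the sample bytes are assumptions chosen for the example.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """Return True only if the artifact's digest matches the pinned digest."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(sha256_digest(data), expected_sha256.lower())


if __name__ == "__main__":
    # Stand-in for the bytes of a built component (hypothetical content).
    artifact = b"example wheel contents"
    pinned = sha256_digest(artifact)  # recorded when the artifact was built

    print(verify_artifact(artifact, pinned))                # unmodified artifact
    print(verify_artifact(artifact + b"tampered", pinned))  # modified artifact
```

The same idea underlies pip's hash-checking mode (`--require-hashes`), where each requirement is pinned to a known digest. A digest only proves that an artifact has not changed since it was recorded, which is why it matters who built the artifact and how.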

With Cloudera’s new Powered by Jupyter (PBJ) ML Runtimes, integrating the ActiveState Platform-built Runtimes with CML has never been easier. You can use the ActiveState Platform to build a custom ML Runtime that you can register directly in CML. The days of data scientists needing to pull dangerous prebuilt wheels from PyPI are over, making way for streamlined management, enhanced observability, and a secure software supply chain.

Next Steps

Create a free ActiveState Platform account so you can use it to automatically build an ML Runtime for your project.
