As in most programming languages, there are threads in Python too. By default, though, code executes sequentially: each function call waits for the previous one to complete before it can run. That is simple to reason about, but it can be a bottleneck in many real-world scenarios.

For example, let’s consider a web app that displays images of dogs from multiple sources. The user can view and then select as many images as they want to download. In code, it would look something like this:

```python
for image in images:
    download(image)
```

This seems pretty straightforward, right? The user chooses a list of images, and they download in sequential order. If each image takes about 2 seconds to download and there are 5 images, the total wait time is approximately 10 seconds. While an image is downloading, that’s all your program does: wait for it to finish.
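To make that cost concrete, here is a minimal sketch of the sequential loop. The `download` function is a hypothetical stand-in that sleeps for a fixed delay (0.2 s here rather than 2 s, to keep it quick) instead of fetching anything, so the total time grows linearly with the number of images.

```python
import time

DELAY = 0.2  # hypothetical per-image download time, in seconds


def download(image):
    """Hypothetical stand-in for a real download: just wait, as network I/O would."""
    time.sleep(DELAY)
    return f"{image} saved"


def download_all_sequentially(images):
    """Download each image in turn and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = [download(image) for image in images]
    return results, time.perf_counter() - start


images = [f"dog{i}.jpg" for i in range(5)]
results, elapsed = download_all_sequentially(images)
print(f"{len(results)} images in {elapsed:.2f}s")  # roughly 5 x 0.2 = 1.0 s
```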

But it’s unlikely that this is the only process the user’s computer is running. They might be listening to music, editing a picture, or playing a game. All of this seems to happen simultaneously, but in fact the computer is switching rapidly between tasks. The operating system splits the work of every process into small chunks and schedules when each chunk runs on the CPU. There are different scheduling strategies (but let’s leave those for another article), and they switch between chunks so quickly that the computer appears to perform multiple tasks simultaneously. In reality, the tasks run concurrently (interleaved over time), not necessarily in parallel.

Let’s get back to our dog images example. Now that we know the user’s computer can juggle multiple tasks, how can we speed up the downloads? We can tell the program that the image downloads can happen concurrently, and that one image does not have to wait for another to finish. This allows each image to be downloaded in a separate “thread.”

A thread is simply a separate flow of execution. Threading is the process of splitting up the main program into multiple threads that a processor can execute concurrently.

Multithreading vs Multiprocessing

By default, a Python program runs in a single thread, so it does not take advantage of multiple CPU cores (or the GPU), but it can be made to do so. The first step is to understand the difference between multithreading and multiprocessing. A simple rule of thumb is to associate I/O-bound tasks with multithreading (e.g., disk reads/writes, API calls, and networking, which are limited by the I/O subsystem) and CPU-bound tasks with multiprocessing (e.g., image processing or data analytics, which are limited by the CPU’s speed).

While a task waits on I/O, the CPU would otherwise sit idle. Threading makes use of this idle time to run other tasks. Keep in mind that threads created within a single process share the same memory and locks.
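A minimal sketch of what sharing memory means in practice: several threads write into the same dictionary, and the main thread sees every write once the threads finish. `fetch_size` is a hypothetical worker; because each thread writes a different key, no lock is needed in this particular case.

```python
import threading

results = {}  # a single dict, visible to every thread in this process


def fetch_size(name):
    """Hypothetical worker: record a fake 'size' for an image in the shared dict."""
    results[name] = len(name)


threads = [threading.Thread(target=fetch_size, args=(f"dog{i}.jpg",)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every worker before reading the shared dict

print(results)
```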

The CPython interpreter (the standard Python implementation) is not fully thread-safe, so it was designed with something called the GIL, or Global Interpreter Lock, which ensures that only one thread executes Python bytecode at a time within a process. On the surface, this means Python threads cannot run Python code in parallel. However, since Python was created, CPUs (and GPUs) with multiple cores have become standard, and today’s parallel programs use those cores to run work simultaneously:

- With multithreading, the operating system can schedule a process’s threads on different cores, but in CPython the GIL still lets only one of those threads run Python code at a time.

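- With multiprocessing, each process gets its own memory and its own interpreter, so processes can run in parallel on separate cores, but they cannot share memory directly; they have to communicate through explicit mechanisms such as pipes or queues.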

Now back to the GIL. Python programs can use both multiprocessing and multithreading on modern multi-core processors. However, the GIL still ensures that only one thread per process runs Python code at a time; multiprocessing sidesteps this because each process has its own interpreter and its own GIL. So in summary, when programming in Python:

- Use multithreading when you know the program will be waiting around for some external event (i.e., for I/O-bound tasks).

- Use multiprocessing when your work is CPU-bound and can be split into independent pieces that run on multiple cores, each in its own process with its own memory.

Installing Python

If you already have Python installed, you can skip this step. However, for those who haven’t, read on.

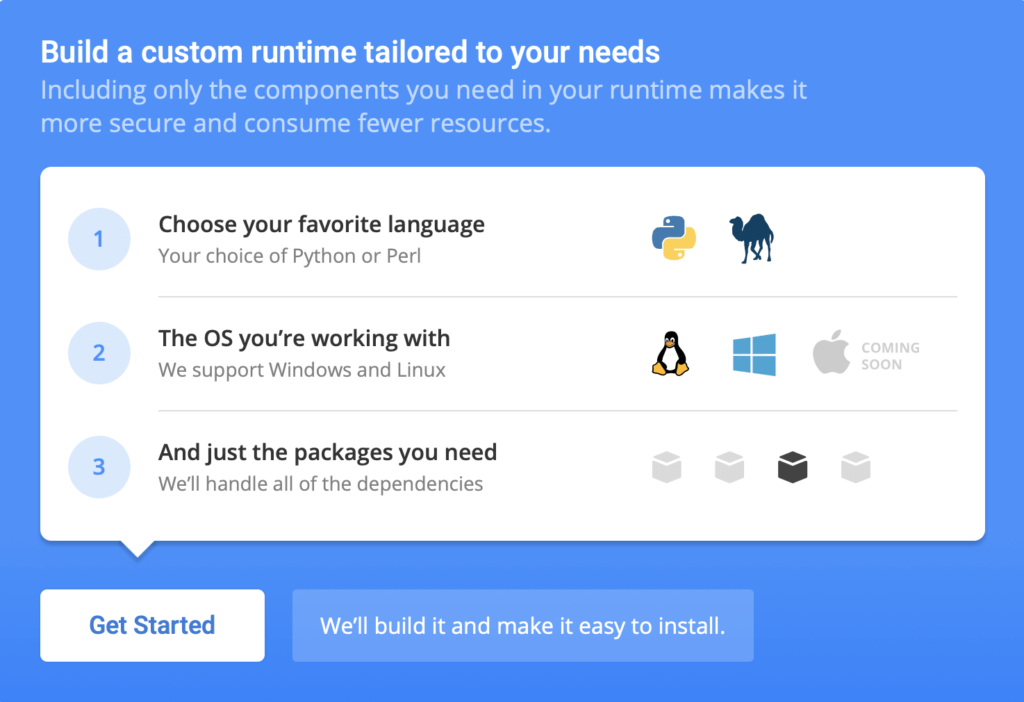

For this tutorial I’ll be using ActiveState’s Python, which is built from vetted source code and regularly maintained for security clearance. You have two choices:

- Download and install the pre-built Python Threading runtime environment for Win10 or CentOS 7; or

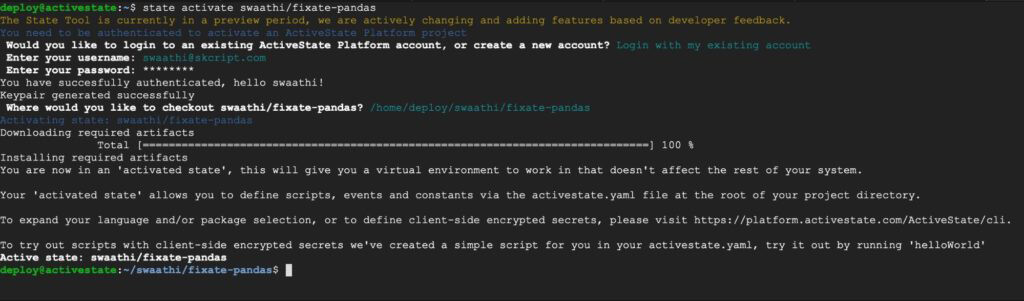

- If you’re on a different OS, you can automatically build your own custom Python runtime with just the packages you’ll need for this project by creating a free ActiveState Platform account, after which you will see the following image:

- Click the Get Started button and choose Python and the OS you’re comfortable working in. Choose the packages you’ll need for this tutorial, including rq.

- Once the runtime builds, you can download the State Tool and use it to install your runtime:

And that’s it. You have now installed Python in a virtual environment.

Now we can get to the fun part – coding!

Using Threads in Python

Let’s go back to our example use case of downloading 5 images off the internet. The original code was:

```python
for image in images:
    download(image)
```

But now that we know we can use threads, let’s use them with the “download” function:

```python
import threading

for image in images:
    thread = threading.Thread(target=download, args=(image,))
    thread.start()
```

Output:

```
Thread 3: exit
Thread 1: exit
Thread 2: exit
Thread 4: exit
```

When you execute this code, you’ll find the program finishes much sooner! This is because each call to the download function no longer runs in the main thread. Instead, a new thread is created for each image, so the downloads run concurrently.

The following line creates a thread, passing it the function to run (`target`) and that function’s arguments (`args`, which must be a tuple, hence the trailing comma in `(image,)`):

```python
thread = threading.Thread(target=download, args=(image,))
```

To start thread execution, all you have to do is:

```python
thread.start()
```

You’ll notice a peculiar thing when you execute this code: the script reaches its last line almost immediately, before the images are downloaded. The pictures get downloaded and written to disk well after the main thread has finished running the loop. This is because each thread keeps running on its own; the main thread only starts the threads and does not wait for them to finish. (The interpreter itself won’t exit until these non-daemon threads complete, but any code after the loop runs before the images are on disk.)

In many scenarios, however, you’ll want the main thread to continue only after all child threads have completed. In this case, use the `Thread.join()` method, as follows:

```python
import threading

threads = []
for image in images:
    thread = threading.Thread(target=download, args=(image,))
    threads.append(thread)  # keep a reference so we can wait for it later
    thread.start()

for thread in threads:
    thread.join()  # block until this thread has finished
```

Output:

```
Thread 1: exit
Thread 2: exit
Thread 3: exit
Thread 4: exit
```

When you execute this code, you’ll notice that the program takes a little longer to return, but it finishes only after all images have downloaded. This is because `thread.join()` blocks the main thread until that thread finishes, and calling it on every thread in turn waits for all of them.

This is where shared memory helps: the main thread holds a reference to every thread it created (in the `threads` list), so it can wait for each one to finish.
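A sketch that times the threaded loop with `join()`, assuming a hypothetical `download` that sleeps for 0.2 s to mimic network I/O. Because `time.sleep` (like blocking I/O) releases the GIL, the five waits overlap, so the total should come out close to a single delay rather than five.

```python
import threading
import time

DELAY = 0.2  # hypothetical per-image download time, in seconds


def download(image):
    """Hypothetical stand-in for a real download; sleeping releases the GIL like blocking I/O."""
    time.sleep(DELAY)


def download_all_threaded(images):
    """Start one thread per image, wait for all of them, and return elapsed seconds."""
    start = time.perf_counter()
    threads = []
    for image in images:
        thread = threading.Thread(target=download, args=(image,))
        threads.append(thread)
        thread.start()
    for thread in threads:
        thread.join()  # blocks until this particular thread has finished
    return time.perf_counter() - start


images = [f"dog{i}.jpg" for i in range(5)]
elapsed = download_all_threaded(images)
print(f"{len(images)} images in {elapsed:.2f}s")  # close to 0.2 s, not 1.0 s
```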

There’s still one more improvement we can make to the final code. Creating each thread and then looping over them all again just to finish execution seems overly verbose and a little unusual by Python standards (I mean, it literally takes only one line to start an HTTP server in Python).

Fortunately, since Python 3.2 the standard library’s `concurrent.futures` module has provided `ThreadPoolExecutor`:

```python
import concurrent.futures

with concurrent.futures.ThreadPoolExecutor() as executor:
    executor.map(download, images)
```

That’s it! `ThreadPoolExecutor` runs `download` on every image using a pool of worker threads (which it reuses across tasks rather than creating one thread per image), and leaving the `with` block waits for all of them to finish, all in a single line of code!
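One caveat about that one-liner: `executor.map()` returns a lazy iterator of results in input order, and an exception raised inside `download` is only re-raised when you retrieve that result. If you never iterate, both results and errors are silently dropped. A sketch that collects them, assuming a hypothetical `download` that returns a file path:

```python
import concurrent.futures
import time


def download(image):
    """Hypothetical download that returns where the file was saved."""
    time.sleep(0.1)
    return f"/tmp/{image}"


images = [f"dog{i}.jpg" for i in range(5)]

# max_workers caps how many threads run at once; the pool reuses them across tasks.
with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # Iterating over map() yields results in input order and re-raises
    # any exception a task raised, which would otherwise go unnoticed.
    paths = list(executor.map(download, images))

print(paths)
```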

Common Issues with Threads in Python

While Python threads seem to solve our use case perfectly, when you start implementing them in a real-world scenario you’ll likely run into a myriad of issues. From race conditions to deadlocks, threads can prove quite problematic if you don’t consider everything that can go wrong when threads access shared resources.

Race Conditions

When two or more threads access the same shared resource (such as a database) at the same time, unexpected things can happen. If both threads try to update the same object at the same time, the final value (i.e., which thread wins) depends on the exact timing and is unpredictable, and one of the updates may be lost entirely. As a result, you must take extra precautions, such as guarding the resource with a lock, to ensure that only one thread modifies it at a time.
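A minimal sketch of the usual fix, using `threading.Lock`. An in-memory counter stands in for the shared database record here (an assumption for illustration). `counter += 1` is a read, an add, and a write, so without the lock two threads could read the same old value and one update would be lost.

```python
import threading

counter = 0                      # stands in for a shared database value
counter_lock = threading.Lock()  # guards every update to counter


def record_downloads(n):
    """Increment the shared counter n times, one locked update at a time."""
    global counter
    for _ in range(n):
        with counter_lock:
            counter += 1  # read, add, write: safe only because we hold the lock


threads = [threading.Thread(target=record_downloads, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```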

Deadlocks

In other cases, threads can block each other forever. A deadlock happens when each thread holds a resource that another thread needs and waits for that thread to release it. For example, thread A locks resource 1 and then waits for resource 2, while thread B has already locked resource 2 and is waiting for resource 1. Neither thread can proceed, so both wait forever.
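One common way to avoid this (a standard technique, not something specific to this article) is to make every thread acquire locks in the same global order. In this sketch, two threads ask for the same pair of hypothetical locks in opposite orders, the classic recipe for deadlock, but sorting the locks by `id()` before acquiring them means both threads take them in the same order, so both finish.

```python
import threading

# Two hypothetical resources, each guarded by its own lock.
lock_a = threading.Lock()
lock_b = threading.Lock()
finished = []  # names of threads that acquired both locks


def use_both(name, first, second):
    """Acquire two locks in a fixed global order, then do some work."""
    # Sorting by id() gives every thread the same acquisition order,
    # no matter which order the caller passed the locks in.
    low, high = sorted((first, second), key=id)
    with low:
        with high:
            finished.append(name)


# These threads request the locks in opposite orders: without the
# sorting step above, this is the classic setup for a deadlock.
t1 = threading.Thread(target=use_both, args=("t1", lock_a, lock_b))
t2 = threading.Thread(target=use_both, args=("t2", lock_b, lock_a))
for t in (t1, t2):
    t.start()
for t in (t1, t2):
    t.join(timeout=5)  # a timeout so a bug here can't hang the program forever

print(sorted(finished))  # ['t1', 't2']
```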

Other Issues

There are many other thread-related issues, including memory management, retrying work when a thread fails, accurate state reporting, and more. These issues recur in many real-world scenarios, and solving them over and over again quickly wastes developer time. Luckily, there’s a better alternative. Let’s take a look!

Queues – A Thread Alternative

When I work with APIs, or any other process that requires a lengthy processing time, I don’t look at threads. Instead, I look at something called queues.

Queues are First In, First Out (FIFO) data structures. They’re an easy way to perform tasks both synchronously and asynchronously while avoiding many of the race conditions, deadlocks, and other issues discussed earlier, because each task is handed off through the queue instead of being shared directly between threads.

First off, queues are “cheaper” in terms of processing costs. Each time you create a thread, there’s management overhead: memory must be allocated for it and released after it finishes. In many cases, orphan threads occur when threads are not shut down and cleaned up properly; they are left hanging and take up precious CPU time. With a queue, each pending task is just a small entry in storage, and a fixed set of workers processes the tasks, which is far more economical.

Queues also introduce an easy way to monitor and re-execute processes. I suggest reading “Threads vs Queues” by Omar Elgabry for a deeper look.
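Python’s standard library also ships an in-process FIFO queue, `queue.Queue`. It has none of RQ’s persistence, monitoring, or retries, but this sketch shows the core pattern: a producer puts jobs on the queue, and a fixed set of worker threads takes them off in FIFO order, so no two workers ever handle the same job. The “downloaded” strings are placeholders for real work.

```python
import queue
import threading

jobs = queue.Queue()  # thread-safe FIFO queue from the standard library
done = []


def worker():
    """Take jobs off the queue until a None sentinel arrives."""
    while True:
        image = jobs.get()
        if image is None:
            jobs.task_done()
            break
        done.append(f"downloaded {image}")  # hypothetical stand-in for download(image)
        jobs.task_done()


workers = [threading.Thread(target=worker) for _ in range(2)]
for w in workers:
    w.start()

for i in range(5):
    jobs.put(f"dog{i}.jpg")  # producer: enqueue work, first in, first out
for _ in workers:
    jobs.put(None)  # one sentinel per worker so each one exits cleanly

jobs.join()  # wait until every queued item has been marked done
for w in workers:
    w.join()

print(len(done))  # 5
```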

To see how you can use queues in our application, let’s use RQ (Redis Queue), a popular Python library for queueing jobs and processing them in the background with workers. Redis is an open source, in-memory data store that allows for very fast reads and writes. Think of it as an incredibly fast database that keeps its data in memory (persisting it to disk is optional).

With RQ, each execution (or “job”) is serialized, including a reference to the function to run and all of its arguments, and stored in Redis under a job ID. An RQ worker then picks up each job from Redis, deserializes the arguments, and calls the function. If a job fails, RQ records it in Redis as failed, along with everything needed to run it again. As a result, developers can monitor job progress and restart failed jobs.
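The flow described above can be modelled in a few lines of plain Python. This is a toy sketch, not RQ’s implementation: a dict and a deque stand in for Redis, jobs are serialized with JSON rather than the pickling RQ uses by default, and `enqueue`, `work`, and `requeue_failed` are hypothetical helpers, not RQ’s API.

```python
import json
from collections import deque

# Toy, in-memory stand-ins for what RQ keeps in Redis (hypothetical layout).
job_store = {}     # job_id -> serialized job description
pending = deque()  # FIFO of job ids waiting for a worker
failed = []        # ids of jobs that raised an exception
TASKS = {}         # function name -> callable, so jobs can refer to functions by name
attempts = {}      # image -> number of download attempts (for the demo)


def task(fn):
    """Register a function so the worker can look it up by name."""
    TASKS[fn.__name__] = fn
    return fn


@task
def download(image):
    """Hypothetical download that fails on the first attempt for dog1.jpg."""
    attempts[image] = attempts.get(image, 0) + 1
    if image == "dog1.jpg" and attempts[image] == 1:
        raise ConnectionError(f"timed out fetching {image}")
    return f"saved {image}"


def enqueue(job_id, func_name, *args):
    """Serialize the job (function name + arguments) and push its id onto the queue."""
    job_store[job_id] = json.dumps({"func": func_name, "args": list(args)})
    pending.append(job_id)


def work():
    """Drain the queue: deserialize each job, call its function, record failures."""
    while pending:
        job_id = pending.popleft()
        job = json.loads(job_store[job_id])
        try:
            TASKS[job["func"]](*job["args"])
        except Exception:
            # The serialized job is still in job_store, so it can be retried later.
            failed.append(job_id)


def requeue_failed():
    """Move every failed job back onto the queue."""
    while failed:
        pending.append(failed.pop(0))


for i in range(3):
    enqueue(f"job-{i}", "download", f"dog{i}.jpg")

work()
first_run_failures = list(failed)
print(first_run_failures)  # ['job-1']

requeue_failed()
work()
print(failed, attempts)  # [] {'dog0.jpg': 1, 'dog1.jpg': 2, 'dog2.jpg': 1}
```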

If you haven’t added RQ to your runtime, you can install it with pip:

```
pip install rq
```

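Now let’s modify our use case code to take advantage of queues instead of threads:

```python
from redis import Redis
from rq import Queue

from tasks import download  # hypothetical module: RQ workers must be able to import the job function

q = Queue(connection=Redis())
for image in images:
    q.enqueue(download, image)  # returns immediately with a Job object
```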

Before the enqueued jobs will run, you’ll need a Redis server running (by default, `Redis()` connects to `localhost` on port 6379) and an RQ worker. The RQ worker is a background process that listens for new jobs, reads them from Redis, and executes them.

To start an RQ worker:

```
$ rq worker
```

Now you can view the execution logs in the worker’s terminal as it picks up and completes each job.

Next Steps

Multithreading, multiprocessing, and queues can all be great ways to speed up performance. But before implementing any of them, it’s extremely important to understand your needs and the differences between the various background processing engines, so you can choose what works for you.

For most tasks, RQ gets the job done. It’s used extensively in many open source projects, and it avoids much of the mess that comes with managing threads yourself!
