Text will always be a major format for data, and it will never be well-organized. A famous joke, riffing on Phil Karlton’s quip, says the two hard problems in computing are naming things, cache invalidation, and off-by-one errors. Another could be “formatting things”. Most textual data has formatting irregularities that make it a pain to process, and much of the work in text processing goes into dealing with them. These are just the sad facts.

There are decent libraries for handling a great many common text issues. But one thing that comes up again and again is handling semi-formatted, sort-of-tabular text such as log files.

Log files are beguiling because they appear to come with a promise of regularity. Web server logs, for example, are emitted by the server in a strictly defined format. There are some good Python libraries for parsing them. Unfortunately, they are quite slow. When you are chewing through a few gigabytes of legacy logs on older hardware, especially during exploratory analysis that means repeating the process many times, speed matters.

I recently faced exactly this problem. I was parsing a bunch of fields out of legacy weblogs going back over a decade. Switching to regexes got rid of one big problem, but the process still took about an hour to run, and I needed a lot more speed.

I wanted to pull some quite specific information out of long log lines, and hit upon a regex that did the job nicely in Python:

```python
import re

# Raw string (r'...') so \d, \. and \[ reach the regex engine unchanged
lineParser = re.compile(r'([(\d\.)]+) - - \[(.*?)\] "(.*?)" (\d+) (-|\d+) "(.*?)" "(.*?)"')
```

Yes, I know a regex is not really a parser.

This pulled out the half-dozen pieces of information I cared about, and it ran about twice as fast as the otherwise quite nice pylogparser. That is because pylogparser is designed to solve much more complex and general problems in production environments.
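To make the captured fields concrete, here is a minimal sketch that runs the same pattern over a couple of lines and counts the ones that don’t match. The sample line (in Apache “combined” format), the `parse_lines` helper, and the choice to skip irregular lines rather than fail are illustrative assumptions, not the original script.

```python
import re

# Same pattern as above, as a raw string
LINE_PARSER = re.compile(
    r'([(\d\.)]+) - - \[(.*?)\] "(.*?)" (\d+) (-|\d+) "(.*?)" "(.*?)"'
)

# Hypothetical sample line in Apache "combined" log format
SAMPLE = ('127.0.0.1 - - [10/Oct/2000:13:55:36 -0700] '
          '"GET /apache_pb.gif HTTP/1.0" 200 2326 '
          '"http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"')


def parse_lines(lines):
    """Return (list of 7-field tuples, number of lines that did not match)."""
    records = []
    skipped = 0
    for line in lines:
        m = LINE_PARSER.match(line)
        if m is None:
            skipped += 1  # irregular line: count it and move on
            continue
        # (ip, timestamp, request, status, size, referrer, user agent)
        records.append(m.groups())
    return records, skipped


if __name__ == "__main__":
    records, skipped = parse_lines([SAMPLE, "not a log line"])
    print(records[0])
    print("skipped:", skipped)
```

The `(-|\d+)` group exists because Apache writes `-` instead of `0` for the response size when no bytes are sent. Note also that inside `[...]` the parentheses are literal characters, so the first group would accept `(` and `)` too; that is harmless for IPv4 addresses.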

Still, an hour was a long time when I had to re-run it a bunch of times, and of course C++ is faster than Python. Isn’t it? Or is it?

Writing C++ generally takes longer than writing Python. And although I spent over twenty years coding almost exclusively in C++, I’ve been writing mostly Perl and Python for the past few years. Like many high-end skills, C++ fluency deteriorates with disuse. Plus, it’s no fun any more to care about all the things C++ makes you care about. Still, I turned 117 lines of Python into 143 lines of C++! That was with liberal use of the Boost libraries for regexes and datetime processing, which is where most of the script’s logic lives. The difference was mostly accounted for by curly braces.
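For comparison, the datetime side of the Python version can be as small as one `strptime` call on the bracketed timestamp field. This is a minimal sketch, not the original code: the helper name `parse_log_time` is hypothetical, and `%b` assumes English month abbreviations, which is what you get under the default C locale.

```python
from datetime import datetime, timezone

# Apache-style timestamp such as "10/Oct/2000:13:55:36 -0700".
# %b matches abbreviated month names for the current locale (English under C/POSIX);
# %z parses the +HHMM/-HHMM offset into an aware datetime.
LOG_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def parse_log_time(field):
    """Convert the second captured group into a timezone-aware datetime."""
    return datetime.strptime(field, LOG_TIME_FORMAT)


if __name__ == "__main__":
    ts = parse_log_time("10/Oct/2000:13:55:36 -0700")
    print(ts.isoformat())                           # 2000-10-10T13:55:36-07:00
    print(ts.astimezone(timezone.utc).isoformat())  # 2000-10-10T20:55:36+00:00
```

Keeping the parsed values timezone-aware means logs written under different server offsets compare correctly once they are all converted to UTC.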

The Python regex translated easily into boost::regex syntax:

```cpp
#include <boost/regex.hpp>

boost::regex lineParser{"([(\\d\\.)]+) - - \\[(.*?)\\] \"(.*?)\" (\\d+) (-|\\d+) \"(.*?)\" \"(.*?)\""};
```

Since I’ve written code generators in C++, keeping track of the extra backslashes was not too hard.
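Because C++ and Python share the relevant escape rules (`\\` for a backslash, `\"` for a double quote), one quick way to check a translated pattern is to paste the C++ literal’s contents into an ordinary, non-raw Python string and compare it with the raw-string original. This is a sketch of that sanity check, not something from the original workflow:

```python
import re

# Original Python pattern as a raw string: backslashes go straight to the regex engine
PY_PATTERN = r'([(\d\.)]+) - - \[(.*?)\] "(.*?)" (\d+) (-|\d+) "(.*?)" "(.*?)"'

# Contents of the C++ string literal, pasted into a normal Python string.
# Here \\ becomes one backslash and \" becomes a double quote, just as in C++.
CPP_LITERAL = "([(\\d\\.)]+) - - \\[(.*?)\\] \"(.*?)\" (\\d+) (-|\\d+) \"(.*?)\" \"(.*?)\""

if __name__ == "__main__":
    print(PY_PATTERN == CPP_LITERAL)     # True if the escapes were translated correctly
    print(re.compile(CPP_LITERAL).groups)  # 7 capture groups
```

In C++11 and later, a raw string literal would avoid the doubled backslashes, but it needs a custom delimiter such as `R"re(...)re"`: the plain `R"(...)"` form would end early, because the pattern itself contains `)"`.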

It took a couple of hours to write, but surely it would be worth it in runtime…

The first run took three hours, three times as long as the Python version. (I’m sure Perl would be faster still: there is very little that beats the Perl regex engine as long as you avoid some well-known pathological cases.)

Boost boosters will point out that Boost also offers an alternative to boost::regex that is much faster in benchmarks: Boost.Xpressive. Using its boost::xpressive::sregex type gives us:

```cpp
#include <boost/xpressive/xpressive.hpp>

boost::xpressive::sregex lineParser = boost::xpressive::sregex::compile("([(\\d\\.)]+) - - \\[(.*?)\\] \"(.*?)\" (\\d+) (-|\\d+) \"(.*?)\" \"(.*?)\"");
```

Same regex, compiled with a factory method. The result? About 10% faster than the Python version. This is not nearly enough to justify the time invested in it.

Take-Away Lessons

- Regex performance can vary wildly across languages, and even across libraries within the same language.

- For any given real-world case, there is no guarantee that an inherently faster language will produce much faster regex processing.

- Don’t assume that moving from Python to C++ is faster for regex-heavy text processing tasks.

- Benchmark a minimal test case that uses real-world data first. It’s the only way to be sure.
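As a starting point for that kind of test, here is a minimal sketch that times the Python regex with the standard `timeit` module. The repeated synthetic line is only a placeholder: the whole point of the lesson above is to swap in a sample of your own real logs. The function name and the sample size are arbitrary assumptions.

```python
import re
import timeit

LINE_PARSER = re.compile(
    r'([(\d\.)]+) - - \[(.*?)\] "(.*?)" (\d+) (-|\d+) "(.*?)" "(.*?)"'
)

# Placeholder data: replace with a few thousand lines read from real log files
SAMPLE_LINES = [
    '127.0.0.1 - - [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" '
    '200 2326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"'
] * 10_000


def count_matches(lines):
    """Run the regex over every line and return how many matched."""
    return sum(1 for line in lines if LINE_PARSER.match(line))


if __name__ == "__main__":
    # Best of 5 repeats, one full pass over the sample per repeat
    best = min(timeit.repeat(lambda: count_matches(SAMPLE_LINES), number=1, repeat=5))
    print(f"{len(SAMPLE_LINES)} lines in {best:.3f} s "
          f"({len(SAMPLE_LINES) / best:,.0f} lines/s)")
```

Running the equivalent loop in the candidate language on the same input gives a like-for-like lines-per-second number before committing hours to a port.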

For a quick way to start using Python for text formatting, download ActivePython. It includes a number of useful libraries, and is free to use in development – making it a great solution for benchmarking test cases and prototyping.


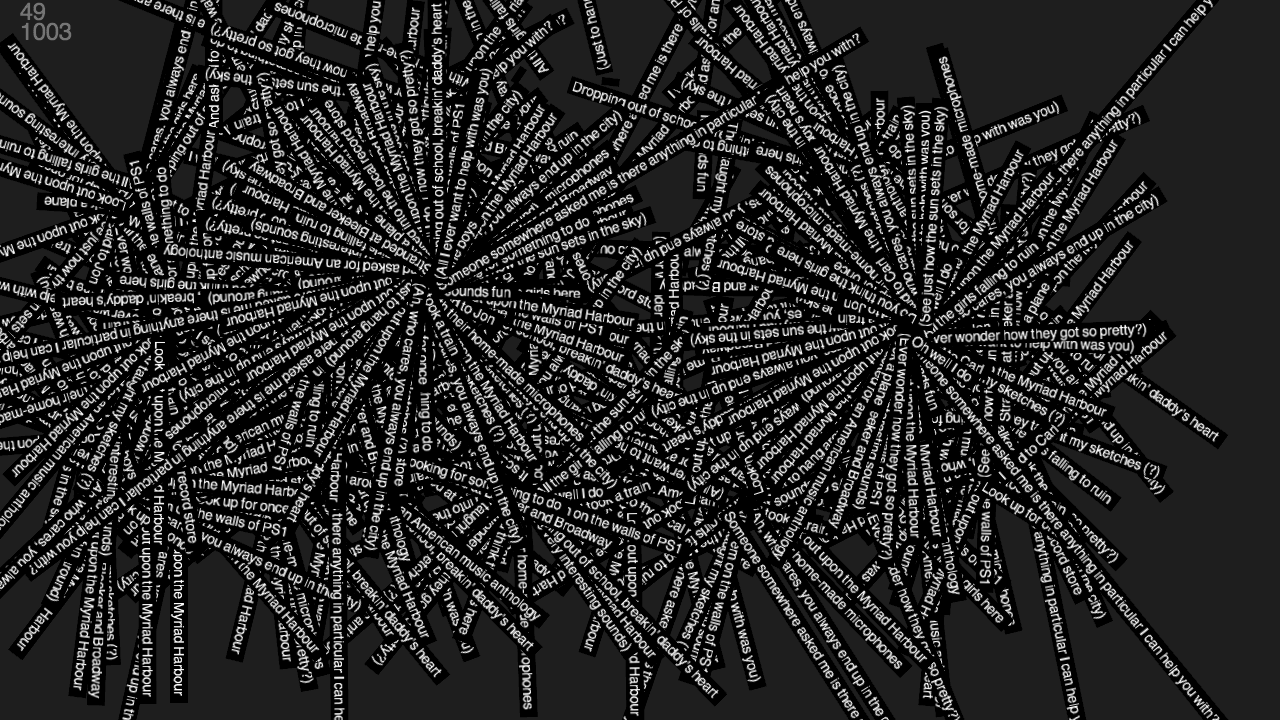

Image source: Jer Thorp at blog.blprnt.com, “Processing Tip: Rendering Large Amounts of Text…Fast!”