Many industries rely on externalities to improve profitability. Most people would recognize that the automobile industry (along with many other manufacturers) externalizes air and water pollution through car and factory emissions. In much the same way, the software industry externalizes the risk of being hacked onto your business through its use of insecure software supply chains. And with the growing reliance on AI to help write software, the problem is only going to get worse.

Anyone who has spent any time experimenting with ChatGPT (whose underlying OpenAI GPT models also power popular code generators like GitHub Copilot) has undoubtedly encountered a number of problems, including:

- Bias – whether racial bias (as seen in facial recognition), gender bias against women, or other forms of bias.

- Hallucinations – when the AI confidently provides replies that are incoherent or simply false (and sometimes humorously so).

- Copyrighted Material – AI trained on copyrighted material can sometimes produce output that closely resembles that material.

These kinds of “errors” are problematic in their own right, and because they typically stem from the data on which the AI systems were trained, any new system trained on the same data will perpetuate the same biases, hallucinations and copyright infringements.

What may be worse is that AI systems are now generating the training data for other AI systems. Whether this is done on purpose using synthetic data generators, or by accident (such as when Mechanical Turk or Fiverr workers use ChatGPT to generate their answers), AI-generated data can “poison” future AI systems by degrading the quality of their output.

Large Language Models (LLMs like ChatGPT) are all the rage, but to become more powerful, they must be trained on ever more data. When that data is itself AI-generated, however, the quality and precision of their responses decrease, because each model converges on ever less varied data. Garbage in, garbage out. Researchers have likened the phenomenon to the AI going mad, dubbing it Model Autophagy Disorder (MAD), and report that it can set in after just five iterations of training on AI-generated data.
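The "less-varied data" mechanism can be illustrated with a toy model. The sketch below is an analogy under simplifying assumptions, not the researchers' experiment: the "model" is just a Gaussian fitted by maximum likelihood to a small sample, and every generation after the first is fitted only to samples drawn from the previous generation's fit. Because the maximum-likelihood variance estimate from a finite sample is biased low, and its sampling noise is never corrected by fresh real data, the fitted spread tends to drift toward zero. The function `simulate_self_training` and its parameters are hypothetical names chosen for this illustration.

```python
import random
import statistics


def simulate_self_training(generations=300, samples_per_gen=20, seed=0):
    """Toy self-consuming training loop (hypothetical illustration).

    The "model" is a Gaussian. Generation 0 matches the real data
    distribution N(0, 1); every later generation is fitted only to
    samples drawn from the previous generation's fitted Gaussian.
    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # "Train" only on synthetic data produced by the previous model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # Maximum-likelihood fit: sample mean and population (biased) std dev.
        mu = statistics.fmean(synthetic)
        sigma = statistics.pstdev(synthetic)
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_self_training()
    for gen in (0, 5, 50, 300):
        print(f"generation {gen:3d}: fitted std dev = {history[gen]:.3g}")
```

Exact values depend on the seed, but with 20 samples per generation the fitted standard deviation typically ends up at a small fraction of its starting value of 1.0 after a few hundred generations. Replacing part of each generation's sample with fresh draws from N(0, 1) is an easy modification to try, since it anchors every fit to real data.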

Unlike nuclear radiation, which at least decays over decades and centuries, this kind of self-replicating pollution (AI generating data that trains more AI systems, which in turn generate more data) compounds over time. AI-generated data is on track to sabotage the very environment in which AI is being grown.

While worrying, the data pollution problem affects only AI systems. Potentially worse is another AI externality, one that affects all software applications.

AI-Generated Code Security Threat

The AI gold rush that followed the phenomenal success of ChatGPT opened a Pandora’s box that nobody has yet come to grips with. After launching ChatGPT capabilities in its Bing search engine, Microsoft CEO Satya Nadella publicly disparaged Google’s cautious approach to releasing its AI-powered solutions. The message was clear: jump on the AI bandwagon or be left behind. The resulting arms race has pressured companies to embrace AI and bring AI products to market faster, before they can be fully vetted.

What does “embrace AI” mean for coders? Unlike the early years of open source, when enterprise adoption was extremely slow, AI uptake has been meteoric. By Microsoft’s estimates, GitHub Copilot, which uses OpenAI models to auto-generate code, is responsible for between 46% (all languages) and 64% (Java) of the code written by the developers who use it. That’s an incredible figure given that Copilot launched less than two years ago, in October 2021.

Unfortunately, studies have shown that AI-generated code leaves much to be desired when it comes to security. For example:

- A Stanford University team found that developers who use AI assistants are more likely to introduce security vulnerabilities into the software they write. The problem is that while the generated code appears superficially correct, it can invoke compromised software or rely on insecure configurations. More worrying still, only 3% of the AI-assisted developers actually wrote secure code, even though the rest believed they had.

- A Université du Québec team used ChatGPT to generate 21 programs in five languages: C, C++, Python, HTML and Java. On review, only 5 of the 21 programs were deemed secure.

- A New York University study found that 40% of the AI-generated code it examined was either buggy or vulnerable to attack.
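To make "superficially correct" concrete, here is a hypothetical illustration (not taken from any of the studies above) of a classic vulnerable pattern: a database lookup that passes a happy-path test but builds its SQL by string formatting. It uses Python's built-in `sqlite3` module with an in-memory database; the table and function names are made up for this example.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])
    return conn


def find_user_unsafe(conn, name):
    # Looks correct and passes a happy-path test, but splices untrusted
    # input directly into the SQL text: a classic SQL injection bug.
    query = f"SELECT name, is_admin FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: sqlite3 binds `name` as a value, never as SQL.
    query = "SELECT name, is_admin FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    print(find_user_unsafe(conn, "bob"))  # [('bob', 0)]
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(find_user_safe(conn, payload))  # []
```

Both functions return the same result for an ordinary name, which is exactly why the unsafe version can slip through review. Only the parameterized version treats the attacker's payload as a literal name rather than as part of the query.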

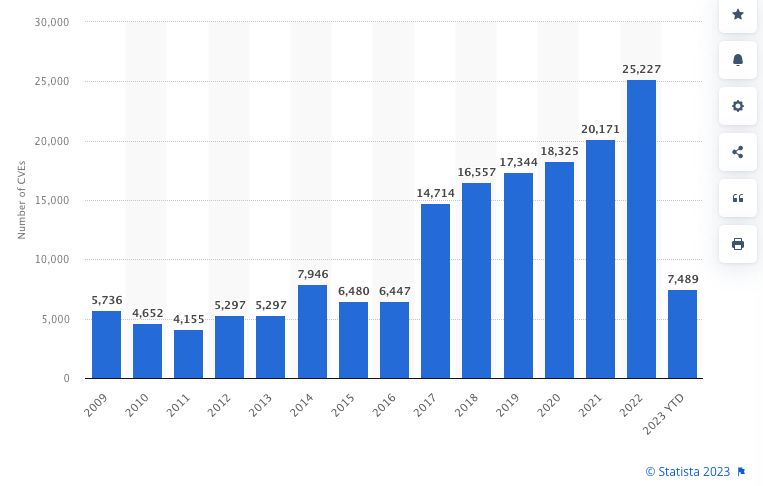

The productivity boost developers gain from AI assistants is fantastic, but the tradeoff is more buggy and insecure code, at a time when the number of reported vulnerabilities is already accelerating:

Source: Number of common IT security vulnerabilities and exposures (CVEs) worldwide from 2009 to 2023 YTD

The vulnerability spike in 2022 is especially striking given that AI assistants began to see wide adoption in 2021, and it may be a sign of things to come. The more vulnerable libraries are incorporated into AI applications that are rushed to market, the greater the security problem will become. Containing this growing wildfire is imperative if the software industry is to secure its software supply chains against the mounting cyberthreat.

Conclusions

AI-based code-writing tools unquestionably increase productivity, but developers must remain aware of the bugs and security issues those tools can generate. Unfortunately, as pressure mounts to release faster and more often, too much software ships without thorough security checks. Integrating AI assistants into the coding process will only exacerbate this issue.

The good news is that best practices for secure software development haven’t changed, despite the addition of AI:

- Start Secure – ensure that your programming language and open source packages are up to date, and have been built and sourced securely. ActiveState can help.

- Understand The Tooling – no matter what tooling you prefer to code with, understanding it will make you a better programmer. The same is true for any AI assistants you use: understand their limitations and pitfalls, and compensate accordingly. For example, ChatGPT-generated code is well suited to some use cases, but not others.

- Adopt Standards – there are numerous standards and frameworks that can help ensure the software you create is secure, including modern frameworks like SLSA (Supply-chain Levels for Software Artifacts) that can help keep your software supply chain secure even as you incorporate AI-generated code. Learn more about SLSA.

Next Steps:

Starting secure is half the battle. Sign up for a free ActiveState account and see how easy it is to generate a secure runtime environment for your project.