The Task: How to Write Good Code using AI

What does it mean to write good code? While several authors have discussed their thoughts about it, day-to-day programming mostly consists of debugging and tracking existing code, which is almost as important as writing new code.

The good news is that the new AI-ssistants (yeah, I made up that word) cover a number of different use cases, including:

- Writing new code based on not-so-simple descriptions.

- Explaining existing code (meaning code that has already been written) in simple words.

- Testing existing code.

- Checking for defects in existing code.

- Documenting existing code.

- Translating code from one programming language to another.

Note that not all AI code generators in this comparison can do all of the tasks in this list. Depending on the scope of your work, you may need to adopt more than one tool. In addition, there are other non-technical criteria that you should consider when evaluating tools, such as:

- Cost

- Security

- Adoption barriers

- Community support

In general, AI code generators tools come in two types: chatbots and code completion tools. Let’s take a look at each in turn.

Text-to-Code AI Assistants

DeepMind’s research lab produced AlphaCodeto solve the problem of trying to generate an entire program “starting from a long natural language task description.” Although it’s not yet available to be used directly (as of the time of writing), they’ve provided a page of competitive programming problems that have been trained. From those, I have chosen the book reader problem, which is tagged to be solved using graphs. The problem statement is as follows:

You are given a book with n chapters.

Each chapter has a specified list of other chapters that need to be understood in order to understand this chapter. To understand a chapter, you must read it after you understand every chapter on its required list.

Currently you don't understand any of the chapters. You are going to read the book from the beginning till the end repeatedly until you understand the whole book. Note that if you read a chapter at a moment when you don't understand some of the required chapters, you don't understand this chapter.

Determine how many times you will read the book to understand every chapter, or determine that you will never understand every chapter no matter how many times you read the book.

Input

Each test contains multiple test cases. The first line contains the number of test cases t (1 ≤ t ≤ 2⋅10^4).

The first line of each test case contains a single integer n (1 ≤ n ≤ 2⋅10^5) — number of chapters.

Then n lines follow. The i-th line begins with an integer k_i (0 ≤ k_i ≤ n-1) — number of chapters required to understand the i-th chapter. Then k_i integers a_{i,1}, a_{i,2}, ..., a_{i, k_i} (1 ≤ a_{i, j} ≤ n, a_{i, j} ≠ i, a_{i, j} ≠ a_{i, l} for j ≠ l) follow — the chapters required to understand the i-th chapter.

It is guaranteed that the sum of n and sum of k_i over all testcases do not exceed 2⋅10^5.

Output

For each test case, if the entire book can be understood, print how many times you will read it, otherwise print -1.

The solution given by the model is a simple Python function:

from sys import stdin, stdout def deepmind_solution(): input = stdin.readline print = stdout.write for _ in range(int(input())): n = int(input()) a = [list(map(int, input().split())) for i in range(n)] ans = 0 k = 0 b = [0] * n while k < n: l = 0 for i in range(n): if b[i] == 0: c = 0 for j in range(1, a[i][0] + 1): if b[a[i][j] - 1] == 1: c += 1 if c == a[i][0]: b[i] = 1 l += 1 if l == 0: ans = -1 break else: ans += 1 k += l print(str(ans) + '\n')

This is the baseline code that we will use to test the text-to-code tools in our list. Using the same problem statement, we will compare the solutions returned by the other tools, and sometimes enhance the code with comments/tests/translations.

1 — ChatGPT

ChatGPT has democratized access to Artificial Intelligence (AI). Although developers and even the general public had access to models that could analyze and create images, chat with people, and even interactively learn(among other things) before, none of that was close to what non-developers have discovered through the power of Large Language Models (LLMs) like ChatGPT. Now that a simple-to-use chatbot can not only instantly provide useful information but also pass the Turing test, we are all aware that the singularity is closer than ever.

Singularities aside, I provide ChatGPT with the same prompt from our AlphaCode example and get the following working solution:

from collections import deque def understand_book(t, test_cases): for i in range(t): n = test_cases[i][0] req = [[] for _ in range(n)] for j in range(n): k, *lst = test_cases[i][j+1] for l in lst: req[l-1].append(j) indegree = [len(x) for x in req] queue = deque([x for x in range(n) if indegree[x] == 0]) cnt = 0 while queue: for _ in range(len(queue)): node = queue.popleft() cnt += 1 for neighbor in req[node]: indegree[neighbor] -= 1 if indegree[neighbor] == 0: queue.append(neighbor) if cnt != n: print(-1) else: print(len(queue))

But that wasn’t the only thing the bot produced. It also included an explanation of the algorithm:

The function takes two parameters: t, the number of test cases, and test_cases, a list of test cases, where each test case is a list of integers representing the number of chapters and their required chapters. The function first initializes an empty list req, where req[i] is a list of the chapters that need to be understood in order to understand chapter i. It then populates req based on the input. The function also initializes a list indegree, where indegree[i] is the number of chapters that require chapter i in order to be understood. It then populates indegree based on req. The function uses a queue queue to keep track of chapters that can currently be read. It first adds all chapters with an indegree of 0 to the queue. It also initializes a counter cnt to keep track of the number of chapters read. The function then enters a loop that removes a chapter from queue, increments cnt, and updates the indegrees of its neighbors. If the indegree of a neighbor becomes 0 as a result of this update, the neighbor is added to queue. The loop continues until queue is empty, meaning that no more chapters can currently be read. At this point, if cnt is equal to n, the function prints the number of times the book needs to be read (which is the length of queue), otherwise it prints -1 to indicate that the entire book cannot be understood no matter how many times it is read.

In fact, this is a good, implementation-based explanation that could serve as documentation for the function. As expected, you can take it further and ask for a natural language explanation:

The function uses a graph-based approach to solve the problem. Each chapter is represented as a node in the graph, and an edge is drawn from chapter u to chapter v if v is required to understand u. A chapter can be read only when all the chapters required to understand it have already been read. Therefore, the problem can be reduced to finding the minimum number of times the entire graph can be traversed (i.e., the entire book can be read) such that all nodes can be reached. The function first loops through each test case. For each test case, it initializes an empty list req, where req[i] is a list of the chapters that need to be understood in order to understand chapter i. It then populates req based on the input. For example, if chapter 1 requires chapters 2 and 3 to be understood, then req[0] = [1, 2]. The function also initializes a list indegree, where indegree[i] is the number of chapters that require chapter i in order to be understood. It then populates indegree based on req. For example, if chapter 2 and chapter 3 both require chapter 1 to be understood, then indegree[0] = 2. The function uses a queue queue to keep track of chapters that can currently be read. It first adds all chapters with an indegree of 0 to the queue. This is because a chapter can

ChatGPT can also compare its own solution to DeepMind’s solution:

Both solutions use a similar approach to solve the problem: processing a list of lists and determining the minimum number of iterations needed to process all elements in the list. However, they use different algorithms to achieve this. The first solution uses a greedy algorithm that iteratively processes elements that have all their dependencies already processed. It uses a while loop to iterate until all elements have been processed. For each iteration, it checks every element in the list and marks as processed any element that has all its dependencies already processed. If no elements were processed during the current iteration, the code stops and outputs -1. The second solution uses a topological sorting algorithm to process the elements in the list of lists. It builds a graph where each node represents an element in the list, and each edge represents a dependency between two elements. It then performs a topological sort of the graph to determine the minimum number of iterations needed to process all elements. The first solution is simpler and easier to understand, but it may be less efficient for large input sizes since it processes each element in the list multiple times. The second solution is more complex but may be more efficient for large input sizes since it uses a topological sort algorithm, which has a time complexity of O(V+E). Overall, both solutions seem correct and efficient for small to medium-sized inputs. However, the second solution may be a better choice for large input sizes.

ChatGPT checks all of the boxes in our functional test. It is able to write, test, and check code.

Finally, we’ll ask ChatGPT to translate it to Java and check it with a test case. As you can see, it’s a piece of cake for ChatGPT:

import java.util.*;

public class Solution {

public static void understandBook(int t, int[][] testCases) {

for (int i = 0; i < t; i++) {

int n = testCases[i][0];

List<List<Integer>> req = new ArrayList<>();

for (int j = 0; j < n; j++) {

int k = testCases[i][j+1][0];

List<Integer> lst = new ArrayList<>();

for (int l = 1; l <= k; l++) {

lst.add(testCases[i][j+1][l]-1);

}

req.add(lst);

}

int[] indegree = new int[n];

for (int j = 0; j < n; j++) {

for (int neighbor : req.get(j)) {

indegree[neighbor]++;

}

}

Queue<Integer> queue = new LinkedList<>();

for (int j = 0; j < n; j++) {

if (indegree[j] == 0) {

queue.offer(j);

}

}

int cnt = 0;

while (!queue.isEmpty()) {

int size = queue.size();

for (int j = 0; j < size; j++) {

int node = queue.poll();

cnt++;

for (int neighbor : req.get(node)) {

indegree[neighbor]--;

if (indegree[neighbor] == 0) {

queue.offer(neighbor);

}

}

}

}

if (cnt != n) {

System.out.println("-1");

} else {

System.out.println(queue.size());

}

}

}

}

And here are the test results:

import org.junit.Test;

import static org.junit.Assert.*;

public class UnderstandBookTest {

@Test

public void testUnderstandBook() {

int t = 2;

int[][] testCases = {

{3, 2, 1, 2, 3},

{3, 2, 3, 1, 2}

};

UnderstandBook understandBook = new UnderstandBook();

int[] expected = {-1, 1};

for (int i = 0; i < t; i++) {

int result = understandBook.understandBook(t, testCases)[i];

assertEquals(expected[i], result);

}

}

}

Pros:

- Fast and simple to use

- Cost effective since it uses a SaaS model where the unit of measurement is a token (which can be understood as a piece of a word), with 1000 tokens equivalent to about 750 words. You also get access to several other services, including InstructGPT and Codex.

- You can also use ChatGPT within Visual Studio Code with the RubberDuck extension.

Cons:

- The main concern is with the security of the information, since any data provided in a prompt or result can be used to train the model. Recently, there have been stories about a certain large company’s reckless behaviour due to their failure to understand the terms of service, which resulted in the leakage of sensitive company data that was integrated by default into the model’s training.

2 — ColossalChat

There are several other LLMs available on the market (and more on the way), but it’s hard to come close to ChatGPT’s performance. Perhaps the closest is ColossalChat, which is an open-source clone that has been trained using the Reinforcement Learning from Human Feedback (RLHF) pipeline. It can achieve comparable results to ChatGPT and GPT-3.5 on certain tasks.

ColossalChat is part of the Colossal-AI collection of user-friendly tools that can kickstart distributed training and inference in just a few lines of code. The available models include:

- AlphaFold for biomedicine

- Stable Diffusion for image processing

In our test, the code generated for the same AlphaCode problem we used earlier looks legitimate at first glance:

def aphyrhon(n):

# Initialize visited set and current_chapters

visited = {}

current_chapters = []

# Iterate over all possible combinations of chapters

for i in range(n + 1):

# Add current chapter to current_chapters

current_chapters.append(i)

# Check whether current chapter is already visited

if i in visited:

continue

# Mark current chapter as visited

visited[i] = True

# Recursively check remaining unvisited chapters

for j in range(i+1, n+1):

if j not in visited:

aphyron(j)

return len(current_chapters) - 1 # Returning length of current_chapters since we have to visit them all

But the verdict from ChatGPT is not so good:

No, the solution you provided is not a valid solution for the same problem. The code is missing some critical elements that are necessary to solve the problem. The code you provided appears to use a recursive function called aphyrhon to visit all the chapters in the book. However, the code does not define the aphyrhon function or provide any details about how it works. Therefore, it is not clear how the code is intended to work. Additionally, the code does not use any data structures to represent the dependencies between chapters. In the original problem, each chapter has a list of dependencies that must be completed before the chapter can be read. The code you provided does not account for these dependencies, so it cannot accurately determine the minimum number of iterations needed to complete all chapters. Finally, the code does not output the correct result. The len(current_chapters) - 1 expression is intended to return the number of chapters that were visited, but this is not the same as the minimum number of iterations needed to complete all chapters. The correct output for the problem is the minimum number of iterations needed to complete all chapters, not the number of chapters visited.

Therefore, the code you provided is not a valid solution for the problem.

Pros:

- An open source solution that’s capable of understanding such a complex problem description is an amazing achievement.

- ColossalChat can sustain text conversations in natural language

Cons:

- Code capabilities seem to be outdated.

- Availability is one of the greatest concerns with the demo URL being periodically unreachable

- Further analysis (for example, trying to test and explain the code) was not possible once the solution was provided.

3 — Bard

Bard, Google’s attempt to compete with ChatGPT, is the new kid on the block. Bard had a rough initial public presentation (which caused quite a fiasco), but a recent blog postfrom Google claims that the experiment can now check all our boxes. Access to Bard is still closed to early adopters, and the costs, terms of security, and related criteria are still not completely clear.

IDE Code Assistant Plugins

Another, more developer-focused set of tools for improving code-related productivity are the code assistants offered as IDE plugins. This approach lets developers assign operational tasks to the assistant (which is able to deliver based on the editor’s context).

4 — Microsoft Copilot

The gold standard is set by Microsoft’s Copilot plugin for Neovim, JetBrains, Visual Studio, and the Visual Studio Code IDEs. You can use it in Codium (which is a telemetry-free version of VSCode) and similar alternatives with a single line of JavaScript.

Its capabilities are based on a simple code suggestion flow, which includes the following:

- Convert comments to code.

- Create unit tests.

- Create SQL queries.

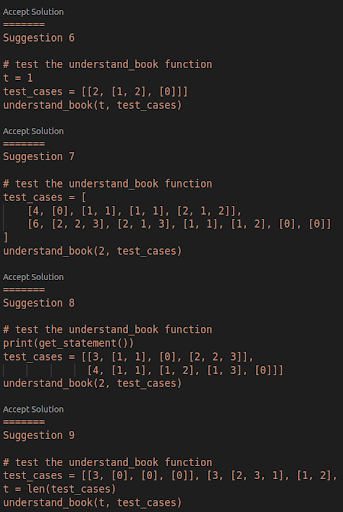

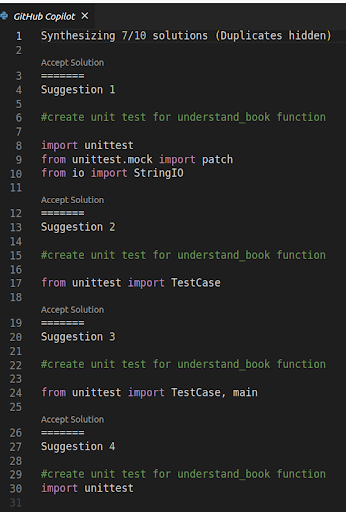

The plugin uses OpenAI’s Codex model to generate the suggestions in real time. For our review, we asked it to test the solution provided by ChatGPT. Here are the results:

However, explicit unit testing is not covered (even if you ask explicitly):

Pros:

- Copilot comes with a free 30-day trial and offers subscription plans for individuals and organizations.

- Explicit implementation of simple tasks (similar to what has been achieved on text editors like Google Docs and Office 365).

- Enhanced auto-completion of code. The following example shows the results of the completion for the function specified as a comment in Python:

# a function named code_whiz to read csv files line by line and log the data if the line contains a column of int type

def code_whiz():

with open('data.csv') as csv_file:

csv_reader = csv.reader(csv_file, delimiter=',')

for row in csv_reader:

if row[1].isdigit():

logging.info(row)

Cons:

- Copilot gathers user engagement data and code snippet data, but retention of that data differs for individuals and businesses. Check Copilot’s FAQ for more information.

- Text explanations from source functions are not completed (these may be out of the assistant’s scope).

5 — AWS CodeWhisperer

Amazon has recently released an extension for several IDEs to provide “AI-ssistant” tools. The CodeWhisperer service consists of a plugin that works with:

- AWS Lambda function editor

- IntelliJ Idea

- PyCharm

- AWS Cloud9 (a cloud-based IDE)

- Visual Studio Code (or VSCodium without any modification)

Testing CodeWhisperer’s code writing capabilities is kind of limited, but some simple tasks can be accelerated easily with explicit explanations:

# a function named code_whiz to read csv files line by line and log the data if the line contains a column of int type def code_whiz(filename): with open(filename, "r") as f: reader = csv.reader(f) for row in reader: if row[0].isdigit(): logging.info(row)

In this case, the function code was generated from the comment line. AWS CodeWhisperer is especially suitable for generating code from the AWS SDK APIs (like boto3). With its free tier, the plugin also offers up to 50 security scans per month (which can detect bad practices such as credentials logging, among others).

Pros:

- Shows when a suggestion is highlighted as being distributed under an open-source license.

- Free to use. However, the documentation states, “beginning in May 2023, AWS may begin to charge you for using CodeWhisperer, depending on how you use it.”

Cons:

- Collects both usage telemetry and content data at the free tier. At the professional tier, it collects only usage telemetry.

- However, you can also opt out of sharing your client-side telemetry by modifying the default configuration.

- Niche product oriented towards promoting the AWS SDK toolset.

- It would be interesting to test its ability to create code that uses SDKs from other public cloud providers, like Google or Microsoft.

6 — CodeGeeX

CodeGeeX, which was created in China, is a multilingual code generator. It can be used to create new code from explicit natural language descriptions. Below, we will compare its suggested implementation with the previous one from AWS CodeWhisperer:

# a function named code_whiz to read csv files line by line and log the data if the line contains a column of int type

def ichizo(filename):

with open(filename) as f:

for line in csv.reader(f):

if int(line[-1]) == 1:

print line

ichizo('ichizo.csv')

As you can see from the print message, the code seems to be in the style of Python 2. Also, some conditions were not met, such as the function name and logging usage (instead of print). More complicated descriptions (like the previous AlphaCode example) are simply out of scope. But the good news is that CodeGeeX is trained to document code. The results from the ChatGPT solution for the AlphaCode competitive problem are as follows:

from collections import deque def understand_book(t, test_cases): # loop through the test cases for i in range(t): # get the number of nodes and edges n = test_cases[i][0] # create an empty list for the edges req = [[] for _ in range(n)] # loop through the edges for j in range(n): # get the number of nodes and edges k, *lst = test_cases[i][j+1] # loop through the nodes for l in lst: # add the edge to the list req[l-1].append(j) # create a list of indegree of each node indegree = [len(x) for x in req] # create a queue of nodes with indegree 0 queue = deque([x for x in range(n) if indegree[x] == 0]) # create a counter to keep track of the number of nodes cnt = 0 # loop through the queue while queue: # loop through the nodes for _ in range(len(queue)): # get the node node = queue.popleft() # increment the counter cnt += 1 # loop through the edges for neighbor in req[node]: # decrement the indegree of the neighbor indegree[neighbor] -= 1 # check if the neighbor has indegree 0 if indegree[neighbor] == 0: # add the neighbor to the queue queue.append(neighbor) # check if the counter is equal to the number of nodes if cnt!= n: # print -1 if it is not print(-1) # otherwise print the number of nodes in the queue else: print(len(queue))

It’s clear that a consistent natural-language description of the global solution is beyond its limits, but the line-by-line documentation might be helpful for understanding the code.

CodeGeeX can also translate the function to other languages (like Java). In the image below, you can compare ChatGPT’s translation of the original ChatGPT Python code into Java (left) with CodeGeeX’s translation of the same (right):

ChatGPT explains the difference between the two implementations:

No, the second code is not equivalent to the first code. Although both codes aim to determine the minimum number of pages needed to understand a book, they use different algorithms to solve the problem. The first code uses a graph algorithm that creates a directed graph where each chapter is a node and each requirement is an edge pointing to the dependent chapter. It then computes the in-degree of each node, which represents the number of prerequisites that must be satisfied before the chapter can be read. The algorithm then uses a queue to perform a topological sort of the graph, starting with the nodes with zero in-degree. At each iteration, it updates the in-degree of the neighboring nodes and adds them to the queue if their in-degree becomes zero. The algorithm continues until all nodes have been processed, and it returns the minimum number of pages needed to understand the book. The second code uses a simpler algorithm that computes the total number of pages for each subject and then treats each subject as a separate problem. For each subject with non-zero pages, the algorithm creates a graph where each page is a node and the requirements are edges pointing to the dependent pages. It then uses a queue to perform a breadth-first search starting with the pages with zero requirements. At each iteration, it updates the in-degree of the neighboring nodes and adds them to the queue if their in-degree becomes one. The algorithm continues until all nodes have been processed, and it returns 1 if all subjects have been understood, and 0 otherwise.

It’s curious that the translations differ in approach when the tools were given the same original source code.

Pros:

- Free to use

- CodeGeeX is open source software, including its models and weights! By customizing the model and deploying on premise organizations can get a tailored solution without worrying about privacy concerns.

Cons:

- Outdated Python 2 model

- Complex descriptions are not supported

7 — TabNine

Another contender in the IDE “AI-ssistant” category is TabNine, which provides auto-completion of current lines and entire functions in 25 languages/frameworks for the most popular IDEs on the market, including:

- Visual Studio Code (VScode)

- Emacs

- Vim

- Jupyter Notebooks

- Sublime

- Eclipse

New code can be added using suggestions from the comments:

# a function named code_whiz to read csv files line by line and log the data if the line contains a column of int type def code_whiz(filename): with open(filename, "r") as f: reader = csv.reader(f) for row in reader: logging.info(row)

If you compare the results to those of CodeWhisperer and CodeGeeX, you will notice similarities to the AWS product. However, the code lacks some of the validations defined in the comments. TabNine does a decent job of trying to suggest one-liner completions, but it feels a little limited for a product that starts at $15/month.

Pros:

- Guarantees that your code always remains private

- Only uses open source code that features permissive licenses (MIT, Apache 2.0, BSD-2-Clause, and BSD-3-Clause).

- Lets you opt out of the integrated telemetry measurement.

Cons:

- Expensive for a limited product

8 — Codeium

Codeium, a free-forever “AI-ssistant” for individuals that offers plugins for multiple IDEs, including:

- Emacs

- Web-based IDEs that use the Chrome extension (i.e., Jupyter Notebooks and AWS Code9).

It can auto-complete code (based on the current line of the editor), as well as complete function generation for more than 20 programming languages. Here’s an example from our test (using Python):

# a function named code_whiz to read csv files line by line and log the data if the line contains a column of int type def code_whiz(filename): with open(filename) as f: reader = csv.reader(f) for row in reader: if row[0].isdigit(): logging.info(row)

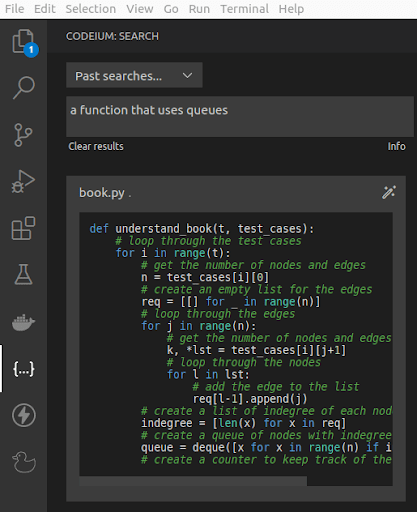

Its most interesting (experimental) capability is the ability to search project code. In this case, we searched for a specific function in Python that was generated using ChatGPT:

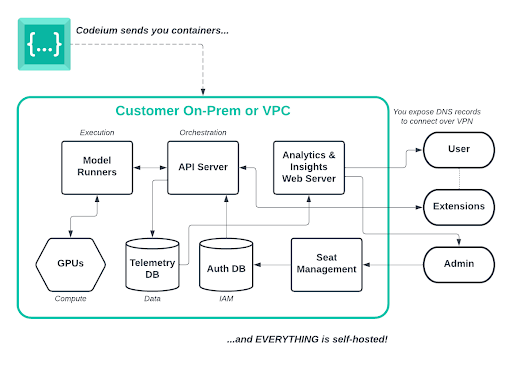

This might be interesting for exploring the code and better understanding code dependencies. The enterprise plan offers a self-hosted VPC deployment that guarantees local execution of the customized models:

Codeium (Image Source: https://codeium.com/enterprise)

Codeium’s chat functionality is comparable to ChatGPT’s in that it responds to the original AlphaCode problem description with an explanation of the solution:

This problem can be solved using topological sorting. First, we need to build a directed graph where each chapter is a node and there is a directed edge from chapter i to chapter j if chapter j is required to understand chapter i. Then, we can perform a topological sort on this graph to get a linear ordering of the chapters such that if chapter i comes before chapter j in the ordering, then all the required chapters for chapter i also come before chapter j. If the graph has a cycle, then it is impossible to understand every chapter no matter how many times we read the book, since we will never be able to understand the chapters in the cycle. Otherwise, we can read the book in the order given by the topological sort. The number of times we need to read the book is equal to the length of the longest path in the graph, which can be computed using dynamic programming.

And, of course, a proposed solution in Python:

from collections import deque def topological_sort(graph, in_degrees): # Initialize queue with nodes that have no incoming edges q = deque([i for i, deg in enumerate(in_degrees) if deg == 0]) order = [] while q: node = q.popleft() order.append(node) # Decrement in-degree of each neighbor and add to queue if in-degree becomes 0 for neighbor in graph[node]: in_degrees[neighbor] -= 1 if in_degrees[neighbor] == 0: q.append(neighbor) # If there are still nodes with positive in-degree, there is a cycle and topological sort is not possible if any(in_degrees): return None else: return order def read_book(): t = int(input()) for _ in range(t): n = int(input()) # Build graph and in-degree array graph = [[] for _ in range(n)] in_degrees = [0] * n for i in range(n): dependencies = list(map(int, input().split()))[1:] for dep in dependencies: graph[dep - 1].append(i) in_degrees[i] += 1 # Perform topological sort order = topological_sort(graph, in_degrees) if order is None: print(-1) continue # Compute longest path using dynamic programming dp = [0] * n for node in order: for neighbor in graph[node]: dp[neighbor] = max(dp[neighbor], dp[node] + 1) print(max(dp) + 1) read_book()

ChatGPT’s analysis of the solution is quite interesting:

Yes, the code is a valid solution to the problem. The topological_sort function correctly performs a topological sort on the graph to detect if there is a cycle, which would mean that it is impossible to understand every chapter no matter how many times the book is read. If the graph is acyclic, the order list returned by topological_sort correctly represents the order in which the chapters should be read, and the dp array is correctly used to compute the length of the longest path in the graph. The code then outputs the length of the longest path plus one as the number of times the book should be read to understand every chapter. Overall, the code is well-organized and easy to understand.

And when asked to compare the solution to the one provided by ChatGPT, it concludes that Codeium’s analysis is pretty good:

This solution also implements topological sorting, but in a slightly different way. Instead of using a separate function for topological sorting, it directly builds the graph and in-degree array, and then performs the sort using a queue in the main function. The approach to finding the answer after the sort is also slightly different, as it uses a counter to keep track of the number of nodes processed instead of computing the longest path using dynamic programming. Overall, both solutions should be correct and have the same time complexity of O(n + m), where n is the number of chapters and m is the total number of required chapters across all chapters. The main difference is in the implementation details and style.

Of course, Codeium’s chat can also translate the solution into Java:

import java.util.*;

public class UnderstandBook {

public static List<Integer> topologicalSort(List<List<Integer>> graph, int[] inDegrees) {

Queue<Integer> q = new LinkedList<>();

List<Integer> order = new ArrayList<>();

// Initialize queue with nodes that have no incoming edges

for (int i = 0; i < inDegrees.length; i++) {

if (inDegrees[i] == 0) {

q.offer(i);

}

}

while (!q.isEmpty()) {

int node = q.poll();

order.add(node);

// Decrement in-degree of each neighbor and add to queue if in-degree becomes 0

for (int neighbor : graph.get(node)) {

inDegrees[neighbor]--;

if (inDegrees[neighbor] == 0) {

q.offer(neighbor);

}

}

}

// If there are still nodes with positive in-degree, there is a cycle and topological sort is not possible

if (order.size() != inDegrees.length) {

return null;

} else {

return order;

}

}

public static void readBook() {

Scanner scanner = new Scanner(System.in);

int t = scanner.nextInt();

for (int i = 0; i < t; i++) {

int n = scanner.nextInt();

// Build graph and in-degree array

List<List<Integer>> graph = new ArrayList<>();

for (int j = 0; j < n; j++) {

graph.add(new ArrayList<>());

}

int[] inDegrees = new int[n];

for (int j = 0; j < n; j++) {

int k = scanner.nextInt();

for (int l = 0; l < k; l++) {

int dep = scanner.nextInt() - 1;

graph.get(dep).add(j);

inDegrees[j]++;

}

}

// Perform topological sort

List<Integer> order = topologicalSort(graph, inDegrees);

if (order == null) {

System.out.println(-1);

continue;

}

// Compute longest path using dynamic programming

int[] dp = new int[n];

for (int node : order) {

for (int neighbor : graph.get(node)) {

dp[neighbor] = Math.max(dp[neighbor], dp[node] + 1);

}

}

System.out.println(Arrays.stream(dp).max().getAsInt() + 1);

}

}

public static void main(String[] args) {

readBook();

}

}

Its capabilities also extend to unit testing:

import unittest class TestReadBook(unittest.TestCase): def test_read_book(self): test_cases = [ (3, [(3, 0), (2, 1, 2), (1, 2), (2, 3, 1), (2, 2, 3)]), # Expected output: 1 2 -1 (2, [(3, 2, 1, 3), (2, 2, 3)]), # Expected output: 2 2 (1, [(5, 2, 1, 3, 4, 0), (3, 2, 5, 1), (1, 4), (1, 4), (1, 4)]) # Expected output: 1 ] expected = [1, 2, -1, 2, 2, 1] for i, (t, test_case) in enumerate(test_cases): with self.subTest(test_case=test_case): self.assertEqual(read_book(t, test_case), expected[i]) if __name__ == '__main__': unittest.main()

When it comes to security, Codeium states:

- You can opt out of code snippet telemetry at any time

- All communication is encrypted

- Never train generative models on private data

- Do not train models on repositories with non-permissive licenses like GPL

- This is a clear difference when compared to Microsoft Copilot

Pros:

- Ability to search project code

- Self-hosted VPC deployment option

Cons:

- None

Conclusions: AI Code Assistant Efficiency

AI code assistants are now in common use by developers who appreciate the ability to automate common tasks, generate code comments/documentation and generally avoid scut work. While there have been some concerns about the security of auto-generated code, the productivity gains outweigh the drawbacks. In fact, GitHub Copilot estimates that between 46% and 61% of code on GitHub is AI-generated.

Whether you prefer to call the code assistent from within your IDE, or whether you prefer to take suggestions from web-based chatbots, the results from our assessment show that many of the available AI coding solutions are good enough at this point in time to significantly increase your productivity.

Next Steps

The best way to get familiar with AI-assisted Python code generation is by downloading a free copy of ActiveState Python, which will be automatically installed into a virtual environment. This provides you with a sandbox for experimentation without impacting your existing projects/installations.

Read Similar Stories