DevOps (and DevSecOps) teams often face a long queue of repetitive tasks involved in deploying, monitoring and securing their organization’s applications and services. As a result, DevOps has embraced automation, which in 2023 means embracing Python.

No other language offers Python’s combination of flexibility, ease of use and a wide range of open source libraries that make short work of repetitive tasks, from configuration management to log parsing to deploying applications and infrastructure.

Key DevOps use cases include:

- Infrastructure as Code (IaC): Python can be used to define and provision infrastructure using tools like Terraform or AWS CloudFormation, allowing for version-controlled infrastructure deployment.

- Continuous Integration (CI): Python scripts can be integrated into CI/CD pipelines using tools like Jenkins, Travis CI or GitLab CI to perform build, test, and deployment tasks.

- Deployment: Python is used to create scripts for deploying applications to various environments, including virtual machines, containers (Docker), and serverless platforms.

- Monitoring and Alerting: Python can be used to create custom monitoring and alerting solutions, working with tools like Prometheus and Grafana (for example, through their HTTP APIs or the prometheus_client library), or integrating with systems like Nagios and Zabbix.

- Log Analysis: Python scripts can parse and analyze log files, helping to identify issues and trends in application and system logs. A prime example is the ELK Stack (Elasticsearch, Logstash and Kibana), whose libraries can be integrated with Python.

- Configuration Management: Python can be used to manage configuration files and ensure consistency across servers using tools like Puppet, Chef or Ansible.

- Container Orchestration: Python scripts can interact with container orchestration platforms like Kubernetes to automate tasks such as scaling, deployment and resource management.

- Security: Python can be used for security-related tasks like vulnerability scanning, penetration testing and security automation, making it an essential tool for DevSecOps.

- Cloud Automation: Python has extensive libraries and SDKs for cloud providers like AWS, Azure and Google Cloud, enabling DevOps teams to automate cloud resource provisioning and management.

Let’s examine each use case in turn, and work through an example of how you can use Python to help automate each of them.

Install a Prebuilt Python DevOps Tools Environment

To follow along with the code in this article, you can download and install our pre-built Python Tools for DevOps environment, which contains:

- Python 3.10

- All the tools and libraries listed in this post (along with their dependencies) in a pre-built environment for Windows, Mac and Linux

In order to download this ready-to-use Python project, you will need to create a free ActiveState Platform account. Just use your GitHub credentials or your email address to register. Signing up is easy and it unlocks the ActiveState Platform’s many other dependency management benefits.

Windows users can install the Python Tools for DevOps runtime into a virtual environment by downloading and double-clicking on the installer. You can then activate the project by running the following command at a Command Prompt:

```
state activate --default Pizza-Team/Python-Tools-for-DevOps
```

For Mac and Linux users, run the following to automatically download, install and activate the Python Tools for DevOps runtime in a virtual environment:

```
sh <(curl -q https://platform.www.activestate.com/dl/cli/359260522.1694811948_pdli01/install.sh) -c'state activate --default Pizza-Team/Python-Tools-for-DevOps'
```

Best Python Packages for DevOps Use Cases

The Python ecosystem has an embarrassment of riches when it comes to libraries that can help automate DevOps tasks, so much so that Python is becoming an essential skill for DevOps engineers. The good news is that you don’t need to be an app developer to work with it: all you really need is the ability to modify and extend Python scripts in order to automate the majority of your day-to-day tasks.

To that end, each of the following use cases comes with an example script that you can modify to accomplish your tasks.

Deploying Infrastructure with Terraform

Terraform is typically used for Infrastructure as Code (IaC) and provisioning resources across various cloud platforms. Terraform itself is not written in Python, and its configurations are written in HashiCorp Configuration Language (HCL), but community-maintained packages such as python-terraform wrap the Terraform CLI so that you can drive it from Python.

To deploy infrastructure using Terraform, you’ll first need to create a Terraform configuration file (e.g., main.tf) that defines your infrastructure resources. Below is a sample Terraform configuration file that deploys a simple AWS EC2 instance by:

- Specifying the AWS provider and the desired region.

  - You should replace “us-west-2” with your desired AWS region.

- Defining an AWS EC2 instance resource using the aws_instance block.

  - You should replace the ami (Amazon Machine Image) and instance_type with values suitable for your use case.

- Assigning a name tag to the EC2 instance using the tags block.

- Defining an output variable called public_ip, which captures the public IP address of the created EC2 instance.

Note that you’ll need to have Terraform installed and configured with your AWS credentials before you can run the script.

```hcl
# Define the AWS provider and region
provider "aws" {
  region = "us-west-2" # Replace with your desired AWS region
}

# Define an AWS EC2 instance
resource "aws_instance" "example" {
  ami           = "ami-0c55b159cbfafe1f0" # Replace with your desired AMI
  instance_type = "t2.micro"              # Replace with your desired instance type

  tags = {
    Name = "example-instance"
  }
}

# Output the public IP address of the EC2 instance
output "public_ip" {
  value = aws_instance.example.public_ip
}
```

To use this Terraform configuration, save it to a .tf file (e.g., main.tf) and then run the following commands in the same directory as the configuration file:

- Initialize Terraform to download the necessary providers and modules:

```shell
terraform init
```

- Plan the infrastructure changes to see what Terraform will create:

```shell
terraform plan
```

- Apply the changes to create the infrastructure:

```shell
terraform apply
```

Terraform will prompt you to confirm the creation of the resources. Enter “yes” to proceed. Once the resources are created, you can access the public IP address of the EC2 instance using the terraform output command:

terraform output public_ip

Remember to replace the AMI, instance type, and other values in the configuration file to match your specific infrastructure requirements. Also, ensure that you have AWS credentials configured either through environment variables or AWS CLI configuration for Terraform to use.
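As an alternative to a wrapper package, you can drive the same init, plan and apply workflow from Python with nothing but the standard library’s subprocess module. The following is a minimal sketch, assuming the terraform binary is on your PATH and main.tf sits in the working directory; the function names and the tfplan file name are hypothetical. Saving the plan to a file lets apply run without the interactive “yes” prompt, because Terraform applies a saved plan as-is.

```python
import json
import subprocess

PLAN_FILE = "tfplan"  # hypothetical name for the saved plan file


def deploy_commands(plan_file=PLAN_FILE):
    """Return the Terraform CLI commands for a non-interactive deployment."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file does not prompt for confirmation
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run(cmd, workdir="."):
    """Run one command in workdir, raise on failure, and return its stdout."""
    result = subprocess.run(
        cmd, cwd=workdir, capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_output(raw_json):
    """Decode the JSON printed by `terraform output -json <name>`."""
    return json.loads(raw_json)


def deploy(workdir="."):
    """Init, plan and apply, then return the public_ip output value."""
    for cmd in deploy_commands():
        run(cmd, workdir)
    raw = run(["terraform", "output", "-json", "public_ip"], workdir)
    return parse_output(raw)
```

Calling deploy() from your own script returns the instance’s public IP as a plain string; because check=True is set, any failing Terraform step raises subprocess.CalledProcessError instead of silently continuing.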

Monitoring and Alerting with Grafana

Grafana is an excellent way to query, visualize, alert on, and explore your metrics, logs, and traces. DevOps users can create dashboards with panels, each representing specific metrics over a set time frame. Grafana’s dashboards are versatile enough to be customized for each project, as required.

To use Grafana for monitoring and alerting, you typically configure data sources, create dashboards, and set up alerting rules within Grafana’s web interface. However, you can also interact with Grafana programmatically using its API to automate all of these tasks. Below is a sample Python script that uses the Grafana API to create a dashboard and set up an alerting rule by:

- Defining functions to create a Grafana dashboard

- Creating an alerting rule using the Grafana API

Before using this script, make sure you have an API key or credentials to access the Grafana API.

```python
import requests

# Grafana API endpoint and API key (a service account token also works)
GRAFANA_API_URL = "http://your-grafana-url/api"
API_KEY = "your-api-key"

HEADERS = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

# Define the dashboard JSON
dashboard_json = {
    "dashboard": {
        "id": None,  # Set to None to create a new dashboard
        "title": "My Monitoring Dashboard",
        "panels": [
            {
                "title": "CPU Usage",
                "type": "timeseries",
                "gridPos": {"x": 0, "y": 0, "w": 12, "h": 8},
                "targets": [
                    {
                        "refId": "A",
                        "expr": "sum(my_metric)",
                        "format": "time_series",
                        "intervalFactor": 2,
                    }
                ],
            }
        ],
    },
    "folderId": 0,  # Folder to save the dashboard in (0 is the General folder)
    "overwrite": True,  # Overwrite an existing dashboard with the same title
}


# Create a new dashboard
def create_dashboard():
    response = requests.post(
        f"{GRAFANA_API_URL}/dashboards/db",
        headers=HEADERS,
        json=dashboard_json,
        timeout=10,
    )
    if response.ok:
        print("Dashboard created successfully.")
    else:
        print(f"Failed to create dashboard. Status code: {response.status_code}")
        print(response.text)


# Define the alerting rule JSON (Grafana unified alerting provisioning format)
alert_rule_json = {
    "title": "High CPU Usage Alert",
    "ruleGroup": "cpu-alerts",
    "folderUID": "your-folder-uid",  # Replace with the UID of the rule's folder
    "condition": "B",  # refId of the expression that decides whether the rule fires
    "for": "5m",  # How long the condition must hold before the alert fires
    "noDataState": "NoData",
    "execErrState": "Error",
    "data": [
        {
            "refId": "A",
            "relativeTimeRange": {"from": 300, "to": 0},  # Last 5 minutes
            "datasourceUid": "your-datasource-uid",  # Replace with your data source UID
            "model": {"refId": "A", "expr": "sum(my_metric)", "instant": True},
        },
        {
            "refId": "B",
            "datasourceUid": "__expr__",  # Grafana server-side expression
            "model": {
                "refId": "B",
                "type": "threshold",
                "expression": "A",
                "conditions": [{"evaluator": {"type": "gt", "params": [95]}}],
            },
        },
    ],
}


# Create an alerting rule
def create_alert_rule():
    response = requests.post(
        f"{GRAFANA_API_URL}/v1/provisioning/alert-rules",
        headers=HEADERS,
        json=alert_rule_json,
        timeout=10,
    )
    if response.ok:
        print("Alerting rule created successfully.")
    else:
        print(f"Failed to create alerting rule. Status code: {response.status_code}")
        print(response.text)


if __name__ == "__main__":
    create_dashboard()
    create_alert_rule()
```

The alerting rule uses Grafana’s unified alerting provisioning API (Grafana 9 and later), where notifications are routed through your contact points and notification policies rather than being configured on the rule itself.

You’ll need to replace a number of placeholders in the above script, including:

- “your-grafana-url” with your Grafana URL

- “your-api-key” with your API key

- Adjust the dashboard_json and alert_rule_json dictionaries to configure the dashboard and alerting rule according to your requirements.

After running the script, you should have a new Grafana dashboard and alerting rule set up in your Grafana instance. For more information on using Grafana, refer to the Grafana documentation.
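If your dashboard needs more than one panel, it can be easier to generate dashboard_json than to edit it by hand. The sketch below is a hypothetical helper (build_dashboard is not part of any Grafana library) that turns a mapping of panel titles to PromQL expressions into the same payload shape the script posts to /api/dashboards/db. It assumes Grafana’s 24-column dashboard grid and lays panels out two per row through each panel’s gridPos.

```python
def build_dashboard(title, queries, folder_id=0, panel_width=12, panel_height=8):
    """Build a payload for Grafana's POST /api/dashboards/db endpoint.

    queries maps panel titles to PromQL expressions. Grafana's grid is
    24 units wide, so the default width of 12 places two panels per row.
    """
    per_row = 24 // panel_width
    panels = []
    for index, (panel_title, expr) in enumerate(queries.items()):
        panels.append({
            "id": index + 1,
            "title": panel_title,
            "type": "timeseries",
            "gridPos": {
                "x": (index % per_row) * panel_width,
                "y": (index // per_row) * panel_height,
                "w": panel_width,
                "h": panel_height,
            },
            "targets": [{"refId": "A", "expr": expr}],
        })
    return {
        "dashboard": {"id": None, "title": title, "panels": panels},
        "folderId": folder_id,
        "overwrite": True,
    }
```

You could then pass the returned dictionary to requests.post as the json= argument in place of the hand-written dashboard_json; the metric names you supply are, of course, specific to your own data source.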

Deploying Applications with Ansible

Ansible is an open-source automation platform that uses Python as its underlying language. It’s widely used for configuration management, application deployment and task automation across a wide range of systems and cloud providers.

Deploying an application with Ansible requires creating Ansible Playbooks, which are YAML files that define a set of tasks to be executed on target hosts. Below is a simple example of an Ansible Playbook that deploys a basic web application to a target server.

The playbook assumes you have Ansible installed and configured to connect to your target host(s) using SSH keys, and:

- Specifies the name of the playbook and the target host(s) (target_server in this case).

  - Make sure to replace target_server with the actual hostname or IP address of your target server.

  - The become: yes line indicates that Ansible should use sudo or equivalent to execute commands with elevated privileges.

- Defines a series of tasks. In this example, the tasks install Python and pip (adjust for your target OS), copy your application code to a specified directory, and ensure the web server (Apache2 in this case) is running.

- Defines, in the handlers section, a handler that restarts the web server to apply changes. It is triggered only when the copy task actually changes files on the target server.

```yaml
---
- name: Deploy Web Application
  hosts: target_server
  become: yes  # Use 'sudo' to execute commands with elevated privileges

  tasks:
    - name: Install required packages
      apt:
        name:
          - python3
          - python3-pip
        state: present  # Ensure the packages are installed
      when: ansible_os_family == "Debian"  # Adjust for your target OS

    - name: Copy application code to the server
      copy:
        src: /path/to/your/application/code
        dest: /opt/myapp  # Destination directory on the target server
      notify: Restart Web Service

    - name: Ensure web server is running
      service:
        name: apache2  # Adjust for your web server (e.g., nginx); assumes it is installed
        state: started

  handlers:
    - name: Restart Web Service
      service:
        name: apache2  # Adjust for your web server
        state: restarted
```

To run this playbook, save it to a file (e.g., deploy_app.yml) and then run the ansible-playbook command:

ansible-playbook deploy_app.yml
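Before touching production hosts, it is often worth doing a dry run. ansible-playbook supports --check (report what would change without changing it) and --diff (show before/after differences for changed files), as well as -i to choose an inventory and --limit to target a subset of hosts. The hypothetical helper below assembles those flags into an argument list for subprocess.run; the inventory file name in the usage note is an assumption.

```python
import subprocess


def playbook_command(playbook, inventory=None, check=False, diff=False, limit=None):
    """Assemble an ansible-playbook command line as a list of arguments."""
    cmd = ["ansible-playbook", playbook]
    if inventory:
        cmd += ["-i", inventory]
    if limit:
        cmd += ["--limit", limit]
    if check:
        cmd.append("--check")  # Dry run: report changes without making them
    if diff:
        cmd.append("--diff")  # Show before/after differences for changed files
    return cmd


def run_playbook(playbook, **options):
    """Run the playbook and return ansible-playbook's exit code."""
    return subprocess.run(playbook_command(playbook, **options)).returncode
```

For example, run_playbook("deploy_app.yml", inventory="inventory.ini", check=True, diff=True) previews the deployment, and the same call without check=True applies it.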

Log Analysis with the ELK Stack

Elasticsearch, Logstash and Kibana (more commonly referred to as the ELK stack) can be integrated using Python into a reporting tool that can collect and process data from multiple data sources. In this way, DevOps can create centralized logging in order to identify problems with the servers and/or applications they run.

- Elasticsearch is used for storing and searching logs

- Logstash is used for collecting, parsing and transforming logs before sending them on to Elasticsearch

- Kibana is a visualization tool with a web GUI, which can be served behind a reverse proxy such as Nginx or Apache

Implementing an ELK stack for log analysis involves several components and configurations, and it’s typically done using configuration files and a series of commands. Here’s a simplified example of how you can set up a basic ELK stack for log analysis using Python to demonstrate the process.

Please note that this is a simple example. In a production environment, you would also need to consider log shipping, security and scaling using far more complex configurations.

The first step is to create a Logstash Configuration File (e.g., logstash.conf) in Logstash’s configuration directory. This file defines how Logstash should process and send logs to Elasticsearch. Here’s a simple example:

```
input {
  beats {
    port => 5044
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    index => "logs-%{+YYYY.MM.dd}"
  }
}
```

This configuration:

- Listens for logs on port 5044

- Uses a Grok pattern to parse Apache log messages

- Sends them to Elasticsearch with a daily index pattern.

Now you can start ingesting logs into Elasticsearch using Python:

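```python
from elasticsearch import Elasticsearch

# Connect to a local Elasticsearch node
es = Elasticsearch(hosts=["http://localhost:9200"])

# A sample Apache access log entry
log_data = {
    "message": "127.0.0.1 - - [10/Sep/2023:08:45:23 +0000] 'GET /index.html' 200 1234",
}

# Index the document (elasticsearch-py 8.x takes it via the document= argument)
es.index(index="logs-2023.09.10", document=log_data)
```

Replace log_data with your log entries. Note that indexing documents directly like this bypasses Logstash, so the grok filter above is not applied; to parse entries with that pipeline, ship them to Logstash (for example, with Filebeat) instead.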
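If you do want to index straight from Python, you can do some of the parsing yourself. The sketch below uses a regular expression for the Apache “combined” log format, with group names loosely modeled on the fields of Logstash’s COMBINEDAPACHELOG grok pattern; it is a simplified stand-in, not grok itself. Each parsed line becomes a bulk action for a daily logs-YYYY.MM.dd index named after the log entry’s own UTC date (note that Logstash, without a date filter, dates events by ingestion time instead). The function names are hypothetical; the only client call is elasticsearch.helpers.bulk.

```python
import re
from datetime import datetime, timezone

# Simplified regex for the Apache "combined" log format
COMBINED_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) (?P<httpversion>[^"]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Parse one combined-format line into a dict, or return None if it does not match."""
    match = COMBINED_LOG.match(line)
    if match is None:
        return None
    doc = match.groupdict()
    doc["response"] = int(doc["response"])
    doc["bytes"] = 0 if doc["bytes"] == "-" else int(doc["bytes"])
    # Apache timestamps look like 10/Sep/2023:08:45:23 +0000
    ts = datetime.strptime(doc.pop("timestamp"), "%d/%b/%Y:%H:%M:%S %z")
    doc["@timestamp"] = ts.astimezone(timezone.utc).isoformat()
    return doc


def bulk_actions(lines):
    """Yield bulk-index actions, one logs-YYYY.MM.DD index per UTC day."""
    for line in lines:
        doc = parse_line(line)
        if doc is None:
            continue  # Skip lines that are not in combined format
        day = datetime.fromisoformat(doc["@timestamp"])
        yield {"_index": f"logs-{day:%Y.%m.%d}", "_source": doc}


def ship(es, lines):
    """Send parsed lines to Elasticsearch with the client's bulk helper."""
    from elasticsearch import helpers  # Imported here so parsing works without the client
    return helpers.bulk(es, bulk_actions(lines))
```

With the es client from the previous example, ship(es, open("access.log")) would index every parseable line of a hypothetical access.log file in batches rather than one request per entry.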

Finally, you can access Kibana by opening a web browser and navigating to http://localhost:5601. Using this web GUI, you can proceed to create visualizations, dashboards, and perform log analysis.

Implementing an ELK stack is a significant undertaking, beyond the scope of this simple example, which is only meant to get you started. For more information on creating an ELK stack, refer to the DigitalOcean tutorial.

Configuration Management with Ansible

To use Ansible for configuration management you will need to create Ansible Playbooks that define the desired state of your systems, and then execute tasks to achieve that state. Below is a simple example of a Python script that uses Ansible to manage the configuration of a group of servers.

Before running the script, make sure you have Ansible configured to connect to your target servers using SSH keys. The following script:

- Defines the paths for the Ansible inventory file (inventory.ini) and playbook file (configure_servers.yml).

- Creates the Ansible playbook content as a YAML string. In this example, the playbook ensures that Apache2 is installed and the Apache2 service is running on the target servers.

- Creates the Ansible inventory file, listing the target servers under the [web_servers] group.

- Creates the Ansible playbook file (configure_servers.yml) and writes the playbook content to it.

- Runs the Ansible playbook using the subprocess.run function, specifying the playbook file and inventory file as arguments.

- Cleans up the temporary inventory and playbook files.

```python
import os
import subprocess

# Define the Ansible inventory file and playbook file paths
INVENTORY_FILE = "inventory.ini"
PLAYBOOK_FILE = "configure_servers.yml"

# Define the Ansible playbook YAML content
PLAYBOOK_CONTENT = """\
---
- name: Configure Servers
  hosts: web_servers
  become: yes  # Use 'sudo' to execute tasks with elevated privileges
  tasks:
    - name: Ensure Apache2 is installed
      apt:
        name: apache2
        state: present
      when: ansible_os_family == "Debian"  # Adjust for your target OS

    - name: Ensure Apache2 service is running
      service:
        name: apache2
        state: started
"""

# Create the Ansible inventory file (ansible_host replaces the deprecated ansible_ssh_host)
with open(INVENTORY_FILE, "w") as inventory:
    inventory.write("[web_servers]\n")
    inventory.write("server1 ansible_host=server1.example.com\n")
    inventory.write("server2 ansible_host=server2.example.com\n")
    # Add more servers as needed

# Create the Ansible playbook file
with open(PLAYBOOK_FILE, "w") as playbook:
    playbook.write(PLAYBOOK_CONTENT)

try:
    # Run the Ansible playbook; check=True raises CalledProcessError if it fails
    subprocess.run(["ansible-playbook", PLAYBOOK_FILE, "-i", INVENTORY_FILE], check=True)
finally:
    # Clean up the temporary files even if the playbook run fails
    os.remove(INVENTORY_FILE)
    os.remove(PLAYBOOK_FILE)
```

Container Orchestration with Kubernetes

The Kubernetes Python client library (the kubernetes package, whose API classes live in kubernetes.client) is one of the easiest ways to interact with a Kubernetes cluster using Python.

The following is a simple example that demonstrates how you can interact with a Kubernetes cluster and perform basic container orchestration tasks. The script:

- Imports the necessary modules from the Kubernetes Python client library.

- Loads the Kubernetes configuration from the default config file (~/.kube/config).

- Creates Kubernetes API clients for the AppsV1 API group (Deployments) and the CoreV1 API group (Pods).

- Defines a simple Deployment object for an Nginx container with two replicas.

- Creates the Deployment, lists pods in the default namespace, and then deletes the Deployment.

```python
from kubernetes import client, config
from kubernetes.client.rest import ApiException

# Load the Kubernetes configuration (e.g., from ~/.kube/config)
config.load_kube_config()

# Deployments live in the AppsV1 API group; Pods live in the CoreV1 API group
apps_api = client.AppsV1Api()
core_api = client.CoreV1Api()

# Define a Deployment object
deployment = client.V1Deployment(
    api_version="apps/v1",
    kind="Deployment",
    metadata=client.V1ObjectMeta(name="sample-deployment"),
    spec=client.V1DeploymentSpec(
        replicas=2,
        selector=client.V1LabelSelector(match_labels={"app": "sample-app"}),
        template=client.V1PodTemplateSpec(
            metadata=client.V1ObjectMeta(labels={"app": "sample-app"}),
            spec=client.V1PodSpec(
                containers=[
                    client.V1Container(
                        name="sample-container",
                        image="nginx:latest",
                        ports=[client.V1ContainerPort(container_port=80)],
                    )
                ]
            ),
        ),
    ),
)

try:
    # Create the Deployment
    apps_api.create_namespaced_deployment(namespace="default", body=deployment)
    print("Deployment created successfully.")

    # List pods in the default namespace (new pods may still be starting)
    pods = core_api.list_namespaced_pod(namespace="default")
    print("List of Pods in the default namespace:")
    for pod in pods.items:
        print(f"Pod Name: {pod.metadata.name}")

    # Delete the Deployment
    apps_api.delete_namespaced_deployment(name="sample-deployment", namespace="default")
    print("Deployment deleted successfully.")
except ApiException as e:
    print(f"Error: {e}")
```

Ensure that you have proper access and permissions to interact with your cluster. You’ll also want to adjust the script according to your specific requirements. For more information on other API functionality you can take advantage of, refer to the Kubernetes documentation.
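Scaling is one of the container orchestration tasks mentioned earlier. A minimal sketch, assuming an AppsV1Api client like the one above: patch the Deployment’s scale subresource with patch_namespaced_deployment_scale, then poll read_namespaced_deployment_status until the ready replica count catches up. Both functions below are hypothetical helpers; they take the API object as a parameter so they can be exercised without a cluster, and the timeout and polling interval defaults are arbitrary.

```python
import time


def scale_deployment(apps_api, name, replicas, namespace="default"):
    """Set a Deployment's replica count through its scale subresource."""
    body = {"spec": {"replicas": replicas}}
    return apps_api.patch_namespaced_deployment_scale(
        name=name, namespace=namespace, body=body
    )


def wait_until_ready(apps_api, name, namespace="default", timeout=120, interval=2):
    """Poll the Deployment status until all desired replicas report ready.

    Returns True if the Deployment became ready before the timeout, else False.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        dep = apps_api.read_namespaced_deployment_status(name=name, namespace=namespace)
        desired = dep.spec.replicas or 0
        ready = dep.status.ready_replicas or 0  # None until a pod becomes ready
        if ready >= desired:
            return True
        time.sleep(interval)
    return False
```

Since the script above deletes sample-deployment at the end, you would call scale_deployment(apps_api, "sample-deployment", 4) and then wait_until_ready(apps_api, "sample-deployment") before the delete step.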

Network Security with Scapy

Scapy is a powerful packet manipulation tool that allows you to create, send, capture and analyze network packets. It’s often used for network security tasks like packet crafting and network scanning.

The following script uses Scapy to perform a basic TCP SYN port scan and analyze the responses. Only scan hosts you own or are explicitly authorized to test, and note that Scapy needs root (or administrator) privileges to send raw packets. With that in place, you can use the script to:

- Import Scapy’s functionalities.

- Define the target IP address to scan (target_ip), as well as the range of ports (start_port to end_port) to scan.

- Initialize an empty list called open_ports to store the ports that are found to be open.

- Use a “for” loop to iterate through the specified port range, and create a TCP SYN packet for each port.

- Send the SYN packet to the target IP address and port, and then wait for a response using the sr1 function.

- If a response is received and the TCP flags in the response packet indicate that the port is open (SYN-ACK), add the port number to the open_ports list and print a message.

- Print the list of open ports.

```python
from scapy.all import IP, TCP, RandShort, sr1

# Define the target host. sr1() returns only the first reply, so scan one host
# at a time rather than a whole range such as 192.168.1.0/24.
target_ip = "192.168.1.1"

# Define the range of ports to scan
start_port = 1
end_port = 1024

# Initialize a list to store open ports
open_ports = []

# Perform a port scan using Scapy (sending raw packets requires root privileges)
for port in range(start_port, end_port + 1):
    # Create a TCP SYN packet from a random source port
    packet = IP(dst=target_ip) / TCP(sport=RandShort(), dport=port, flags="S")

    # Send the packet and wait up to 1 second for a single response
    response = sr1(packet, timeout=1, verbose=0)

    # A SYN-ACK reply (TCP flags 0x12) indicates an open port
    if response is not None and response.haslayer(TCP):
        if response.getlayer(TCP).flags == 0x12:
            open_ports.append(port)
            print(f"Port {port} is open.")

# Print the list of open ports
if open_ports:
    print("Open ports:", open_ports)
else:
    print("No open ports found.")
```

The above code provides a basic example of how to use Scapy for network port scanning, but you can also use Scapy to perform more advanced scanning techniques. For more information, read the Scapy docs.
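The 0x12 check works because TCP flags are individual bits: SYN is 0x02 and ACK is 0x10, so a SYN-ACK is 0x02 | 0x10 = 0x12. A closed port typically answers with a RST (often RST-ACK, 0x14), and no answer within the timeout usually means a firewall dropped the probe or the host is down. The small, hypothetical helper below encodes that reasoning in plain Python so the classification can be read and tested without sending packets; in the Scapy loop you would pass it int(response[TCP].flags), or None when sr1 returns None. It is a simplification: real scanners also treat ICMP unreachable replies as filtered.

```python
# TCP flag bits (RFC 793)
FIN, SYN, RST, PSH, ACK = 0x01, 0x02, 0x04, 0x08, 0x10
SYN_ACK = SYN | ACK  # 0x12


def classify_port(flags):
    """Classify a SYN-scan result from the TCP flags of the reply.

    flags is the integer flag field of the TCP reply, or None if no reply arrived.
    """
    if flags is None:
        return "filtered"  # No reply: probe dropped by a firewall, or host down
    if flags & RST:
        return "closed"  # RST or RST-ACK: nothing is listening on the port
    if (flags & SYN_ACK) == SYN_ACK:
        return "open"  # SYN-ACK: the port accepted the handshake
    return "unknown"
```

Returning a label instead of a boolean also lets you keep track of filtered ports, which the original script silently treats the same as closed ones.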

Cloud Automation for AWS with Boto3

Boto3 is the Python SDK for Amazon Web Services (AWS). It allows you to interact with AWS services programmatically, making it an essential tool for automating AWS-related tasks.

The following Python code uses boto3 to perform basic AWS automation tasks, such as listing EC2 instances and creating an S3 bucket. As long as you have your AWS credentials properly configured and your AWS region designated, you can use the script to:

- Import the boto3 library and initialize AWS clients for EC2 and S3 in the desired AWS region.

- Employ the list_ec2_instances function, which uses the describe_instances method to list all EC2 instances in the region and prints their instance IDs and states.

- Use the create_s3_bucket function to create an S3 bucket with the specified name using the create_bucket method.

  - Make sure to replace “my-unique-bucket-name” with your desired bucket name.

- Finally, in the if __name__ == "__main__": block, call the functions to list EC2 instances and create an S3 bucket.

```python
import boto3
from botocore.exceptions import ClientError

# Replace 'us-east-1' with your desired AWS region
REGION = "us-east-1"

# Initialize Boto3 AWS clients
ec2_client = boto3.client("ec2", region_name=REGION)
s3_client = boto3.client("s3", region_name=REGION)


# List EC2 instances (the paginator follows NextToken across large result sets)
def list_ec2_instances():
    paginator = ec2_client.get_paginator("describe_instances")
    for page in paginator.paginate():
        for reservation in page["Reservations"]:
            for instance in reservation["Instances"]:
                print(f"Instance ID: {instance['InstanceId']}, State: {instance['State']['Name']}")


# Create an S3 bucket
def create_s3_bucket(bucket_name):
    kwargs = {"Bucket": bucket_name}
    # Outside us-east-1, S3 requires the region as a LocationConstraint
    if REGION != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": REGION}
    try:
        s3_client.create_bucket(**kwargs)
        print(f"S3 bucket '{bucket_name}' created successfully.")
    except ClientError as e:
        print(f"Error creating S3 bucket: {e}")


if __name__ == "__main__":
    # List EC2 instances
    print("Listing EC2 instances:")
    list_ec2_instances()

    # Create an S3 bucket
    bucket_name = "my-unique-bucket-name"  # Replace with your desired bucket name
    print(f"Creating S3 bucket '{bucket_name}':")
    create_s3_bucket(bucket_name)
```

The above code provides a basic example of using Boto3 for AWS cloud automation. Depending on your use case, you can extend it to perform more advanced tasks and operations with various AWS services. For more information, refer to our How to Drive your Cloud Implementation using Python blog.
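A common next step is to act only on a subset of instances, such as the running instances tagged for a particular environment. The sketch below builds the Filters argument for describe_instances using EC2’s tag:<key> and instance-state-name filters, then collects instance IDs across paginated results. The helper names and the Environment tag in the usage note are hypothetical.

```python
def tag_filters(tags, states=("running",)):
    """Build the Filters argument for EC2 describe_instances."""
    filters = [{"Name": f"tag:{key}", "Values": [value]} for key, value in tags.items()]
    if states:
        filters.append({"Name": "instance-state-name", "Values": list(states)})
    return filters


def instance_ids(pages):
    """Collect instance IDs from describe_instances response pages."""
    return [
        instance["InstanceId"]
        for page in pages
        for reservation in page["Reservations"]
        for instance in reservation["Instances"]
    ]


def find_instances(ec2_client, tags):
    """Return IDs of running instances that match every tag in tags."""
    paginator = ec2_client.get_paginator("describe_instances")
    return instance_ids(paginator.paginate(Filters=tag_filters(tags)))
```

For example, ids = find_instances(ec2_client, {"Environment": "staging"}) followed by ec2_client.stop_instances(InstanceIds=ids) (when ids is not empty) would stop every running staging instance; filtering on the server side keeps the responses small.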

Conclusions – Python for DevOps

A DevOps engineer’s job is never done, which is why automation is key. Python has become the go-to language for automating tasks in general, and DevOps tasks in particular, from infrastructure provisioning to API-driven deployments to CI/CD workflows. No matter the use case, the refrain is always the same: “there’s a Python tool for that.”

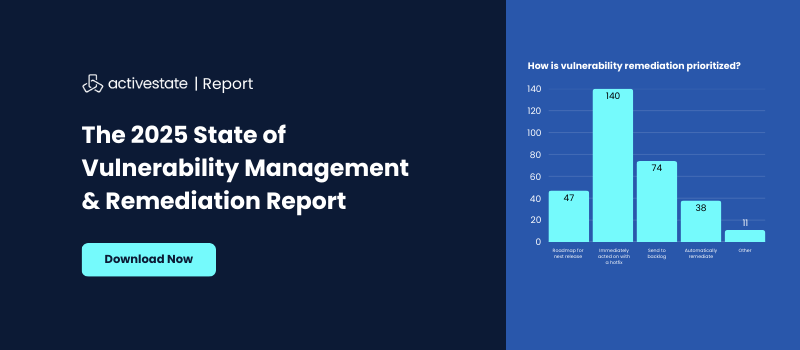

Key to the success of any DevOps team is the ability to quickly create and implement scripts in a consistent manner. Using the ActiveState Platform to create and share your Python environment with your team is the best way to ensure consistency no matter what OS they use.


Next Steps:

Create a free ActiveState account and see how easy it is to generate a chain of custody for your software supply chain