- Manual experimentation, which can be viable when implementing simple algorithms like GridSearch or RandomSearch.

- Specialized libraries that are compatible with multiple Machine Learning (ML) frameworks, which make the task far more scalable and save time and effort.
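As a rough illustration of the manual route, both basic algorithms fit in a few lines of plain Python. This is only a sketch: `validation_loss` and the hyperparameter names `learning_rate` and `depth` are made-up stand-ins for training and scoring a real model.

```python
import itertools
import random


def validation_loss(learning_rate, depth):
    """Toy stand-in for training a model and returning its validation loss."""
    return (learning_rate - 0.1) ** 2 + (depth - 4) ** 2 / 100


def grid_search(grid):
    """Evaluate every combination of the listed values and keep the best."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


def random_search(bounds, n_trials, seed=0):
    """Sample each hyperparameter uniformly within its bounds n_trials times."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(*bounds["learning_rate"]),
            "depth": rng.randint(*bounds["depth"]),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


grid_best, grid_loss = grid_search(
    {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]}
)
rand_best, rand_loss = random_search(
    {"learning_rate": (0.01, 1.0), "depth": (2, 8)}, n_trials=20
)
```

The grid version evaluates 9 fixed combinations, while the random version spends its 20 evaluations anywhere inside the bounds. Neither uses earlier results to decide where to look next, which is the gap the libraries in this list try to close.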

Let’s check out some of the most interesting Python libraries that can help you achieve model hyperparameter optimization.

1. The Bayesian-Optimization Library

The bayesian-optimization library takes black box functions and:

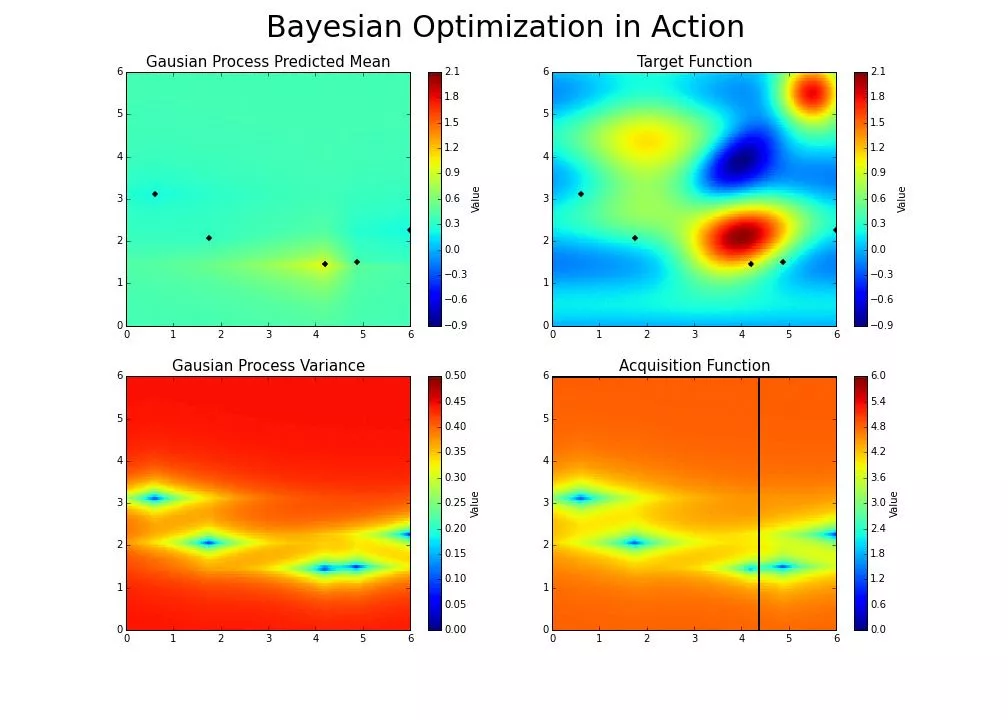

- Optimizes them by creating a Gaussian process

- Balances the exploration in the search space, as well as the exploitation of results obtained from previous iterations.

- Allows you to dynamically pan and zoom the bounds of the problem to improve convergence.

Another interesting feature is the implementation of the observer pattern to save the optimization progress as a JSON object that can be loaded later to instantiate a new optimization. This way, partial searches can be performed and then easily resumed.

The following image shows the evolution of an optimization done using the bayesian-optimization library:

Exploration and Exploitation with the Bayesian-Optimization Library

Be aware that this package unfortunately doesn’t integrate with any common ML frameworks or libraries, which limits the scope of the hyperparameter optimization use cases it can address. In addition, it doesn’t support parallel or distributed workloads, which can impact its usefulness in some contexts.
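The save-and-resume behavior described earlier in this section can be sketched without the library itself. The example below is an illustration under stated assumptions, not the bayesian-optimization API: `RandomOptimizer`, `JSONLogger`, and `black_box` are hypothetical names, random sampling stands in for the Gaussian process, and progress is written as one JSON record per line.

```python
import json
import os
import random
import tempfile


class JSONLogger:
    """Observer that appends each evaluated point to a JSON-lines file."""

    def __init__(self, path):
        self.path = path

    def update(self, params, target):
        with open(self.path, "a", encoding="utf-8") as handle:
            handle.write(json.dumps({"params": params, "target": target}) + "\n")


class RandomOptimizer:
    """Toy maximizer (random sampling, no Gaussian process) with observers."""

    def __init__(self, func, bounds, seed=0):
        self.func = func
        self.bounds = bounds
        self.rng = random.Random(seed)
        self.history = []
        self.observers = []

    def subscribe(self, observer):
        self.observers.append(observer)

    def register(self, params, target):
        """Record a known result without re-evaluating the function."""
        self.history.append((params, target))

    def load(self, path):
        """Replay a saved log so a new optimizer starts from past results."""
        with open(path, encoding="utf-8") as handle:
            for line in handle:
                record = json.loads(line)
                self.register(record["params"], record["target"])

    def maximize(self, n_iter):
        for _ in range(n_iter):
            params = {
                name: self.rng.uniform(low, high)
                for name, (low, high) in self.bounds.items()
            }
            target = self.func(**params)
            self.register(params, target)
            for observer in self.observers:  # notify every subscriber
                observer.update(params, target)

    @property
    def best(self):
        return max(self.history, key=lambda item: item[1])


def black_box(x, y):
    return -((x - 1) ** 2) - (y + 2) ** 2


log_path = os.path.join(tempfile.mkdtemp(), "progress.json")
bounds = {"x": (-3, 3), "y": (-3, 3)}

first = RandomOptimizer(black_box, bounds, seed=1)
first.subscribe(JSONLogger(log_path))
first.maximize(n_iter=5)

# Later, possibly in another process: reload the log and keep searching.
resumed = RandomOptimizer(black_box, bounds, seed=2)
resumed.load(log_path)
resumed.subscribe(JSONLogger(log_path))
resumed.maximize(n_iter=5)
```

Because the optimizer only notifies observers and never writes files itself, logging stays optional and other observers could be attached without touching the search loop.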

2. Scikit-Optimize

When working with a large number of parameters, the basic grid search and random search algorithms for hyperparameter tuning provided by the popular scikit-learn toolkit (GridSearchCV and RandomizedSearchCV) are not efficient. Instead, try working with the scikit-optimize library (also known as skopt), which uses a Bayesian optimization approach.

Skopt’s BayesSearchCV can be used as a drop-in replacement for scikit-learn’s GridSearchCV. It supports several models with different search spaces and numbers of evaluations (per model class) to be optimized.

Skopt also includes utilities for comparing and visualizing the partial results of distinct optimization algorithms, which makes it a great companion to the standard scikit-learn modeling workflow. However, its narrow scope leaves out other ML frameworks, which is its main drawback.

3. GPyOpt

The University of Sheffield developed GPyOpt, an optimization library that implements Bayesian optimization on top of the Gaussian process models provided by GPy (another of the University’s libraries).

Like scikit-optimize, this tool can tune scikit-learn models out of the box, but it doesn’t provide drop-in replacement methods. Instead, you have to use GPyOpt objects to optimize each model independently. The good news is that GPyOpt can parallelize a task to use all of the local processor cores that are available, which is a nice addition (especially when working with large search spaces).

GPyOpt also comes with other goodies to optimize hyperparameters. It has a notable capacity to use different cost evaluation functions, but as with scikit-optimize, its narrow integration support for modeling libraries is a major drawback.

4. Hyperopt

Hyperopt is a distributed hyperparameter optimization library that implements three optimization algorithms:

- RandomSearch

- Tree-Structured Parzen Estimators (TPEs)

- Adaptive TPEs

Eventually, Hyperopt will include the ability to optimize using Bayesian algorithms through Gaussian processes, but that capability has yet to be implemented. In the meantime, Hyperopt’s strongest selling point is its optional capability to distribute its optimization via Apache Spark or MongoDB clusters (which might also be a red flag).

It’s also highly framework-agnostic, being able to integrate seamlessly with:

- Scikit-learn via Hyperopt-sklearn

- Neural networks via Hyperopt-nnet

- Keras via Hyperas

- Theano’s convolutional networks via Hyperopt-convnet

Thus, with this series of extensions, there is a wide spectrum of applications for Hyperopt.
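To give a feel for how the TPE algorithm listed above works, here is a heavily simplified, one-dimensional sketch in plain Python. It is not Hyperopt’s implementation: the fixed kernel bandwidth, the `gamma` split fraction, and every function name are assumptions chosen for brevity. The core idea is kept, though: split past trials into “good” and “bad” groups, model each with a Parzen (kernel density) estimator, and evaluate next the candidate with the highest good-to-bad density ratio.

```python
import math
import random


def kde(x, samples, bandwidth):
    """Average of Gaussian kernels centred on the samples (a Parzen estimator)."""
    norm = bandwidth * math.sqrt(2 * math.pi)
    total = sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)
    return total / (norm * len(samples))


def tpe_minimize(loss_fn, low, high, n_startup=10, n_iter=30,
                 gamma=0.25, n_candidates=24, seed=0):
    """Simplified 1-D TPE loop; n_startup must be at least 2."""
    rng = random.Random(seed)
    bandwidth = (high - low) / 10  # fixed bandwidth: a simplifying assumption
    history = []
    for _ in range(n_startup):  # random warm-up trials
        x = rng.uniform(low, high)
        history.append((x, loss_fn(x)))
    for _ in range(n_iter):
        ranked = sorted(history, key=lambda item: item[1])
        n_good = max(1, int(gamma * len(ranked)))
        good = [x for x, _ in ranked[:n_good]]
        bad = [x for x, _ in ranked[n_good:]]
        # Draw candidates from the "good" density l(x) ...
        candidates = []
        for _ in range(n_candidates):
            x = rng.gauss(rng.choice(good), bandwidth)
            candidates.append(min(high, max(low, x)))
        # ... and keep the one that maximizes l(x) / g(x).
        chosen = max(
            candidates,
            key=lambda x: kde(x, good, bandwidth) / (kde(x, bad, bandwidth) + 1e-12),
        )
        history.append((chosen, loss_fn(chosen)))
    return min(history, key=lambda item: item[1])


best_x, best_loss = tpe_minimize(lambda x: (x - 2) ** 2, low=-5, high=5)
```

Unlike a Gaussian process, this approach never models the loss surface directly. It only models where good and bad configurations tend to fall, which keeps each iteration cheap.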

5. SHERPA

The SHERPA optimization library includes implementations of the common grid and random search algorithms, along with GPyOpt Bayesian optimization based on Gaussian process models and the Asynchronous Successive Halving Algorithm (ASHA), among others.

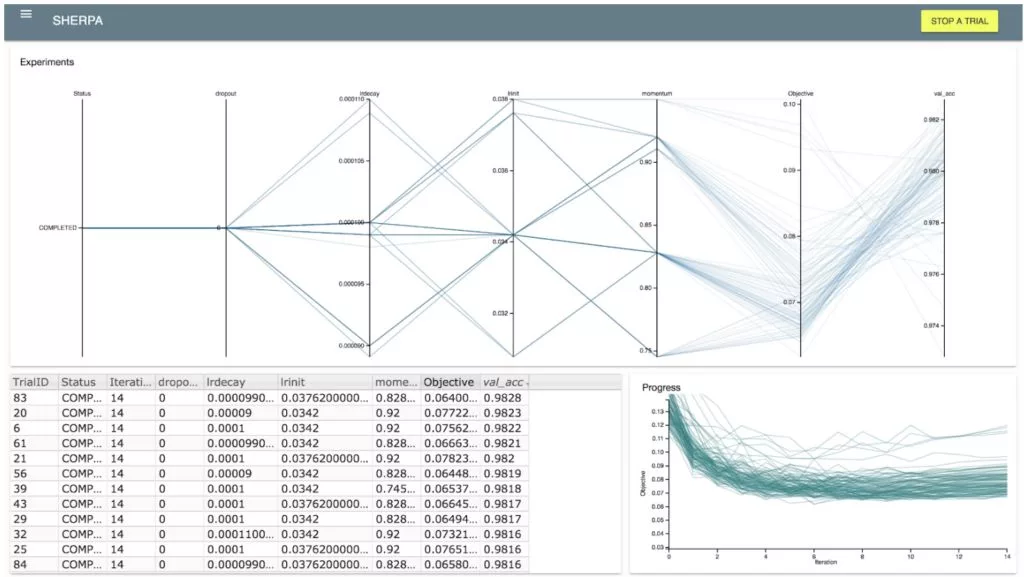

SHERPA is more comprehensive than other alternatives, and it also includes a WebUI to support optimization tasks:

SHERPA’s Dashboard Showing the Local Search Algorithm Optimization Results

SHERPA naturally integrates with Keras, and also supports parallel optimization out of the box via its integration with MongoDB. However, this dependency might be seen as an area that needs improvement, given the extra work required for cluster administration. Integration with other frameworks for ML might also be useful.

6. Optuna

Optuna takes an interesting approach to hyperparameter optimization, using an imperative, define-by-run user API. This means that the search space is not declared up front. Instead, Optuna constructs the search space for the hyperparameters dynamically, as the objective function runs.
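The define-by-run idea can be illustrated with a small plain-Python sketch. The `Trial` class below is a hypothetical stand-in, not Optuna’s own class (only the `suggest_*` method names are modeled on Optuna’s), and the losses are toy values. The point is that which hyperparameters exist for a given trial depends on the code path the objective actually takes.

```python
import random


class Trial:
    """Hypothetical stand-in for a trial object; not Optuna's actual class."""

    def __init__(self, rng):
        self.rng = rng
        self.params = {}

    def suggest_categorical(self, name, choices):
        self.params[name] = self.rng.choice(choices)
        return self.params[name]

    def suggest_float(self, name, low, high):
        self.params[name] = self.rng.uniform(low, high)
        return self.params[name]

    def suggest_int(self, name, low, high):
        self.params[name] = self.rng.randint(low, high)
        return self.params[name]


def objective(trial):
    """The search space is whatever suggest_* calls actually execute."""
    model = trial.suggest_categorical("model", ["svm", "forest"])
    if model == "svm":
        c = trial.suggest_float("svm_c", 0.1, 10.0)
        return abs(c - 1.0)  # toy loss
    depth = trial.suggest_int("forest_depth", 2, 16)
    return abs(depth - 8) / 8  # toy loss


def run_study(n_trials, seed=0):
    """Run the objective repeatedly, recording each trial's loss and parameters."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        trial = Trial(rng)
        loss = objective(trial)
        trials.append((loss, trial.params))
    return trials


trials = run_study(n_trials=20)
best_loss, best_params = min(trials, key=lambda item: item[0])
```

A trial that picks `"svm"` never creates a `forest_depth` parameter, and vice versa, so conditional search spaces need no special syntax: an ordinary `if` statement is enough.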

Search algorithms include:

- Classic grid/random searches

- Tree-Structured Parzen Estimators (TPEs)

- Partially fixed hyperparameters

- A quasi-Monte Carlo sampling algorithm

Optuna also includes pruning algorithms to stop searching through unpromising branches of the search space.
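One common way to prune is successive halving: give every configuration a small training budget, discard the worst performers, and repeat with a larger budget. The sketch below is a plain-Python illustration of the synchronous variant, not Optuna’s implementation. `partial_score` is a made-up learning curve, and `eta` (the fraction kept per round is 1/`eta`) is an assumed parameter name.

```python
import random


def partial_score(config, budget):
    """Toy learning curve: loss after `budget` epochs for a given learning rate."""
    floor = (config["learning_rate"] - 0.1) ** 2  # best loss this config can reach
    return floor + 1.0 / budget  # loss shrinks as the budget grows


def successive_halving(configs, min_budget=1, eta=2):
    """Each round keeps the best 1/eta of the configs and multiplies the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda cfg: partial_score(cfg, budget))
        keep = max(1, len(ranked) // eta)
        survivors = ranked[:keep]  # everything past `keep` is pruned
        budget *= eta
    return survivors[0]


rng = random.Random(0)
candidates = [{"learning_rate": rng.uniform(0.001, 1.0)} for _ in range(16)]
winner = successive_halving(candidates)
```

With 16 candidates and `eta=2`, the rounds shrink the field to 8, 4, 2, and finally 1, so most of the training budget goes to configurations that already looked promising. In this toy curve the ranking never changes with budget. Real learning curves are noisier, which is why pruning can occasionally discard a configuration that would have won.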

Parallelization of optimization tasks is done via integration with relational databases like MySQL. Visualizations of the optimization history, hyperparameter importance, and supplementary materials are available through the Web dashboard.

Optuna integrates with both the PyTorch and FastAI ML frameworks. Its lack of integration with other common ML frameworks might be its only drawback.

7. Ray Tune

Ray Tune is the hyperparameter tuning library of Ray, a unified framework for ML that provides a scalable set of utilities covering three main use cases:

- Scaling ML Workloads: Ray includes libraries for processing data, training models and reinforcement learning (and more) in a distributed way.

- Building Distributed Applications: When using Ray’s parallelization API you don’t need to change your code to run it on a machine cluster.

- Deploying Large-scale Workloads: Ray’s cluster manager is compatible with Kubernetes, YARN, and Slurm clusters that can be deployed on major public cloud providers.

Ray Tune’s hyperparameter optimization capabilities are provided via integration with several implementations from popular libraries, including:

- Bayesian-optimization

- Scikit-optimize

- Hyperopt

- Optuna

In addition, Ray Tune supports integration with all major ML modeling frameworks, including PyTorch, TensorFlow, scikit-learn, Keras, and XGBoost.

Ray Tune is part of an ML/AI-oriented tool ecosystem that includes well-established tools like spaCy, Hugging Face, Ludwig, and Dask.

8. Microsoft’s NNI

Microsoft has developed an AutoML solution called Neural Network Intelligence (NNI), which provides a full set of hyperparameter tuning options, including:

- Algorithms for exhaustive searches (grid and random searches)

- Heuristic searches (anneal, evolutionary, and hyperband searches)

- Bayesian optimization (BOHB, DNGO, and Metis, among others)

The suite is compatible with several ML frameworks (including PyTorch, TensorFlow, scikit-learn, and Caffe2), and can be used through a Command Line Interface (CLI) or a very powerful WebUI.

Complementary tasks such as experiment management and the capacity to run NNI in several Kubernetes-based services in parallel make NNI a great choice for tuning almost any model.

Microsoft NNI’s WebUI

9. MLMachine

MLMachine is another package that implements the Bayesian optimization method. It is simple to use and focuses on organizing ML workflows in Jupyter Notebooks. It can be used to accelerate many common tasks, including:

- Exploratory data analysis

- K-fold auto-encoding (for categorical data)

- Feature selection

For Bayesian optimization, MLMachine supports many estimators in a single optimization call and also includes model performance and parameter evolution visualizations. For example:

Results from Hyperparameter Optimization with MLMachine

The main drawback of MLMachine is that it is not designed to run optimizations in parallel or to use GPU clusters by default, which can be problematic for problems with large search spaces.

10. Talos

PyTorch, TensorFlow, and Keras are three of the most widely used libraries for developing solutions for computer vision and language processing (among other tasks). Talos builds on top of these to offer more than 30 utilities for performing hyperparameter optimization.

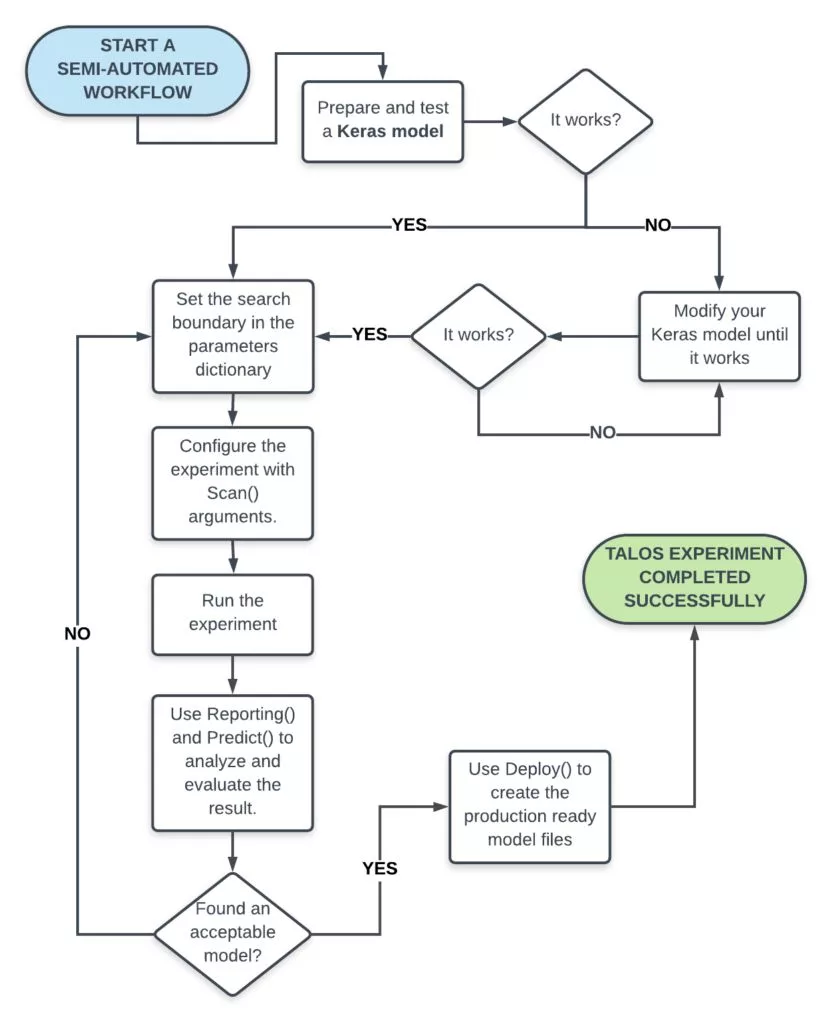

The following diagram shows a semi-automated workflow for integrating Talos with Keras to quickly get you from zero to a production-ready model:

The Talos Workflow

Talos can also be used for AutoML modeling. In this case, the only requirement is that you split the dataset for training and validation. The AutoModel, AutoParams, and AutoScan functions provide a super simple approach to almost any ML task.

When it comes to the specific use case of hyperparameter optimization, Talos provides some features that really set it apart from the competition. In particular:

- Probabilistic optimizers

- Pseudo, quasi, and quantum random search options

- The use of deep learning as a way to optimize deep learning processes

All of these features make Talos an excellent choice.
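To make the difference between pseudo-random and quasi-random search concrete, the sketch below generates a Halton sequence, a classic quasi-random (low-discrepancy) scheme, in plain Python. Whether Talos uses this particular sequence is not stated here, so treat it as a generic illustration. The hyperparameter names and ranges are made up.

```python
def radical_inverse(index, base):
    """Van der Corput radical inverse of a positive integer in the given base."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result


def halton(n_points, bases=(2, 3)):
    """First n_points of the Halton sequence in the unit hypercube."""
    return [
        tuple(radical_inverse(i, base) for base in bases)
        for i in range(1, n_points + 1)
    ]


def scale(point, bounds):
    """Map a unit-cube point onto hyperparameter ranges given as (low, high)."""
    return {
        name: low + u * (high - low)
        for u, (name, (low, high)) in zip(point, bounds.items())
    }


bounds = {"dropout": (0.0, 0.5), "learning_rate": (0.001, 0.1)}
samples = [scale(point, bounds) for point in halton(8)]
```

Each new point lands in the largest remaining gap along every axis (0.5, then 0.25, then 0.75 in base 2), so a small number of trials covers the space more evenly than independent uniform draws tend to.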

Conclusions – Python’s Hyperparameter Optimization Tools Ranked

Searching for the appropriate combination of hyperparameters can be a daunting task, given the large search space that’s usually involved.

While I’ve numbered each of these tools from 1 to 10, the numbering doesn’t reflect a “best to worst” ranking. Instead, you’ll need to choose the best tool for your particular project based on its capabilities, strengths and drawbacks. The tools in this list offer:

- Non-invasive options

- Tools that can be fully integrated into modeling tasks

- AutoML approaches to hyperparameter optimization

One of them is sure to be right for your needs.

Next steps:

Test out the tools in this blog by installing our Hyperparameter Optimization Tools Python environment for Windows, Mac & Linux, which includes a recent version of Python and most of the tools reviewed in this article.
